Chapter 24 Laboratory organization and management

Management structure and function

The management structure of a haematology laboratory should indicate a clear line of accountability of each member of staff to the head of department. In turn, the head of department may be managerially accountable to a clinical director (of laboratories) and thence to a hospital or health authority executive committee. The head of department is responsible for departmental leadership, for ensuring that the laboratory has authoritative representation within the hospital and for ensuring that managerial and administrative tasks are performed efficiently. Where the head of the department delegates managerial tasks to others, these responsibilities must be clearly defined and stated. Formerly, the director was usually a medically qualified haematologist, but nowadays in many laboratories, this role is being undertaken by appropriately qualified biomedical scientists, while medical haematologists serve as consultants. In that role, they should be fully conversant with the principles of laboratory practice, especially with interpretation and clinical significance of the various analytical procedures, so as to provide a reliable and authoritative link between laboratory and clinic. Furthermore, all medical staff, especially junior hospital doctors, should be invited to visit the laboratory, to see how it functions, how various tests are performed, their level of complexity, clinical utility and cost: this should give them confidence to order tests rationally, rather than automatically requesting all the tests listed on the laboratory request form.1

Management of the laboratory requires an executive committee answerable to the head of department. Under this executive, there should be a number of designated individuals responsible for implementing the functions of the department (Table 24.1).

Table 24.1 Example of components of a management structure

| Component |
| --- |
| Executive committee |
| Head of department |
| Business manager |
| Consultant haematologist |
| Principal scientific officer |
| Safety officer |
| Quality control officer |
| Computer and data processing supervisor |
| Sectional scientific/technical heads |
| Cytometry |
| Blood film morphology |
| Immunohaematology |
| Haemostasis |
| Blood transfusion |
| Special investigations (haemolytic anaemias, haemoglobinopathies, cytochemistry, molecular techniques, etc.) |
| Clerical supervisor |

Staff Appraisal

All members of staff should receive training to enhance their skills and to develop their careers. This requires setting of goals and regular appraisal of progress for both managerial and technical ability. The appraisal process should cascade down from the head of department and appropriate training must be given to those who undertake appraisals at successive levels. The appraiser should provide a short list of topics to the person to be interviewed, who should be encouraged to add to the list, so that each understands the items to be covered. Topics to be considered should include: quality of performance and accurate completion of assignments; productivity and dependability; ability to work in a team; and ability to relate to patients, clinicians and co-workers. It is not appropriate to include considerations relating to pay. An appraisal interview should be a constructive dialogue of the present state of development and the progress made to date; it should be open-ended and should identify future training requirements. Ideally, the staff members should leave the interviews with the knowledge that their personal development and future progress are of importance to the department; that priorities have been identified; that an action plan with milestones and a time scale has been agreed; and that progress will be monitored. Formal appraisal interviews (annually for senior staff and more often for others) should be complemented by less formal follow-up discussions to monitor progress and to check that suboptimal performance has been modified. Documentation of formal interviews can be limited to a short list of agreed objectives. Performance appraisal can have lasting value in the personal development of individuals, but the process can easily be mishandled and should not be started without training in how to hold an appraisal interview.2

Continuing Professional Development

Continuing professional development is a process of continuous systematic learning that keeps health workers up to date on developments in their professional work and thus ensures their competence to practise throughout their professional careers. Policies and programmes have been established in a number of countries and, in some, participation is a mandatory requirement for the right to practise.3

Strategic and Business Planning

Workload Assessment and Costing of Tests

A similar workload recording method was published by the College of American Pathologists,4 and the Welcan system was established in the UK.5 However, more recently, benchmarking schemes have been established that take account of productivity, cost-effectiveness and utilization compared with a peer group. The College of American Pathologists created its Laboratory Management Index Program, in which participants submit their laboratories' operating data on a quarterly basis and receive peer comparison reports from similar laboratories around the country, against which their own cost-effectiveness can be evaluated.

Financial Control

Full costing of tests includes all aspects of laboratory function (Table 24.2).

Table 24.2 Factors contributing to cost of laboratory tests

| Category | Cost factor |
| --- | --- |
| Direct costs | Staff salaries |
| | Laboratory equipment purchases |
| | Reagents and other consumables |
| | Equipment maintenance |
| | Standardization and quality control |
| | Specific technical training on equipment |
| Indirect costs | Capital costs and mortgage factor |
| | Depreciation |
| | Building repairs and routine maintenance |
| | Lighting, heating and waste disposal |
| | Personnel services |
| | Cleaning services |
| | Transport, messengers and porters |
| | Laundry services |
| | Computers and information technology |
| | Telephone and fax |
| | Postage |
| | Journals and textbooks |

Calculation of Test Costs

where

When automation is coupled with centralization of the service to another site, care must be taken to maintain service quality.6 Failure to do so will encourage clinicians to establish independent satellite laboratories. Loss of contact between clinical users and laboratory staff may compromise the pre-analytical phase of the test process and may lead to inappropriate requests, excessive requests and test samples that are of inadequate volume or are poorly identified. When services are centralized, attention must be paid to all phases (pre-analytical, analytical and post-analytical) of the test process, including the need for packaging the specimens and the cost of their transport to the laboratory.6

Test reliability

The reliability of a quantitative test is defined in terms of the uncertainty of measurement of the analyte (sometimes referred to in documents as ‘measurand’). This is based on its accuracy and precision.7

Accuracy is the closeness of agreement between the measurement that is obtained and the true value; the extent of discrepancy is the systematic error or bias. The most important causes of systematic error are listed in Table 24.3. The error can be eliminated or at least greatly reduced by using a reference standard with the test, together with internal quality control and regular checking by external quality assessment (see Chapter 25, p. 594).

Table 24.3 Systematic errors in analyses

| Source of systematic error |
| --- |
| Analyser calibration uncertain (no reference standard available) |
| Bias in instrument, equipment or glassware |
| Faulty dilution |
| Faults in the measuring steps (e.g. reagents, spectrometry, calculations) |
| Sampling not representative of specimen |
| Specimens not representative of in vivo status (Ch. 1, p. 3) |
| Incomplete definition of analyte or lack of critical resolution of analyser |
| Approximations and arbitrary assumptions inherent in analyser’s function |
| Environmental effects on analyser |
| Pre-analytical deterioration of specimens |

Precision is the closeness of agreement when a test is repeated a number of times. Imprecision is the result of random errors; it is expressed as standard deviation (SD) and coefficient of variation (CV%). When the data are spread normally (Gaussian distribution), for clinical purposes, there is a 95% probability that results that fall within a range of +2SD to −2SD of the target value are correct and a 99% probability if within the range of +3SD to −3SD (see also Fig. 2.1).
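As a rough illustration of how SD, CV% and the ±2SD range relate, the sketch below summarizes a set of replicate measurements. The replicate values are hypothetical, the function names are illustrative only, and the sample SD (n − 1 denominator) is assumed.

```python
from statistics import mean, stdev


def precision_summary(replicates):
    """Return (mean, SD, CV%) for replicate measurements of one specimen."""
    m = mean(replicates)
    sd = stdev(replicates)          # sample SD, n - 1 denominator (assumption)
    cv_percent = 100.0 * sd / m
    return m, sd, cv_percent


def sd_limits(target, sd, k=2):
    """Return the (lower, upper) limits at target -/+ k SD."""
    return target - k * sd, target + k * sd


# Hypothetical haemoglobin replicates (g/l) measured on a single specimen
hb = [132, 134, 133, 131, 135, 133, 132, 134, 133, 133]
m, sd, cv = precision_summary(hb)
low, high = sd_limits(m, sd, k=2)
print(f"mean={m:.1f} SD={sd:.2f} CV={cv:.2f}% 2SD range={low:.1f} to {high:.1f}")
```

A result outside the printed range would be unlikely to arise from random error alone, assuming a Gaussian spread.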

Some of the other factors listed in Table 24.3 can be quantified to calculate the combined uncertainty of measurement. Thus, for example, when a calibration preparation is used, its uncertainty is usually stated on the label or accompanying certificate. The standard uncertainty is then calculated from the sum of the quantified uncertainties as follows:

Expanded uncertainty of measurement takes account of non-quantifiable items by multiplying the previous amount by a ‘coverage factor’ (k), which is usually taken to be ×2 for 95% level of confidence.7,8
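The calculation can be sketched as follows. This is only an illustration: it assumes that the quantified components are independent and are combined as the square root of the sum of their squares (the usual metrological convention), which may differ in detail from the formula intended in the text. The component values and function names are hypothetical.

```python
import math


def combined_standard_uncertainty(components):
    """Combine independent standard uncertainties in quadrature (assumed rule)."""
    return math.sqrt(sum(u * u for u in components))


def expanded_uncertainty(u_combined, k=2.0):
    """Multiply by a coverage factor k; k = 2 for about 95% confidence."""
    return k * u_combined


# Hypothetical components, all in g/l: calibrator uncertainty and imprecision (SD)
components = [0.6, 0.8]
u_c = combined_standard_uncertainty(components)
u_exp = expanded_uncertainty(u_c, k=2.0)
print(f"combined u = {u_c:.2f} g/l, expanded U (k=2) = {u_exp:.2f} g/l")
```

All components must be expressed in the same units (or all as relative values) before they are combined.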

It may be necessary to decide by statistical analysis whether two sets of data differ significantly. The t-test is used to assess the likelihood of significant difference at various levels of probability by comparing the means or individually paired results. The F-ratio is useful to assess the influence of random errors in two sets of test results (see Appendix, p. 625).
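A minimal sketch of the two statistics is given below, assuming paired results for the t-test and the ratio of the larger to the smaller variance for the F-ratio. The data and function names are hypothetical, and the sketch stops at the test statistic: the probability still has to be read from tables such as those referred to in the Appendix.

```python
import math
from statistics import mean, stdev, variance


def paired_t_statistic(a, b):
    """Return (t, degrees of freedom) for individually paired results."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def f_ratio(a, b):
    """Return the larger sample variance divided by the smaller one."""
    va, vb = variance(a), variance(b)
    return max(va, vb) / min(va, vb)


# Hypothetical paired results obtained on the same four specimens
set_a = [10, 12, 14, 16]
set_b = [9, 12, 12, 15]
t, df = paired_t_statistic(set_a, set_b)
print(f"t = {t:.3f} with {df} degrees of freedom, F = {f_ratio(set_a, set_b):.3f}")
```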

Of particular importance are reports with ‘critical laboratory values’ that may be indicative of life-threatening conditions requiring rapid clinical intervention. Haemoglobin concentration, platelet count and activated partial thromboplastin time have been included in this category.9 The development of critical values should involve consultation with clinical services.

Test selection

It is important for the laboratory to be aware of the limits of accuracy that it achieves in its routine performance, both within each day and from day to day.10 Clinicians should be made aware of the level of uncertainty of results for any test and the potential effect of this on their diagnosis and interpretation of response to treatment (see below).

To evaluate the diagnostic reliability and predictive value of an individual laboratory test, it is necessary to calculate test sensitivity and specificity.11 Sensitivity is the fraction of true positive results when a test is applied to patients known to have the relevant disease or when results have been obtained by a reference method. Specificity is the fraction of true negative results when the test is applied to normals.

$$\text{Sensitivity} = \frac{TP}{TP + FN} \qquad \text{Specificity} = \frac{TN}{TN + FP}$$

where TP = true positive; TN = true negative; FP = false positive; FN = false negative.
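As an illustration, the sketch below computes these measures from the four counts, using the usual definitions: sensitivity = TP/(TP + FN), specificity = TN/(TN + FP) and overall reliability = (TP + TN)/(all results). The class name and the counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiagnosticCounts:
    """Counts from comparing a test with known disease status."""
    tp: int  # true positives
    fn: int  # false negatives
    tn: int  # true negatives
    fp: int  # false positives

    def sensitivity(self):
        return self.tp / (self.tp + self.fn)

    def specificity(self):
        return self.tn / (self.tn + self.fp)

    def overall_reliability(self):
        total = self.tp + self.tn + self.fp + self.fn
        return (self.tp + self.tn) / total


# Hypothetical evaluation: 100 patients with the disease, 200 without
counts = DiagnosticCounts(tp=90, fn=10, tn=160, fp=40)
print(f"sensitivity={counts.sensitivity():.2f} "
      f"specificity={counts.specificity():.2f} "
      f"overall={counts.overall_reliability():.3f}")
```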

Overall reliability can be calculated as:

$$\text{Overall reliability} = \frac{TP + TN}{TP + TN + FP + FN}$$

Likelihood Ratio

The ratio of positive results in disease to the frequency of false-positive results in healthy individuals gives a statistical measure of the discrimination by the test between disease and normality. It can be calculated as follows:12

$$\text{Likelihood ratio} = \frac{\text{Sensitivity}}{1 - \text{Specificity}}$$

An alternative measure is Youden's index, which is obtained by calculating Sensitivity + Specificity − 1.13 Values range between −1 and +1. As the index rises above zero towards +1, there is an increasing probability that the test will discriminate the presence of the specified disease; as it falls from 0 towards −1, it becomes decreasingly likely that the test is valid.
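Both measures can be computed directly from sensitivity and specificity, as in the sketch below. It assumes that the likelihood ratio is sensitivity/(1 − specificity) and that Youden's index takes its usual form, sensitivity + specificity − 1, which is the form consistent with a range of −1 to +1. The function names and input values are hypothetical.

```python
def positive_likelihood_ratio(sensitivity, specificity):
    """True-positive rate divided by false-positive rate."""
    if specificity >= 1.0:
        return float("inf")  # no false positives at all
    return sensitivity / (1.0 - specificity)


def youden_index(sensitivity, specificity):
    """Youden's index in its usual form; ranges from -1 to +1."""
    return sensitivity + specificity - 1.0


# Hypothetical test with 90% sensitivity and 80% specificity
lr = positive_likelihood_ratio(0.9, 0.8)
j = youden_index(0.9, 0.8)
print(f"likelihood ratio = {lr:.1f}, Youden index = {j:.2f}")
```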

Receiver–Operator Characteristic Analysis

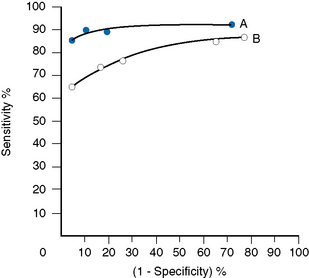

The relative usefulness of different methods for the same test or of a new method against a reference method can also be assessed by analysing the receiver–operator characteristics (ROC).12 This is demonstrated on a graph by plotting the true-positive rates (sensitivity) on the vertical axis against false-positive rates (1 − specificity) on the horizontal axis for a series of paired measurements (Fig. 24.1). Obviously, the ideal test would show high sensitivity (i.e. 100% on vertical axis), with no false positives (i.e. 0% on horizontal axis). Realistically, there would be a compromise between the two criteria, with test selection depending on its purpose, i.e. whether it is to be used as a screening test to exclude the disease in question or to confirm a clinical suspicion that the disease is present. In the illustrated case, Test A is more reliable than Test B in both circumstances.
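The points of such a curve can be generated by sweeping a cut-off across the test results, as in the sketch below. It assumes that higher results indicate disease; the data and function names are hypothetical. The area under the curve is not mentioned in the text and is added here only as a convenient single-number summary for comparing two tests.

```python
def roc_points(scores, has_disease):
    """Return (false-positive rate, true-positive rate) pairs, one per cut-off."""
    positives = sum(has_disease)
    negatives = len(has_disease) - positives
    points = [(0.0, 0.0)]
    # A result is called positive when it is at or above the cut-off
    for cutoff in sorted(set(scores), reverse=True):
        tp = sum(1 for s, d in zip(scores, has_disease) if d and s >= cutoff)
        fp = sum(1 for s, d in zip(scores, has_disease) if not d and s >= cutoff)
        points.append((fp / negatives, tp / positives))
    return points


def area_under_curve(points):
    """Trapezoidal area under the ROC points (1.0 would be an ideal test)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Hypothetical results for four patients with the disease and four without
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
has_disease = [True, True, False, True, True, False, False, False]
points = roc_points(scores, has_disease)
print(points)
print(f"area under curve = {area_under_curve(points):.3f}")
```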

Test Utility

For assessing cost-effectiveness of a particular test, account must be taken of (1) cost per test as compared with other tests that provide similar clinical information; (2) diagnostic reliability; and (3) clinical usefulness as assessed by the extent to which the test is relied on in clinical decisions and whether the results are likely to change the physician’s diagnostic opinion and the clinical management of the patient, taking account of disease prevalence and a specified clinical or public health situation. This requires audit by an independent assessor to judge what proportion of the requests for a particular test are actually used intelligently and what percentage are unnecessary or wasted tests.14,15 Information on the utility of various tests can also be obtained from benchmarking (see p. 579) and published guidelines. Examples of the latter are the documents published by the British Committee for Standards in Haematology (www.BCSHGuidelines.com). The realistic cost-effectiveness of any test may be assessed by the formula:

Instrumentation

Equipment Evaluation

Assessment of the clinical utility and cost-effectiveness of equipment to match the nature and volume of laboratory workload is a very important exercise. Guidelines for evaluation of blood cell analysers and other haematology instruments have been published by the International Council for Standardization in Haematology.16 In the UK, appraisal of various items of laboratory equipment was formerly undertaken by selected laboratories at the request of the Department of Health’s Medical Devices Agency, subsequently renamed Medicines and Healthcare products Regulatory Agency (MHRA) and now replaced by the Centre for Evidence-based Purchasing (CEP). (Their reports can be accessed from the website: www.pasa.nhs.uk/ceppublications.)

Principles of Evaluation

The following aspects are usually included in evaluations:

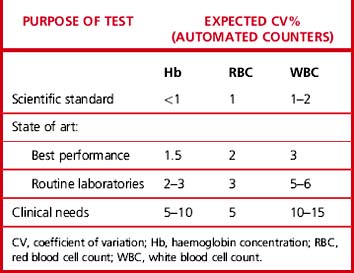

Precision

Carry out appropriate measurements 10 times consecutively on three or more specimens selected in the pathological range so as to include a low, a high and a middle range concentration of the analyte. Calculate the replicate SD and CV as shown on p. 625. The degree of precision that is acceptable depends on the purpose of the test (Table 24.4). To check between-batch precision, measure three samples in several successive batches of routine tests; calculate the SD and CV in the same way.

Carryover

$$\text{Carryover (\%)} = \frac{l_1 - l_3}{h_3 - l_3} \times 100$$

where l1 and l3 are the results of the first and third measurements of the sample with a low concentration and h3 is the third measurement of the sample with a high concentration.
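The calculation can be sketched as follows, assuming that carryover is expressed as (l1 − l3)/(h3 − l3) × 100 and that the high sample is measured three times immediately before the low sample is measured three times. The readings and the function name are hypothetical.

```python
def carryover_percent(low_results, high_results):
    """Percentage carryover from three high then three low measurements."""
    l1, _, l3 = low_results       # first and third low-concentration readings
    h3 = high_results[2]          # third high-concentration reading
    return 100.0 * (l1 - l3) / (h3 - l3)


# Hypothetical readings: high sample in triplicate, then low sample in triplicate
high = [200.0, 201.0, 200.0]
low = [10.4, 10.1, 10.0]
print(f"carryover = {carryover_percent(low, high):.2f}%")
```

The first low reading is the one most affected by the preceding high sample, which is why it is compared with the third.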

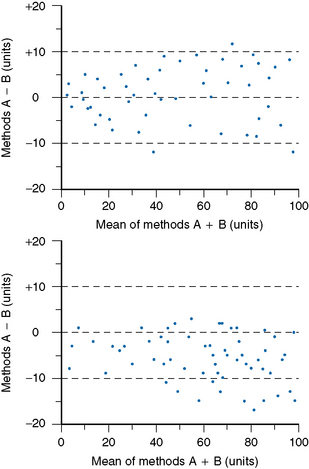

Accuracy and Comparability

Accuracy and comparability test whether the new instrument (or method) gives results that agree satisfactorily with those obtained with an established procedure and with a reference method. Test specimens should be measured alternately, or in batches, by the two procedures. If results by the two methods are analysed by correlation coefficient (r), a high correlation does not mean that the two methods agree: the correlation coefficient is a measure of relation, not of agreement. It is better to use the limits of agreement method.12 For this, plot the differences between paired results on the vertical axis of linear graph paper against the means of the pairs on the horizontal axis (Fig. 24.2); differences between the methods are then readily apparent over the range from low to high values. If the scatter of differences increases at high values, logarithmically transformed data should be plotted.
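The values needed for such a plot can be prepared as in the sketch below. It assumes the conventional limits of mean difference ± 1.96 SD of the differences, which the text does not spell out; the paired results and the function name are hypothetical.

```python
from statistics import mean, stdev


def limits_of_agreement(new_method, reference, z=1.96):
    """Return plot coordinates, bias and limits for paired results."""
    differences = [a - b for a, b in zip(new_method, reference)]
    means = [(a + b) / 2 for a, b in zip(new_method, reference)]
    bias = mean(differences)          # mean difference between the methods
    sd = stdev(differences)           # scatter of the differences
    return {
        "means": means,               # horizontal axis
        "differences": differences,   # vertical axis
        "bias": bias,
        "lower": bias - z * sd,       # assumed limit: bias - 1.96 SD
        "upper": bias + z * sd,       # assumed limit: bias + 1.96 SD
    }


# Hypothetical paired results on the same four specimens
new_method = [10, 12, 14, 16]
reference = [9, 12, 12, 15]
result = limits_of_agreement(new_method, reference)
print(f"bias = {result['bias']:.2f}, limits = "
      f"{result['lower']:.2f} to {result['upper']:.2f}")
```

If the differences widen at higher means, the same function can be applied to the logarithms of the results.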