Fig. 21.1

Age-standardized incidence rates per 100,000 person-years. Rates were standardized to the World Standard Population. Data based on [4]

CRC is rare before age 40, but incidence rises with age thereafter. Because rates increase rapidly after age 50, approximately 90 % of CRC cases are diagnosed in people aged 50 years or older [5]. For an average individual in a typical Western population (e.g., the U.S.), the lifetime risk of developing CRC is approximately 5 % [6].

Globally, the age-standardized incidence rate is about 1.4 times higher in men (20.6/100,000 person-years) than in women (14.3/100,000 person-years) [7]. Women, however, live longer than men on average, and their median age at diagnosis is higher (73 years) than that of men (69 years) [8]. The number of CRC deaths is only slightly higher in men (373,631/year) than in women (320,250/year) [7].
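The rates in Fig. 21.1 and the paragraph above are age-standardized: each population's age-specific rates are averaged using the age distribution of one common standard population, so that differences in age structure do not drive comparisons between groups. The sketch below illustrates direct standardization; the three age bands, the rates, and the weights are hypothetical placeholders, not the actual World Standard Population weights or the data in [4].

```python
# Direct age standardization (a sketch). All numbers below are HYPOTHETICAL
# placeholders, not the World Standard Population weights or data from [4].

def age_standardized_rate(rates_per_100k, standard_weights):
    """Weighted average of age-specific rates, using standard-population weights."""
    if len(rates_per_100k) != len(standard_weights):
        raise ValueError("need exactly one standard weight per age band")
    total = sum(standard_weights)
    return sum(r * w for r, w in zip(rates_per_100k, standard_weights)) / total


# Hypothetical age bands: <50, 50-69, >=70 years
standard_weights = [70_000, 22_000, 8_000]
rates_men = [5.0, 80.0, 250.0]      # per 100,000 person-years (hypothetical)
rates_women = [4.5, 55.0, 180.0]    # per 100,000 person-years (hypothetical)

asr_men = age_standardized_rate(rates_men, standard_weights)
asr_women = age_standardized_rate(rates_women, standard_weights)
print(f"ASR men {asr_men:.1f}, women {asr_women:.1f}, ratio {asr_men / asr_women:.2f}")
```

Because both sexes are weighted by the same standard population, the resulting ratio reflects differences in age-specific rates rather than differences in age structure.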

In the U.S., African Americans have the highest age-standardized incidence rate among all racial and ethnic groups (men: 62.3/100,000 person-years; women: 47.5/100,000 person-years), followed by White, Hispanic, Asian/Pacific Islander, and American Indian/Alaska Native people [9].

21.3 Risk Factors

The risk of CRC is predominantly attributed to unhealthy lifestyle factors associated with Westernization. Migrant studies have observed that CRC incidence and mortality rates among immigrants who moved from low-risk to high-risk countries converged to the rates of the host countries [10, 11], indicating the importance of environmental influences in colorectal carcinogenesis. In the U.S., as much as 50 % of CRC cases have been estimated to be attributable to an unhealthy diet, physical inactivity, and excess body weight [12]. When low intake of folic acid from supplements and long-term smoking are also included, more than two-thirds of CRC cases can be attributed to lifestyle factors [13]. Because these lifestyle factors are modifiable, such a large population attributable risk percent implies that a considerable number of CRC cases could be prevented through lifestyle modification.
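The percentages above are population attributable fractions (PAFs): the share of cases that would not occur if an exposure were removed. A minimal sketch, assuming a single binary exposure per factor and using Levin's formula, PAF = p(RR − 1) / (1 + p(RR − 1)), where p is exposure prevalence and RR the relative risk. It also shows why adding factors raises the total (as from 50 % to more than two-thirds), combining PAFs as 1 − Π(1 − PAFᵢ) under the assumption that exposures are independent. The prevalences and RRs are hypothetical, and the methods used in [12, 13] may differ.

```python
from math import prod

def levin_paf(prevalence, relative_risk):
    """PAF for one binary exposure via Levin's formula."""
    excess = prevalence * (relative_risk - 1.0)
    return excess / (1.0 + excess)

def combined_paf(pafs):
    """Combine PAFs, ASSUMING independent exposures acting multiplicatively."""
    return 1.0 - prod(1.0 - p for p in pafs)

# HYPOTHETICAL exposures: (prevalence in the population, relative risk)
exposures = {
    "unhealthy diet": (0.5, 1.5),
    "physical inactivity": (0.4, 1.4),
    "excess body weight": (0.6, 1.3),
}
pafs = {name: levin_paf(p, rr) for name, (p, rr) in exposures.items()}
for name, value in pafs.items():
    print(f"{name}: {value:.1%}")
print(f"combined: {combined_paf(pafs.values()):.1%}")
```

The product rule does not hold when exposures are correlated, as lifestyle factors often are; in that case joint models of the exposures are needed.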

In contrast, some factors that predispose people to CRC are non-modifiable. Although they explain a smaller proportion of CRC cases, non-modifiable risk factors, including a positive family history and inherited genetic factors, account for differences in individual susceptibility to CRC (Table 21.1) [14–16] and contribute particularly to early-onset CRC.

Table 21.1 Non-modifiable risk factors for colorectal cancer

| Group | Lifetime risk of colorectal cancer (%) | Contribution to colorectal cancer cases (%) |
|---|---|---|
| Average population in the U.S.ᵃ | 5 | 75 |
| *High-risk populations* | | |
| Familial adenomatous polyposis | 100 | 1 |
| Hereditary non-polyposis colorectal cancer syndrome | 50 | 5 |
| Inflammatory bowel disease | 5–50 | 1 |
| Family history of colorectal cancer | 10–15 | 15–20 |
| Past history of adenomas | 10–20 | Variable |

21.3.1 Non-modifiable Risk Factors

21.3.1.1 Hereditary Syndromes

Most CRC occurs sporadically (i.e., without a known hereditary cause). Fewer than 10 % of CRC cases are due to inherited gene mutations [17], and these cancers tend to manifest at younger ages (under age 50). The two most common familial syndromes that predispose people to CRC are hereditary non-polyposis colorectal cancer (HNPCC) syndrome and familial adenomatous polyposis (FAP).

HNPCC syndrome (also known as Lynch syndrome), the most common of these familial syndromes, is caused by mutations in DNA mismatch repair genes that are inherited in an autosomal dominant fashion [17]. HNPCC syndrome increases CRC risk by elevating the malignant potential of adenomas (described below): affected individuals do not have an unusual number of adenomas, but the adenomas they develop are more likely to progress to CRC. Approximately half of individuals with HNPCC syndrome develop CRC, and the mean age at diagnosis is 44 years [18].

FAP is an autosomal dominant disease caused by an inherited mutation in the adenomatous polyposis coli (APC) gene, a tumor suppressor. APC mutations are hallmarks of early-stage colorectal carcinogenesis. Unlike individuals with HNPCC syndrome, individuals with FAP develop hundreds to thousands of adenomas in their teens or early adulthood. Although fewer than 10 % of adenomas progress to cancer [19], with so many adenomas present, some inevitably undergo malignant transformation. Thus, affected individuals have an almost 100 % chance of developing CRC by the age of 40 unless treated with colectomy. However, FAP is rare (about 1 in 10,000 people) [20] and accounts for less than 1 % of CRC cases [21].
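The FAP argument is essentially a probability calculation: if each adenoma has a small chance of becoming malignant, the chance that at least one of many adenomas does so is 1 − (1 − p)ⁿ, which approaches certainty as n grows. A minimal sketch, assuming adenomas progress independently and using a hypothetical per-adenoma progression probability of 5 % (consistent with "fewer than 10 %", but not a value given in the source).

```python
def prob_at_least_one_progresses(n_adenomas, p_progress):
    """P(at least one of n adenomas becomes cancer), ASSUMING independence."""
    if not 0.0 <= p_progress <= 1.0:
        raise ValueError("p_progress must be a probability")
    return 1.0 - (1.0 - p_progress) ** n_adenomas

p = 0.05  # HYPOTHETICAL per-adenoma progression probability
for n in (1, 3, 100, 1000):  # few adenomas (sporadic) vs. hundreds to thousands (FAP)
    print(n, round(prob_at_least_one_progresses(n, p), 3))
```

Even with a low per-adenoma probability, hundreds of adenomas push the cumulative probability close to 1, which is why prophylactic colectomy is the standard recommendation in FAP.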

21.3.1.2 Personal History of Inflammatory Bowel Disease (Particularly Ulcerative Colitis)

Ulcerative colitis and Crohn’s disease, the two most common forms of inflammatory bowel disease, cause chronic inflammation in all or part of the gastrointestinal tract. Chronic inflammation in the colon triggers compensatory proliferation to regenerate damaged tissue, which increases opportunities for mutations to occur [22]. Accumulated genetic alterations can lead to dysplasia and subsequently to cancer. Thus, in these patients CRC develops not through adenomas, the classical precursor, but through dysplasia induced by chronic inflammation. Although CRC risk varies with the duration and anatomic extent of the disease, affected individuals, particularly those with ulcerative colitis, can have up to a 50 % chance of developing CRC if untreated [23], and the mean age at diagnosis is between 40 and 50 years [24]. Thus, together with HNPCC syndrome and FAP, ulcerative colitis defines one of the three subgroups at highest risk of developing CRC.

21.3.1.3 Family History of Colorectal Cancer

The lifetime risk of CRC is roughly doubled for individuals with a family history of CRC in a first-degree relative (parents, siblings, or children). The risk is even higher if the first-degree relative is diagnosed young or if more than one first-degree relative is affected [25, 26]. The increased risk may be attributable to inherited genes, shared environmental factors, or combinations of the two. A family history of CRC is found in approximately 15–20 % of CRC patients.

21.3.1.4 Adenomas (Adenomatous Polyps)

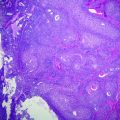

A colorectal polyp is an abnormal growth of tissue arising from the inner lining of the large intestine and protruding into the lumen. Histologically, the most common types are hyperplastic and adenomatous polyps [27]. Hyperplastic polyps are generally non-cancerous. Of note, serrated polyps, once considered a subgroup of hyperplastic polyps, are now recognized as a distinct type with malignant potential (i.e., precancerous).

Adenomatous polyps (adenomas), which originate from the mucus-secreting epithelial cells of the colorectum, are benign in themselves but harbor malignant potential. Although fewer than 10 % of adenomas progress to cancer, more than 95 % of sporadic CRCs develop from adenomas [19, 28]. Adenomas are therefore the classical precursor lesions of CRC, and this carcinogenic pathway is termed the adenoma-carcinoma sequence. Advanced adenomas, defined as adenomas that are large (≥1 cm in diameter), have a villous component, or show high-grade dysplasia, have a particularly high propensity to develop into cancer [29].

Adenomas are common in Western countries. About one-third of asymptomatic, average-risk individuals aged 50–75 years harbor adenomas [30]. A substantial proportion of people who have had an initial adenoma removed develop recurrent adenomas within three years [31]. Although it varies with the characteristics of the adenomas and with subsequent colonoscopic surveillance, the lifetime risk of CRC among individuals with a history of adenomas is estimated to be 10–20 %.

21.3.2 Modifiable Risk/Protective Factors

21.3.2.1 Risk Factors Associated with Westernization (Obesity, Sedentary Lifestyle, Western Dietary Pattern)

The consistently high incidence rates of CRC in Westernized countries have been attributed to environmental factors associated with Westernization. A number of risk factors have been identified with relatively high consistency, although the underlying mechanisms are not fully understood. Initial hypotheses focused on the direct effects of high-fat and low-fiber intakes on the colorectal lumen, suggesting that their influence on fecal contents may drive colorectal carcinogenesis [32, 33]. However, accumulating evidence supports an alternative hypothesis that implicates insulin and insulin-like growth factor 1 (IGF-1) [32]. The observation that risk factors for hyperinsulinemia are also risk factors for CRC led to the hypothesis that hyperinsulinemia and a corresponding increase in free IGF-1 may be the underlying mediators linking lifestyle risk factors associated with Westernization to CRC (Fig. 21.2) [32]. Insulin and IGF-1 increase proliferation and decrease apoptosis of colorectal epithelial cells, thereby promoting colorectal carcinogenesis [33, 34]. This insulin/IGF hypothesis is supported by a nested case-control study among non-diabetic people, which observed a lower risk of CRC among those with lower plasma levels of both C-peptide (a marker of insulin secretion) and the IGF-1/IGF binding protein ratio [35].

Obesity

Evidence from prospective cohort studies consistently indicates that excess adiposity may elevate the risk of CRC, with the association stronger for colon cancer (CC) than for rectal cancer (RC) and stronger in men than in women. The association has been investigated using anthropometric measures including body mass index (BMI), waist circumference (WC) or waist-to-hip ratio (WHR), and adult weight change. Although correlated with one another, these measures capture different aspects of adiposity: overall body fatness, abdominal fatness, and time-integrated fat accumulation, respectively. Generally, overweight or obese men have a 1.5- to 2-fold increased risk of CC compared with men in the normal or low range of BMI. The adverse effect of excess adiposity is seen more consistently with WC or WHR across epidemiologic studies, suggesting that abdominal adiposity is particularly relevant to CC risk. For instance, in the European Prospective Investigation into Cancer and Nutrition (EPIC) study, men with BMI ≥29.4 kg/m² had a 55 % increased risk of CC compared with men with BMI <23.6 kg/m², but no significant association was found in women [36]. In contrast, a comparison of extreme quintiles of WHR showed an approximately 50 % increased risk of CC in both men and women [36]. Annual weight gain (kg/year) during adulthood (from age 20 to 50) was positively associated with CC, especially if it resulted in an increase in WC [37].
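The three anthropometric measures above are simple ratios, and writing them out makes clear what each one captures. A minimal sketch; the example person is hypothetical, and the two BMI cut points are the EPIC category bounds for men quoted from [36].

```python
def bmi(weight_kg, height_m):
    """Body mass index in kg/m^2 (overall body fatness)."""
    return weight_kg / height_m ** 2

def waist_to_hip_ratio(waist_cm, hip_cm):
    """Waist-to-hip ratio (abdominal fatness); the units cancel."""
    return waist_cm / hip_cm

def annual_weight_gain(weight_start_kg, weight_end_kg, age_start, age_end):
    """Average adult weight change in kg/year (time-integrated accumulation)."""
    return (weight_end_kg - weight_start_kg) / (age_end - age_start)

EPIC_LOWEST_BMI_MEN = 23.6   # upper bound of the lowest BMI category in men [36]
EPIC_HIGHEST_BMI_MEN = 29.4  # lower bound of the highest BMI category in men [36]

def epic_bmi_group(value):
    if value < EPIC_LOWEST_BMI_MEN:
        return "lowest"
    if value >= EPIC_HIGHEST_BMI_MEN:
        return "highest"
    return "intermediate"

# HYPOTHETICAL man: 70 kg at age 20, 88 kg at age 50, height 1.75 m
b = bmi(88.0, 1.75)
print(round(b, 1), epic_bmi_group(b))
print(round(waist_to_hip_ratio(102.0, 104.0), 2))
print(annual_weight_gain(70.0, 88.0, 20, 50))  # kg/year from age 20 to 50
```

This hypothetical man falls in an intermediate BMI category while having a high WHR, which illustrates how BMI and WC/WHR can classify the same person differently.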

Substantial evidence points to abdominal fat, specifically visceral adipose tissue (VAT), as a principal etiologic factor in CC pathogenesis. First, the association between excess adiposity and CC risk is stronger in men than in women [38], and men tend toward an abdominal distribution of fat [39, 40]. Second, the positive association of WC or WHR with CC risk remained significant even after adjustment for BMI, whereas that of BMI became non-significant after adjustment for WC or WHR [36, 41]. Third, in studies that measured abdominal VAT and subcutaneous adipose tissue (SAT) by computed tomography (CT), VAT but not SAT was associated with adenomas, particularly advanced colorectal adenomas [42–44]. Finally, in a study that included BMI, WC, and VAT simultaneously in the same regression model, only VAT was a statistically significant predictor of adenomas [45]. Taken together, these findings suggest that VAT may be the underlying mediator linking excess adiposity to colorectal neoplasia; the association between WC and CC independent of BMI may thus reflect the fact that WC captures VAT better than BMI does.

The biological mechanisms that may explain the specific contribution of VAT to CC risk align well with the insulin/IGF hypothesis. Compared with SAT, VAT secretes more pro-inflammatory cytokines (e.g., interleukin-6, tumor necrosis factor-α) and less insulin-sensitizing adiponectin [46–48]. Furthermore, VAT more readily releases free fatty acids into the circulation; in response, the liver and muscle preferentially take up fatty acids over glucose and become less responsive to insulin [48–50]. Hence, VAT is more strongly associated with insulin resistance and, consequently, more relevant to the etiology of CC. In this view, VAT may also help explain differences in the incidence of colorectal neoplasia by sex and race. On average, men have more VAT than women [40, 51]. Asians, who tend toward lower muscle mass and greater abdominal adiposity, have more VAT than Caucasians at a given BMI [52]. Consistently, higher incidence rates of colorectal neoplasia are observed in men than in women, and in Asians in the normal BMI range than in Caucasians with equivalent BMIs [53].

Sedentary Lifestyle

With Westernization, people have become highly sedentary in diverse domains of life, including occupation and recreation. Sedentary behavior, characterized by prolonged sitting, is distinct from physical inactivity, which describes the absence of structured and purposive exercise.

Sedentary lifestyle is emerging as an independent risk factor for CRC, possibly because prolonged sitting initiates qualitatively unique cellular and molecular responses in skeletal muscle that may be relevant to colorectal carcinogenesis [54, 55]. To date, the largest prospective study has been conducted in the NIH-AARP Diet and Health Study cohort, in which sedentary lifestyle was captured by time spent watching TV. Independent of physical activity, participants with ≥63 h/week of TV viewing had an approximately 1.5-fold increased risk of CC compared with those with <21 h/week [56].

Western Dietary Pattern

People eat foods not in isolation but in combination. Thus, in epidemiologic studies, analyzing dietary patterns, which capture the combined effects of nutrients and foods as they are consumed in a population, has some advantages over the single-nutrient or single-food approach [57]. With regard to CRC, one of the most commonly identified dietary patterns is an unhealthy Western diet characterized by high consumption of red/processed meats and refined grains [58]. Although studies varied in whether high-fat dairy products and French fries were included in defining the Western dietary pattern, a deleterious effect of this pattern on CRC risk has been consistently reported [58]. In a recent meta-analysis of 11 observational studies, the risk of CC, but not RC, increased with greater adherence to the Western dietary pattern [59].
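One common way to derive such patterns from food-intake data is principal component (or factor) analysis: foods that tend to be eaten together load on the same component, and each person's score on that component measures adherence to the pattern. The source does not state which method the studies in [58, 59] used, so this is an illustrative assumption. The sketch below uses only the standard library, finds the leading component by power iteration, and runs on simulated, hypothetical intakes that are already on comparable scales; real analyses usually use many food groups, standardized variables, and often a rotation step.

```python
import random

def column_means(rows):
    n = len(rows)
    return [sum(r[j] for r in rows) / n for j in range(len(rows[0]))]

def covariance_matrix(rows):
    """Sample covariance matrix of the intake columns."""
    n, means = len(rows), column_means(rows)
    centered = [[x - m for x, m in zip(r, means)] for r in rows]
    k = len(means)
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(k)]
            for i in range(k)]

def first_principal_component(cov, iterations=200):
    """Leading eigenvector of a symmetric matrix via power iteration."""
    v = [1.0] * len(cov)
    for _ in range(iterations):
        w = [sum(a * b for a, b in zip(row, v)) for row in cov]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    return v

def pattern_scores(rows, loadings):
    """Each person's pattern score: centered intakes projected onto the loadings."""
    means = column_means(rows)
    return [sum((x - m) * w for x, m, w in zip(r, means, loadings)) for r in rows]

# SIMULATED (hypothetical) intakes: red/processed meat, refined grains, vegetables.
# The first two share a latent "Western" eating tendency; vegetables are independent.
rng = random.Random(42)
rows = []
for _ in range(500):
    western = rng.gauss(0, 1)
    rows.append([western + rng.gauss(0, 0.1),
                 western + rng.gauss(0, 0.1),
                 rng.gauss(0, 0.5)])

loadings = first_principal_component(covariance_matrix(rows))
scores = pattern_scores(rows, loadings)
print([round(w, 2) for w in loadings])
```

The leading component loads heavily on red/processed meat and refined grains but barely on vegetables, mirroring how a "Western" pattern emerges from correlated food choices; the per-person scores could then be entered into a risk model as the adherence measure.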

High consumption of red/processed meat, which predominantly characterizes the Western dietary pattern, may contribute to carcinogenesis not only through adverse effects on circulating insulin concentrations [60], but also through heme iron [61] and carcinogens (N-nitroso compounds [62]; heterocyclic amines and polycyclic aromatic hydrocarbons, which are produced when meats are cooked at high temperatures [63]).

21.3.2.2 Other Risk Factors

Smoking

A wide range of carcinogens in cigarette smoke can readily reach the colorectal mucosa through the circulation or direct ingestion, inducing genetic mutations or epigenetic alterations [64]. Long-term heavy smoking, particularly when initiated at an early age, is a strong risk factor for CRC, especially RC. According to a recent dose–response meta-analysis, CRC risk decreased by 4 % for each 10-year delay in smoking initiation but increased by 20 % for each 40-year increase in smoking duration [65]. Of note, a positive association between smoking and CRC risk was not supported by earlier studies conducted soon after the onset of the smoking epidemic (around the 1920s for men and the late 1940s for women), but was demonstrated in later studies that allowed for a 35–40-year induction period between the rise of smoking and CRC diagnosis [64, 66–68]. Thus, smoking appears to act predominantly at an early stage of carcinogenesis.
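Dose–response meta-analyses such as [65] report a relative risk per fixed increment of exposure. Under a log-linear dose–response assumption (an assumption here; the cited model may include non-linear terms), the RR for any other increment follows by scaling the exponent: RR(Δ) = RR_unit^(Δ / unit). A minimal sketch using the two estimates quoted above.

```python
import math

def scale_rr(rr_per_unit, unit, increment):
    """Rescale a per-unit relative risk to another increment, ASSUMING log-linearity."""
    return math.exp(math.log(rr_per_unit) * increment / unit)

rr_per_10y_delay = 0.96      # per 10-year later start of smoking [65]
rr_per_40y_duration = 1.20   # per 40 years of smoking duration [65]

print(round(scale_rr(rr_per_40y_duration, 40, 20), 3))  # 20 years of smoking
print(round(scale_rr(rr_per_10y_delay, 10, 20), 3))     # 20-year delay in initiation
```

The same rescaling applies to other per-increment estimates in this chapter, such as the fiber estimate per 10 g/day, provided the log-linear assumption is reasonable over the range considered.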

The mutagenic effect of tobacco smoke has generally been considered irreversible, as most studies have shown that past smokers carry a persistently higher risk than non-smokers [66]. However, recent epidemiologic studies of the duration of smoking cessation and CRC risk have suggested that the effect may be reversible. For instance, in a large U.S. cohort study, CRC risk among past smokers returned to the level of lifelong non-smokers after at least 31 years of cessation [69]. A more recent study accounting for the molecular heterogeneity of CRC observed that the benefit of smoking cessation was limited to CpG island methylator phenotype (CIMP)-high CRC, a distinct molecular subtype of CRC characterized by hypermethylation of selected cancer-related genes at CpG islands (regions, often in gene promoters, rich in CpG dinucleotides, i.e., a cytosine followed by a guanine and linked to it via a phosphate) [70, 71]. Thus, smoking may also act at a relatively late stage of carcinogenesis, through DNA methylation pathways leading to CIMP-high CRC.
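CpG islands are usually identified computationally from sequence composition. A widely used heuristic definition requires a length of at least 200 bp, GC content above 50 %, and an observed/expected CpG ratio above 0.6, where the expected count is (number of C × number of G) / length. The sketch below applies those thresholds as defaults. They are an assumption about common practice, not criteria stated in [70, 71], and the test sequences are toy examples.

```python
def cpg_stats(seq):
    """Return (GC fraction, observed/expected CpG ratio) for a DNA sequence."""
    seq = seq.upper()
    n = len(seq)
    c, g = seq.count("C"), seq.count("G")
    cpg = seq.count("CG")  # "CG" occurrences cannot overlap, so str.count is exact
    gc_fraction = (c + g) / n if n else 0.0
    # Expected CpG count if C and G were placed independently: c * g / n
    obs_exp = cpg * n / (c * g) if c and g else 0.0
    return gc_fraction, obs_exp

def looks_like_cpg_island(seq, min_length=200, min_gc=0.5, min_obs_exp=0.6):
    """Heuristic CpG-island test; thresholds are common defaults (an assumption)."""
    gc_fraction, obs_exp = cpg_stats(seq)
    return len(seq) >= min_length and gc_fraction > min_gc and obs_exp > min_obs_exp

print(looks_like_cpg_island("CG" * 100))     # CpG-dense toy sequence
print(looks_like_cpg_island("AT" * 100))     # no C or G at all
print(looks_like_cpg_island("CCAGG" * 40))   # GC-rich but contains no CpG
```

The last example shows why the observed/expected ratio matters: a sequence can be GC-rich without containing the CpG dinucleotides whose methylation defines CIMP.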

Heavy Alcohol Intake

Heavy alcohol intake is a fairly consistent risk factor for CRC. In a pooled analysis of eight large prospective studies that included 4687 CRC cases among nearly 500,000 participants, individuals who consumed ≥45 g/day of alcohol (roughly the ethanol content of 3 drinks/day in the U.S.) had a 42 % elevated risk of CRC relative to non-drinkers, irrespective of the type of alcoholic beverage [72]. In general, the association is stronger among men than among women, probably owing to the higher range of alcohol intake in men, although a true sex difference cannot be excluded. Consistent with the well-known biological interaction between alcohol and folate (alcohol antagonizes folate by impairing its intestinal absorption or by suppressing intracellular folate metabolism) [73], alcohol intake was particularly harmful when folate status was poor, especially among men [74].

The adverse effect of alcohol on CRC risk may be chiefly mediated by acetaldehyde, the first metabolite of ethanol, which is classified as a carcinogen [75, 76]. Absorbed alcohol is rapidly distributed throughout the body via the bloodstream, reaching colonocytes. Intestinal microflora with alcohol dehydrogenase (ADH) activity oxidize the supplied ethanol to acetaldehyde and are thus a major determinant of the acetaldehyde concentration in colonocytes [75–77]. Because colonocytes lack the ability to detoxify acetaldehyde, the accumulated acetaldehyde may promote colorectal carcinogenesis by causing DNA damage, promoting excessive growth of the colonic mucosa [75, 76], or antagonizing the protective effect of folate, in particular by directly degrading intracellular folate [74]. The central role of acetaldehyde in colorectal carcinogenesis is further supported by findings that the positive association between alcohol intake and CRC risk is more pronounced among carriers of genetic polymorphisms leading to acetaldehyde accumulation, e.g., ADH1C alleles encoding high-activity ADH enzymes (rapid conversion of ethanol to acetaldehyde) or ALDH2 alleles encoding low-activity aldehyde dehydrogenase (ALDH) enzymes (slow elimination of acetaldehyde) [75].
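The genetic findings above follow from a two-step pathway, ethanol → acetaldehyde → acetate: acetaldehyde builds up when it is formed quickly (high ADH activity) or cleared slowly (low ALDH activity). The toy model below illustrates this with first-order kinetics integrated by Euler steps. The rate constants are hypothetical and dimensionless, and real ADH/ALDH kinetics are saturable (Michaelis–Menten), so this is a qualitative sketch only.

```python
def peak_acetaldehyde(k_adh, k_aldh, ethanol0=1.0, dt=0.001, t_end=20.0):
    """Peak acetaldehyde in a toy first-order model (HYPOTHETICAL rate constants).

    d(ethanol)/dt      = -k_adh * ethanol
    d(acetaldehyde)/dt =  k_adh * ethanol - k_aldh * acetaldehyde
    """
    ethanol, acetaldehyde, peak = ethanol0, 0.0, 0.0
    for _ in range(int(t_end / dt)):
        formed = k_adh * ethanol * dt          # ethanol oxidized by ADH this step
        removed = k_aldh * acetaldehyde * dt   # acetaldehyde cleared by ALDH
        ethanol -= formed
        acetaldehyde += formed - removed
        peak = max(peak, acetaldehyde)
    return peak

baseline = peak_acetaldehyde(k_adh=0.5, k_aldh=2.0)
fast_adh = peak_acetaldehyde(k_adh=1.5, k_aldh=2.0)   # like high-activity ADH1C alleles
slow_aldh = peak_acetaldehyde(k_adh=0.5, k_aldh=0.5)  # like low-activity ALDH2 alleles
print(round(baseline, 3), round(fast_adh, 3), round(slow_aldh, 3))
```

Both genotype-like scenarios roughly double the peak acetaldehyde level relative to the baseline for the same ethanol dose, which is the pattern the genetic association studies exploit.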

21.3.2.3 Protective Factors

Physical Activity

Physical activity is a well-accepted protective factor, particularly against CC. Research on physical activity and CRC has been hindered to some extent by difficulties in measuring activity accurately and by the narrow range of physical activity in many study populations. These factors have likely contributed to heterogeneity in the magnitude and statistical significance of epidemiologic findings. Nevertheless, an inverse association with CRC has been consistently observed across sexes, study designs, countries, domains of physical activity (e.g., occupational, recreational, household), and stages of carcinogenesis (i.e., adenomas, cancer) [79, 80].

Physical activity has diverse dimensions, including intensity, duration, and frequency. Total metabolic equivalent of task (MET)-hours/week, a composite score incorporating all of these dimensions of weekly physical activity, is the most comprehensive measure of physical activity. In large cohort studies of men (the Health Professionals Follow-up Study) and women (the Nurses’ Health Study) that used validated questionnaires to assess leisure-time physical activity, higher MET-hours/week were associated with a substantially reduced risk of CC after accounting for BMI and other important risk factors [81, 82]. Because walking was the most common type of leisure-time physical activity in these cohorts, participants likely accumulated most of their MET-hours/week through walking. These findings therefore suggest that moderate-intensity activities such as brisk walking may be sufficient to reduce CC risk. Yet several lines of evidence indicate that more intense physical activity may provide additional benefit [83, 84].
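MET-hours/week combine intensity (the MET value of an activity), duration, and frequency by multiplication and then sum over activities. A minimal sketch; the MET values are approximate, assumed figures for illustration (coded values are tabulated in the Compendium of Physical Activities), and the weekly schedule is hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Activity:
    name: str
    met: float                # intensity in METs (ASSUMED illustrative value)
    hours_per_session: float  # duration
    sessions_per_week: float  # frequency

def met_hours_per_week(activities):
    """Sum of intensity x duration x frequency over all activities."""
    return sum(a.met * a.hours_per_session * a.sessions_per_week for a in activities)

# HYPOTHETICAL week: 30 min brisk walking 5x, 30 min jogging 2x
week = [
    Activity("brisk walking", met=3.5, hours_per_session=0.5, sessions_per_week=5),
    Activity("jogging", met=7.0, hours_per_session=0.5, sessions_per_week=2),
]
print(met_hours_per_week(week))
```

Because intensity enters multiplicatively, one hour of an activity rated at 7 METs contributes as much as two hours of an activity rated at 3.5 METs, which is how a composite score can reflect both walking volume and more vigorous exercise.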

The beneficial effect of physical activity on CC risk may be mediated through the insulin/IGF pathway as well as through other mechanisms. Physical activity lowers circulating insulin levels directly, by increasing insulin sensitivity [85], and indirectly, by decreasing abdominal fatness (particularly VAT). Evidence for a direct effect not mediated through adiposity comes from some, but not all, biomarker studies, which showed a significant inverse relationship, particularly among men, between physical activity and circulating C-peptide, insulin, or leptin concentrations after adjustment for measures of adiposity [86, 87]. With regard to the indirect effect, VAT, which is more lipolytic than SAT, is preferentially lost in response to physical activity [48, 88–90]. Controlled trials have demonstrated that moderate-intensity physical activity reduces VAT [88, 89], that vigorous activity induces greater VAT loss [91], and that a significant reduction in VAT occurs even in the absence of weight loss [88, 89]. Other proposed mechanisms whereby physical activity lowers CC risk include reducing chronic inflammation, improving immune function, and decreasing colonic exposure to carcinogens by increasing colonic motility [92, 93].

Fiber

A possible beneficial role of dietary fiber intake in the prevention of CRC was first hypothesized by Burkitt in the late 1960s upon observing a low incidence of CRC in southern Africa, where dietary fiber intake was high [94]. Several mechanistic explanations have been proposed to support the potential association. Insoluble fibers may reduce CRC risk by increasing stool bulk, shortening stool transit time, and decreasing exposure of the colorectal mucosa to potential carcinogens [95]. Soluble fibers, which can be fermented by the anaerobic intestinal microbiota into short-chain fatty acids (e.g., acetate, propionate, and butyrate), may protect against CRC by reducing colorectal pH or through butyrate, which inhibits proliferation and induces apoptosis of CRC cells [95, 96].

Observational studies overall suggest an inverse association; a recent dose–response meta-analysis of prospective studies estimated an approximately 10 % reduced risk of CRC for each 10 g/day increase in total fiber intake, particularly cereal fiber [97]. However, some cohort studies that adjusted for physical activity and dietary intake of folate and calcium did not observe an association [98–101], and most randomized trials of fiber supplements showed no appreciable protective association with adenoma risk [102]. Nevertheless, a potential protective role of fiber intake against CRC cannot be entirely dismissed at present, because its efficacy may be limited to specific types (insoluble, soluble) or food sources (fruits, vegetables, cereals) and may vary depending on intestinal microflora profiles [96, 102]. Additionally, in modern diets dominated by refined foods, the amount and quality of fiber are much lower than in traditional diets.

Micronutrients: Folate, Calcium, Vitamin D

Folate (Vitamin B9)

For decades, folate has been proposed to protect against CRC because of its critical roles in maintaining the integrity of DNA synthesis and regulating the DNA methylation that controls proto-oncogene expression. More recently, however, folate has also been suggested to facilitate the progression of preneoplastic lesions [103–106].

A long induction period appears to be required before a benefit of folate on CRC risk can be observed, as is evident from both molecular mechanistic and epidemiologic studies. For example, in colonocytes, total folate concentration decreases significantly from normal tissue without adenoma, to normal tissue adjacent to adenoma, to the adenoma itself [107]. The lower folate level in normal-appearing, non-neoplastic tissue corresponds to increased uracil misincorporation into DNA and DNA hypomethylation [107], suggesting the presence of a field defect surrounding adenomas. As progression from precursor lesions to diagnosed cancer generally takes at least 10 years [108], macroscopically undetectable abnormalities due to folate deficiency would occur at least a decade before CRC becomes apparent. In a pooled analysis of 13 prospective studies with follow-up periods ranging from 7 to 20 years, total folate intake of ≥560 mcg/day relative to <240 mcg/day was associated with a significant 13 % lower risk of CC [109]. In a cohort study that directly tested several potential induction periods between folate intake and CRC risk, a significant inverse association emerged only after a lag of at least 12–16 years [110]. In view of this finding, randomized placebo-controlled trials (RCTs) of folic acid supplementation with short follow-up are unlikely to observe a benefit on CRC. In contrast, for the outcome of initial adenoma occurrence, a benefit was observed after 3 years of intervention in a recent RCT in China [111].
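Testing an induction period amounts to relating each outcome to exposure measured a fixed number of years earlier. A minimal sketch of lagged exposure assignment, assuming repeated intake measurements keyed by calendar year; the helper name and the folate values are hypothetical, and the cohort analysis in [110] may have used a different approach (e.g., cumulative averages over several questionnaires).

```python
def lagged_intake(measurements, event_year, lag_years):
    """Most recent intake measured at least `lag_years` before `event_year`.

    measurements: dict mapping calendar year -> intake (e.g., folate, mcg/day).
    Returns None when no measurement is old enough for the requested lag.
    """
    eligible = [year for year in measurements if year <= event_year - lag_years]
    if not eligible:
        return None
    return measurements[max(eligible)]

folate = {1980: 250, 1986: 400, 1990: 600, 1994: 620}  # HYPOTHETICAL intakes

for lag in (0, 12, 16, 25):
    print(lag, lagged_intake(folate, event_year=2002, lag_years=lag))
```

Comparing risk estimates across several lags then shows when an association first appears; with short lags, recent intake is used, which by the reasoning above may be too late to influence a cancer that began developing a decade or more earlier.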

Regarding the potential adverse effect of excess folic acid on late-stage lesions in colorectal carcinogenesis, a recent meta-analysis of 13 RCTs provided evidence against this concern: folic acid supplementation at considerably high doses (500–40,000 mcg/day) did not substantially increase the risk of CRC during the first 5 years of intervention compared with placebo (relative risk (RR) 1.07, 95 % CI 0.83–1.37) [112]. In RCTs, most cancers diagnosed in the first 5 years are likely to originate from covert advanced adenomas or latent cancers. Thus, if folic acid supplementation promoted the progression of such existing neoplasms, a significantly increased risk of CRC would have been expected in the supplement arms, which was not observed. Even in a subgroup analysis restricted to three trials conducted among participants with a prior history of adenomas, and thus at high risk for CRC, no evidence of a positive association was apparent (RR 0.76, 95 % CI 0.32–1.80) [112].
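A reported RR and its 95 % CI can be checked by back-calculating the standard error on the log scale, SE = (ln U − ln L) / (2 × 1.96), and the Wald statistic z = ln(RR) / SE. A minimal sketch, assuming the intervals are symmetric on the log scale (as for standard Wald intervals); the meta-analysis may have used another method, so back-calculated values are approximate.

```python
import math

def z_from_rr_ci(rr, lower, upper, z_crit=1.959964):
    """Back-calculate (SE of ln RR, Wald z, two-sided p) from an RR and its 95 % CI."""
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(rr) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value from the normal distribution
    return se, z, p

for label, rr, lo, hi in [("all trials", 1.07, 0.83, 1.37),
                          ("prior-adenoma trials", 0.76, 0.32, 1.80)]:
    se, z, p = z_from_rr_ci(rr, lo, hi)
    print(f"{label}: SE={se:.3f}, z={z:.2f}, p={p:.2f}")
```

Both intervals include 1 and give |z| well below 1.96; the much wider subgroup interval also shows that three trials provide limited precision, so "no evidence of a positive association" is not the same as evidence of no effect.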

Calcium

There is strong mechanistic evidence that calcium may decrease the risk of colorectal neoplasia. Experimental studies in animals and humans suggest that calcium may bind secondary bile acids or ionized fatty acids in the colorectal lumen, diminishing their carcinogenic effects on the colorectal mucosa [113, 114]. Alternatively, evidence from in vivo and in vitro studies of human colonic epithelial cells suggests that calcium may reduce cell proliferation and promote cell differentiation by modulating cell signaling pathways [115, 116]. Calcium supplementation of 2000 mg/day has been shown to induce favorable changes in the expression of genes in the APC/β-catenin pathway in the normal-appearing mucosa of patients with colorectal adenomas [117]. Perturbation of this pathway is a common early event in colorectal carcinogenesis.

RCTs also provide strong evidence supporting the chemopreventive potential of calcium against adenomas. In a meta-analysis of RCTs, participants assigned to take 1200–2000 mg/day of calcium supplements without co-administered vitamin D for 3–4 years had an approximately 20 % reduced risk of adenoma recurrence compared with those assigned to placebo [118]. In the Calcium Polyp Prevention Study, the largest RCT (930 participants) included in the meta-analysis, supplementation with 1200 mg/day of calcium carbonate for 4 years was most protective against histologically advanced neoplasms, suggesting that its benefit may extend to cancer outcomes as well [119].

Contrary to expectation, a meta-analysis of eight RCTs on CRC outcomes showed no benefit of calcium supplementation over 4 years [120]. This discrepancy between adenoma and cancer outcomes may be explained by a long induction period between adequate calcium intake and CRC diagnosis. Given that preventing or removing an adenoma leads to the prevention of CRC, and that progression from adenoma to CRC spans at least 10 years, a longer follow-up would be required for the observed benefit of calcium against adenomas [118] to extend to cancer outcomes. Consistent with this hypothesis, a pooled analysis of 10 cohort studies with follow-up periods ranging from 6 to 16 years showed a significant inverse association between total calcium intake and CRC [121].

While the potential of calcium as a chemopreventive agent against CRC appears promising, the optimal dose remains to be identified. More specifically, a pooled analysis indicated no additional benefit at a total calcium intake beyond 1000 mg/day [121], whereas a recent dose–response meta-analysis of prospective observational studies suggested that CRC risk may continue to decrease beyond 1000 mg/day [122].

Vitamin D Status

Observational studies provide consistent evidence of an etiologic role for poor vitamin D status in CRC development and, particularly, in CRC progression [123]. The potential anti-carcinogenic effect of vitamin D was first proposed by Garland and Garland in 1980, upon noticing that CC mortality rates in the U.S. were generally higher in northern regions, which receive less solar ultraviolet B (UV-B) radiation, required for vitamin D synthesis in the skin [124]. The hypothesis is further corroborated by molecular studies showing that vitamin D, beyond its conventional role in calcium and phosphorus homeostasis, influences cellular signaling pathways, inhibiting proliferation and angiogenesis and activating apoptosis [125].

In epidemiologic studies, the hypothesis has been investigated using a variety of surrogate measures of vitamin D status, such as UV-B radiation exposure, dietary and supplemental vitamin D intake, and predicted or measured levels of circulating 25-hydroxyvitamin D (25(OH)D), which is considered the best biochemical indicator of vitamin D status. Regardless of the measure used, results have been generally consistent across studies [123, 126]. For example, in the largest available study of circulating 25(OH)D and CRC endpoints, the previously mentioned EPIC study, prediagnostic 25(OH)D concentrations in the highest quintile, compared with the lowest quintile, were associated with an approximately 40 % reduced risk of developing CRC [127] and a 31 % improved survival among patients with CRC [128].

The optimal 25(OH)D levels for reducing CRC incidence and mortality are not yet established, but available studies suggest a range of 30–40 ng/mL [123]. This range is consistent with the Endocrine Society clinical practice guideline on vitamin D deficiency, which recommends a minimum level of 30 ng/mL and a level of 40–60 ng/mL to ensure sufficiency [129].
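Because 25(OH)D is reported in ng/mL in some studies and in nmol/L in others (common in European cohorts such as EPIC), comparing thresholds requires a unit conversion. The factor 1 ng/mL ≈ 2.496 nmol/L follows from the molar mass of 25(OH)D₃ (about 400.6 g/mol). A minimal sketch; the classification labels are hypothetical helpers built only from the cut points quoted in the text.

```python
# 25(OH)D unit conversion: 1 ng/mL is approximately 2.496 nmol/L
NMOL_L_PER_NG_ML = 2.496

def ng_ml_to_nmol_l(value_ng_ml):
    return value_ng_ml * NMOL_L_PER_NG_ML

def nmol_l_to_ng_ml(value_nmol_l):
    return value_nmol_l / NMOL_L_PER_NG_ML

def describe_25ohd(value_ng_ml):
    """Place a 25(OH)D level relative to the cut points quoted in the text."""
    if value_ng_ml < 30:
        return "below the 30 ng/mL minimum"
    if value_ng_ml < 40:
        return "30-40 ng/mL (range suggested for CRC outcomes)"
    if value_ng_ml <= 60:
        return "40-60 ng/mL (range recommended to ensure sufficiency)"
    return "above 60 ng/mL"

for level in (20, 30, 40, 60):
    print(level, "ng/mL =", round(ng_ml_to_nmol_l(level), 1), "nmol/L:", describe_25ohd(level))
```

For example, the 30 ng/mL minimum corresponds to roughly 75 nmol/L, the form in which the same threshold often appears in studies reporting SI units.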
