Methods of Molecular Analysis

2.1 INTRODUCTION

Advances made over the last 10 years in understanding the biology of cancer have transformed the field of oncology. While genetic analysis was limited previously to gross chromosomal abnormalities in karyotypes, DNA in cells can now be analyzed to the individual base pair level. This intricate knowledge of the genetics of cancer increases the possibility that personalized treatment for individual cancers lies in the near future. To appreciate the relevance and nature of these technological advances, as well as their implications for function, an understanding of the modern tools of molecular biology is essential. This chapter reviews the cytogenetic, nucleic, proteomic, and bioinformatics methods used to study the molecular basis of cancer, and highlights methods that are likely to affect in the future management of cancer.

2.2 PRINCIPAL TECHNIQUES FOR NUCLEIC ACID ANALYSIS

2.2.1 Cytogenetics and Karyotyping

Cancer arises as a result of the stepwise accumulation of genetic changes that confer a selective growth advantage to the involved cells (see Chap. 5, Sec. 5.2). These changes may consist of abnormalities in specific genes (such as amplification of oncogenes or deletion of tumor-suppressor genes). Although molecular techniques can identify specific DNA mutations, cytogenetics provides an overall description of chromosome number, structure, and the extent and nature of chromosomal abnormalities.

Several techniques can be used to obtain tumor cells for cytogenetic analysis. Leukemias and lymphomas from peripheral blood, bone marrow, or lymph node biopsies are easily dispersed into single cells suitable for chromosomal analysis. In contrast, cytogenetic analysis of solid tumors has several difficulties; the cells are tightly bound together and must be dispersed by mechanical means and/or by digestion with proteolytic enzymes (eg, collagenase) which can damage cells. Secondly, the mitotic index in solid tumors is often low (see Chap. 9, Sec. 9.2), making it difficult to find enough metaphase cells to obtain good-quality cytogenetic preparations. Finally, lymphoid and myeloid and other (normal) cells often infiltrate solid tumors and may be confused with the malignant cell population.

Chromosomes are usually examined in metaphase, when they become condensed and appear as 2 identical sister chromatids held together at the centromere as DNA replication has already occurred at that stage of mitosis. Exposure of the tumor cells to agents such as colcemid arrests them in metaphase by disrupting the mitotic spindle fibers that normally separate the chromatids. The cells are then swollen in a hypotonic solution, fixed in methanol-acetic acid, and metaphase “spreads” are prepared by physically dropping the fixed cells onto glass microscope slides.

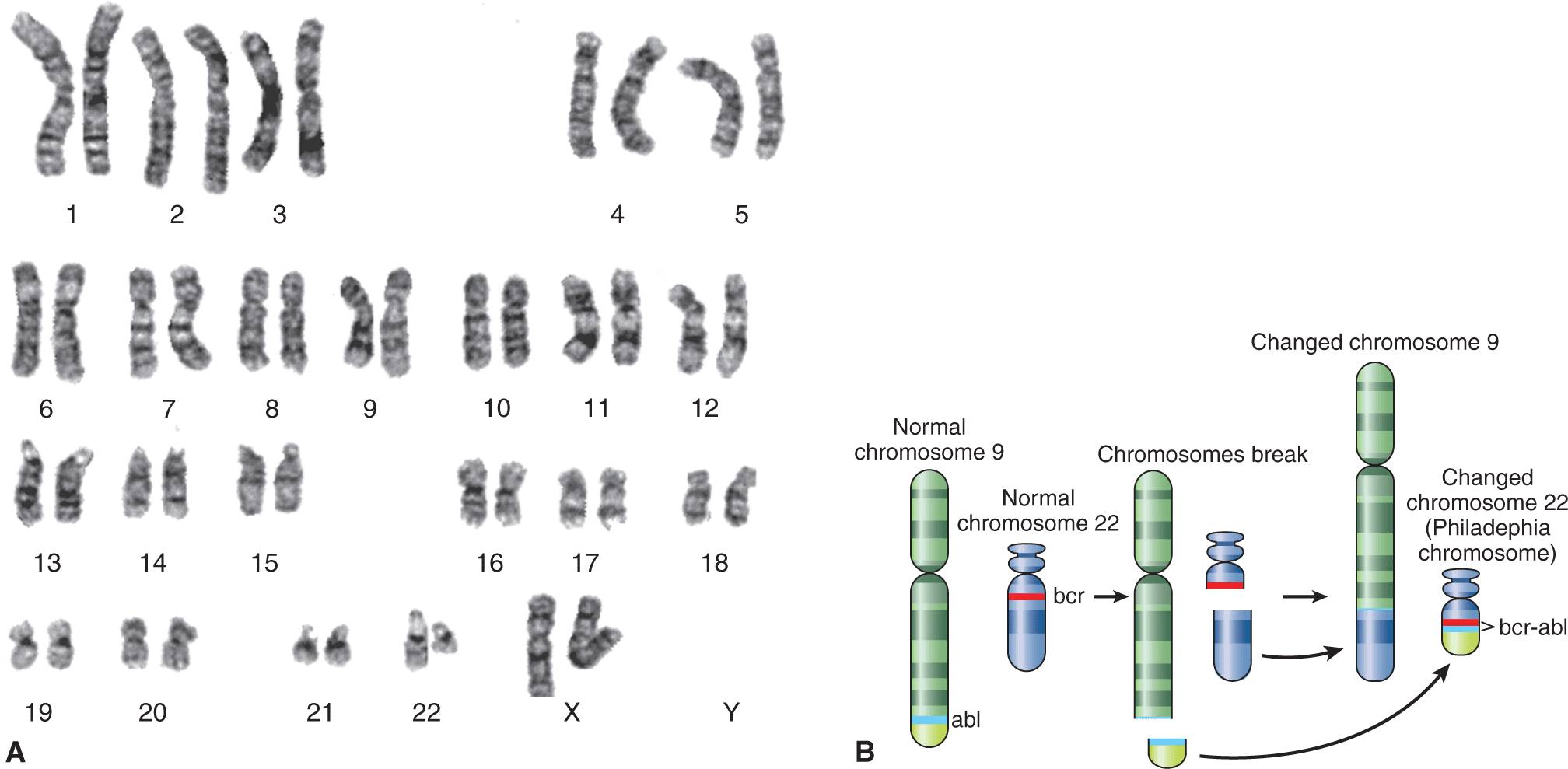

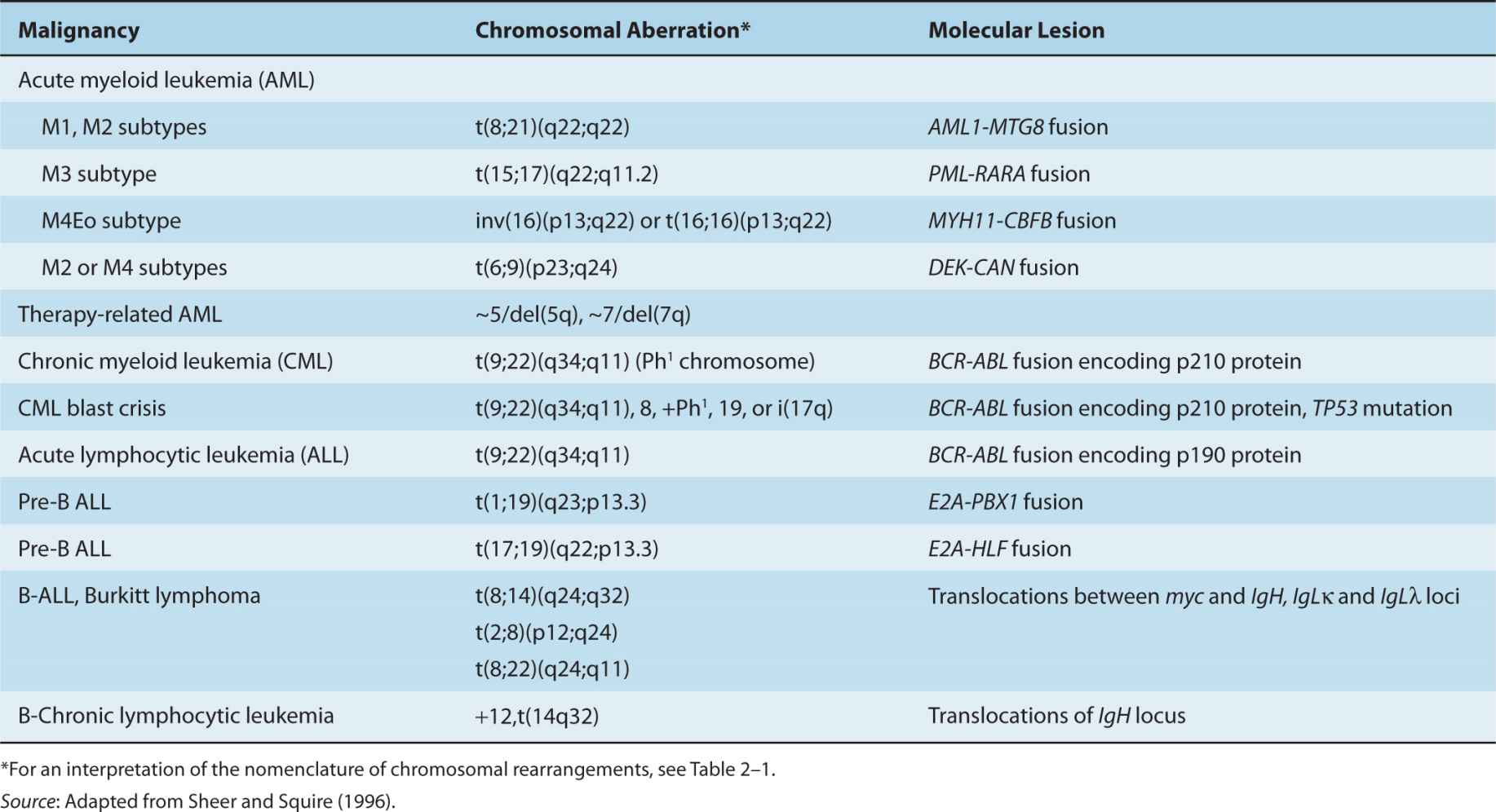

Chromosomes can be recognized by their size and shape and by the pattern of light and dark “bands” observed after specific staining. The most popular way of generating banded chromosomes is proteolytic digestion with trypsin, followed by a Giemsa stain. A typical metaphase spread prepared using conventional methods has approximately 550 bands, whereas cells spread at prophase can have more than 800 bands; these bands can be analyzed using bright-field microscopy and digital photography. The result of cytogenetic analysis is a karyotype, which, in written form, describes the chromosomal abnormalities using the international consensus cyto-genetic nomenclature (Brothman et al, 2009; see Fig. 2–1 and Table 2–1). Table 2–2 lists common chromosomal abnormalities in lymphoid and myeloid malignancies.

FIGURE 2–1 The photograph on the left (A) shows a typical karyotype from a patient with chronic myelogenous leukemia. By international agreement, the chromosomes are numbered according to their appearance following G-banding. Note the loss of material from the long arm of one copy of the chromosome 22 pair (the chromosome on the right) and its addition to the long arm of 1 copy of chromosome 9 (also the chromosome on the right of the pair). B) A schematic illustration of the accepted band pattern for this rearrangement. The green and red lines indicate the precise position of the break points that are involved. The karyotypic nomenclature for this particular chromosomal abnormality is t(9;22)(q34;q11). This description means that there is a reciprocal translocation between chromosomes 9 and 22 with break points at q34 on chromosome 9 and q11 on chromosome 22. The rearranged chromosome 22 is sometimes called the Philadelphia chromosome (or Ph chromosome), after the city of its discovery.

TABLE 2–1 Nomenclature for chromosomes and their abnormalities.

TABLE 2–2 Common chromosomal abnormalities in lymphoid and myeloid malignancies.

The study of solid tumors has been facilitated by new analytic approaches that combine elements of conventional cytogenetics with molecular methodologies. This new hybrid discipline is called molecular cytogenetics, and its application to tumor analysis usually involves the use of techniques based on fluorescence in situ hybridization or FISH (see Sec. 2.2.6).

2.2.2 Hybridization and Nucleic Acid Probes

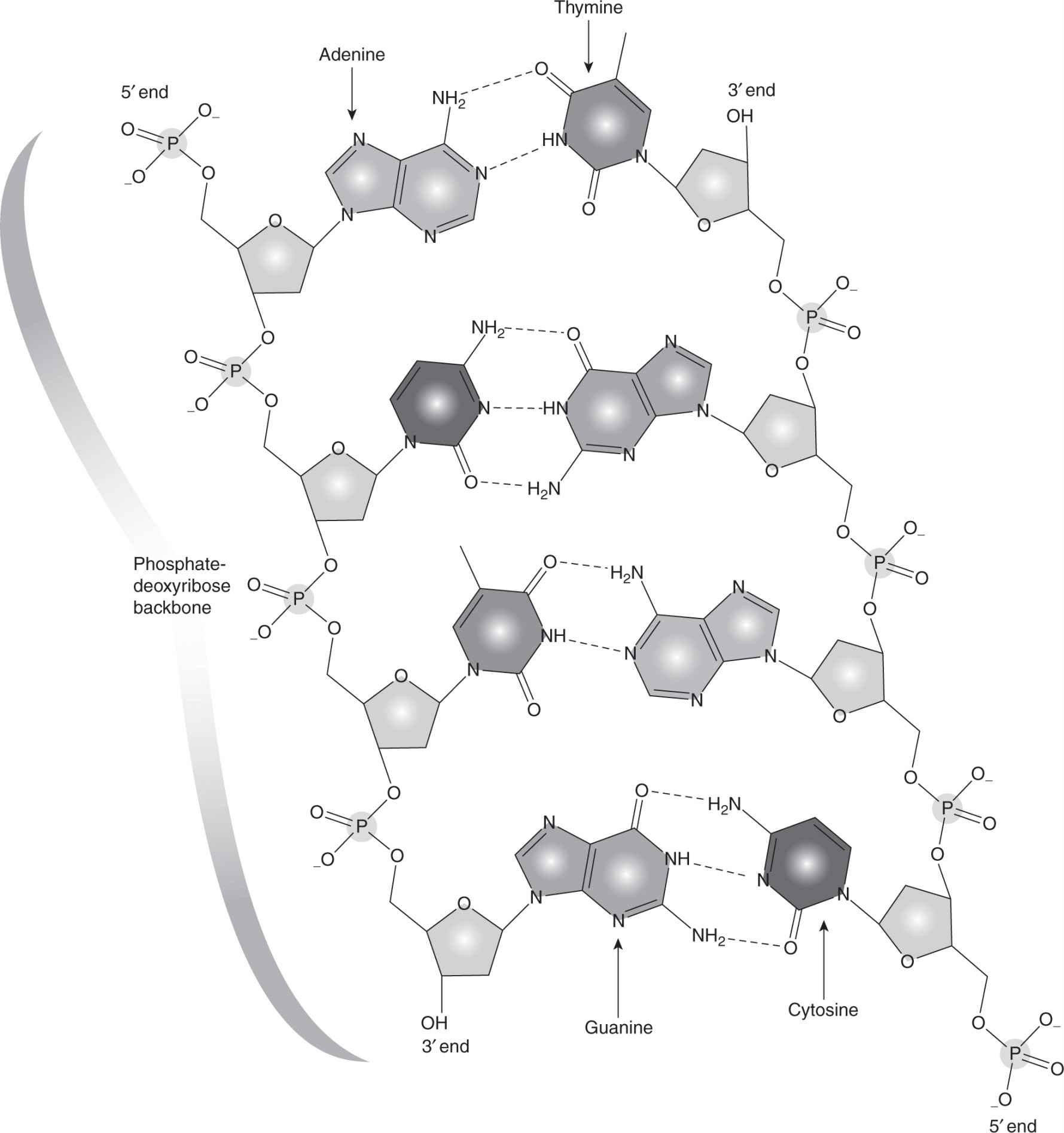

DNA is composed of 2 complementary strands (the sense strand and the non-sense strand) of specific sequences of 4 nucleotide bases that make up the genetic alphabet. The association (via hydrogen bonds) between 2 bases on opposite complementary DNA or certain types of RNA strands that are connected via hydrogen bonds is called a base pair (often abbreviated bp). In the canonical Watson-Crick DNA base pair, adenine (A) forms a base pair with thymine (T) and guanine (G) forms a base pair with cytosine (C). In RNA, thymine is replaced by uracil (U). There are 2 processes that rely on this base pairing (Fig. 2–2). As DNA replicates during the S phase of the cell cycle, a part of the helical DNA molecule unwinds and the strands separate under the action of topoisomerase II (see Chap. 18, Fig. 18–13). DNA polymerase enzymes add nucleotides to the 3′-hydroxyl (3′-OH) end of an oligonucleotide that is hybridized to a template, thus leading to synthesis of a complementary new strand of DNA. Transcription of messenger RNA (mRNA) takes place through an analogous process under the action of RNA polymerase with one of the DNA strands (the non-sense strand) acting as a template; complementary bases (U, G, C, and A) are added to the mRNA through pairing with bases in the DNA strand so that the sequence of bases in the RNA is the same as in the “sense” strand of the DNA (except that U replaces T). During this process the DNA strand is separated temporarily from its partner through the action of topoisomerase I (see Chap. 18, Sec. 18.4). Only parts of the DNA in each gene are translated into polypeptides, and these coding regions are known as exons; non-coding regions (introns) are interspersed throughout the genes and are spliced out of the mRNA transcript during the RNA maturation process and before protein synthesis. Synthesis of polypeptides, the building blocks of proteins, are then directed by the mRNA in association with ribosomes, with each triplet of bases in the exons of the DNA encoding a specific amino acid that is added to the polypeptide chain.

FIGURE 2–2 The DNA duplex molecule, also called the double helix, consists of 2 strands that wind around each other. The strands are held together by chemical attraction of the bases that comprise the DNA. A bonds to T and G bonds to C. The bases are linked together to form long strands by a “backbone” chemical structure. The DNA bases and backbone twist around to form a duplex spiral.

To develop an understanding of the techniques now used in both clinical cancer care and research, it is necessary to understand the specificity of hybridization and the action and fidelity of DNA polymerases. When double-stranded DNA is heated, the complementary strands separate (denature) to form single-stranded DNA. Given suitable conditions, separated complementary regions of specific DNA sequences can join together to reform a double-stranded molecule. This renaturation process is called hybridization. This ability of single-stranded nucleic acids to hybridize with their complementary sequence is fundamental to the majority of techniques used in molecular genetic analysis. Using an appropriate reaction mixture containing the relevant nucleotides and DNA or RNA polymerase, a specific piece of DNA can be copied or transcribed. If radiolabeled or fluorescently labeled nucleotides are included in a reaction mixture, the complementary copy of the template can be used as a highly sensitive hybridization-dependent probe.

2.2.3 Restriction Enzymes and Manipulation of Genes

Restriction enzymes are endonucleases that have the ability to cut DNA only at sites of specific nucleotide sequences and always cut the DNA at exactly the same place within the designated sequence. Figure 2–3 illustrates some commonly used restriction enzymes together with the sequence of nucleotides that they recognize and the position at which they cut the sequence. Restriction enzymes are important because they allow DNA to be cut into reproducible segments that can be analyzed precisely. An important feature of many restriction enzymes is that they create sticky ends. These ends occur because the DNA is cut in a different place on the 2 strands. When the DNA molecule separates, the cut end has a small single-stranded portion that can hybridize to other fragments having compatible sequences (ie, fragments digested using the same restriction enzyme) thus allowing investigators to cut and paste pieces of DNA together.

FIGURE 2–3 The nucleotide sequences recognized by 5 different restriction endonucleases are shown. On the left side, the sequence recognized by the enzyme is shown; the sites where the enzymes cut the DNA are shown by the arrows. On the right side, the 2 fragments produced following digestion with that restriction enzyme are shown. Note that each recognition sequence is a palindrome; ie, the first 2 or 3 bases are complementary to the last 2 or 3 bases. For example, for Eco R1, GAA is complementary to TTC. Also note that following digestion, each fragment has a singlestranded tail of DNA. This tail is useful in allowing fragments that contain complementary overhangs to anneal with each other.

Once a gene has been identified, the DNA segment of interest can be inserted into a bacterial virus or plasmid to facilitate its manipulation and propagation using restriction enzymes. A complementary DNA strand (cDNA) is first synthesized using mRNA as the template by a reverse transcriptase enzyme. This cDNA contains only the exons of the gene from which the mRNA was transcribed. Figure 2–4 presents a schematic of how a restriction fragment of DNA containing the coding sequence of a gene can be inserted into a bacterial plasmid conferring resistance against the drug ampicillin to the host bacterium. The plasmid or virus is referred to as a vector carrying the passenger DNA sequence of the gene of interest. The vector DNA is cut with the same restriction enzyme used to prepare the cloned gene, so that all the fragments will have compatible sticky ends and can be spliced back together. The spliced fragments can be sealed with the enzyme DNA ligase, and the reconstituted molecule can be introduced into bacterial cells. Because bacteria that take up the plasmid are resistant to the drug (eg, ampicillin), they can be isolated and propagated to large numbers. In this way, large quantities of a gene can be obtained (ie, cloned) and labeled with either radioactivity or biotin for use as a DNA probe for analysis in Southern or northern blots (see Sec. 2.2.4). Cloned DNA can be used directly for nucleotide sequencing (see Sec. 2.2.10), or for transfer into other cells. Alternatively, the starting DNA may be a complex mixture of different restriction fragments derived from human cells. Such a mixture could contain enough DNA so that the entire human genome is represented in the passenger DNA inserted into the vectors. When a large number of different DNA fragments have been inserted into a vector population and then introduced into bacteria, the result is a DNA library, which can be plated out and screened by hybridization with a specific probe. In this way an individual recombinant DNA clone can be isolated from the library and used for most of the other applications described in the following sections.

FIGURE 2–4 Insertion of a gene into a bacterial plasmid. The cDNA of interest (pink line) is digested with a restriction endonuclease (depicted by scissors) to generate a defined fragment of cDNA with “sticky ends.” The circular plasmid DNA is cut with the same restriction endonuclease to generate single-stranded ends that will hybridize and to the cDNA fragment. The recombinant DNA plasmid can be selected for growth using antibiotics because the ampicillinresistance gene (hatched) is included in the construct. In this way, large amounts of the human cDNA can be obtained for further purposes (eg, for use as a probe on a Southern blot).

2.2.4 Blotting Techniques

Southern blotting is a method for analyzing the structure of DNA (named after the scientist who developed it). Figure 2–5 outlines schematically the Southern blot technique. The DNA to be analyzed is cut into defined lengths using a restriction enzyme, and the fragments are separated by electrophoresis through an agarose gel. Under these conditions the DNA fragments are separated based on size, with the smallest fragments migrating farthest in the gel and the largest remaining near the origin. Pieces of DNA of known size are electrophoresed at the same time (in a separately loaded well) and act as a molecular mass marker. A piece of nylon membrane is then laid on top of the gel and a vacuum is applied to draw the DNA through the gel into the membrane, where it is immobilized. A common application of the Southern technique is to determine the size of the fragment of DNA that carries a particular gene. The nylon membrane containing all the fragments of DNA cut with a restriction enzyme is incubated in a solution containing a radioactive or fluorescently-labeled probe which is complementary to part of the gene (see Sec. 2.2.2). Under these conditions, the probe will anneal with homologous DNA sequences present on the DNA in the membrane. After gentle washing to remove the single-stranded, unbound probe, the only labeled probe remaining on the membrane will be bound to homologous sequences of the gene of interest. The location of the gene on the nylon membrane can then be detected either by the fluorescence or radioactivity associated with the probe. An almost identical procedure can be used to characterize mRNA separated by electrophoresis and transferred to a nylon membrane. The technique is called northern blotting and is used to evaluate the expression patterns of genes. An analogous procedure, called western blotting, is used to characterize proteins. Following separation by denaturing gel electrophoresis, the proteins are immobilized by transfer to a charged synthetic membrane. To identify specific proteins, the membrane is incubated in a solution containing a specific primary antibody either directly labeled with a fluorophore, or incubated with a secondary antibody that will bind to the primary antibody and is conjugated to horseradish peroxidase (HRP) or biotin. The primary antibody will bind only to the region of the membrane containing the protein of interest and can be detected either directly by its fluorescence or by exposure to chemoluminescence detection reagents.

FIGURE 2–5 Analysis of DNA by Southern blotting. Schematic outline of the procedures involved in analyzing DNA fragments by the Southern blotting technique. The method is described in more detail in the text.

2.2.5 The Polymerase Chain Reaction

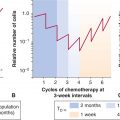

The polymerase chain reaction (PCR) allows rapid production of large quantities of specific pieces of DNA (usually about 200 to 1000 base pairs) using a DNA polymerase enzyme called Taq polymerase (which is isolated from a thermophilic bacterial species and is thus resistant to denaturation at high temperatures). Specific oligonucleotide primers complementary to the DNA at each end of (flanking) the region of interest are synthesized or obtained commercially, and are used as primers for Taq polymerase. All components of the reaction (the target DNA, primers, deoxynucleotides, and Taq polymerase) are placed in a small tube and the reaction sequence is accomplished by simply changing the temperature of the reaction mixture in a cyclical manner (Fig. 2–6A). A typical PCR reaction would involve: (a) Incubation at 94°C to denature (separate) the DNA duplex and create single-stranded DNA. (b) Incubation at 53°C to allow hybridization of the primers, which are in vast excess (this temperature may vary depending on the sequence of the primers). (c) Incubation at 72°C to allow Taq polymerase to synthesize new DNA from the primers. Repeating this cycle permits another round of amplification (Fig. 2–6B). Each cycle takes only a few minutes. Twenty cycles can theoretically produce a million-fold amplification of the DNA of interest. PCR products can then be sequenced or subjected to other methods of genetic analysis. Polymerase proteins with greater heat stability and copying fidelity can allow for longrange amplification using primers separated by as much as 15 to 30 kilobases of intervening target DNA (Ausubel and Waggoner, 2003). The PCR is exquisitely sensitive and its applications include the detection of minimal residual disease in hematopoietic malignancies and of circulating cancer cells from solid tumors.

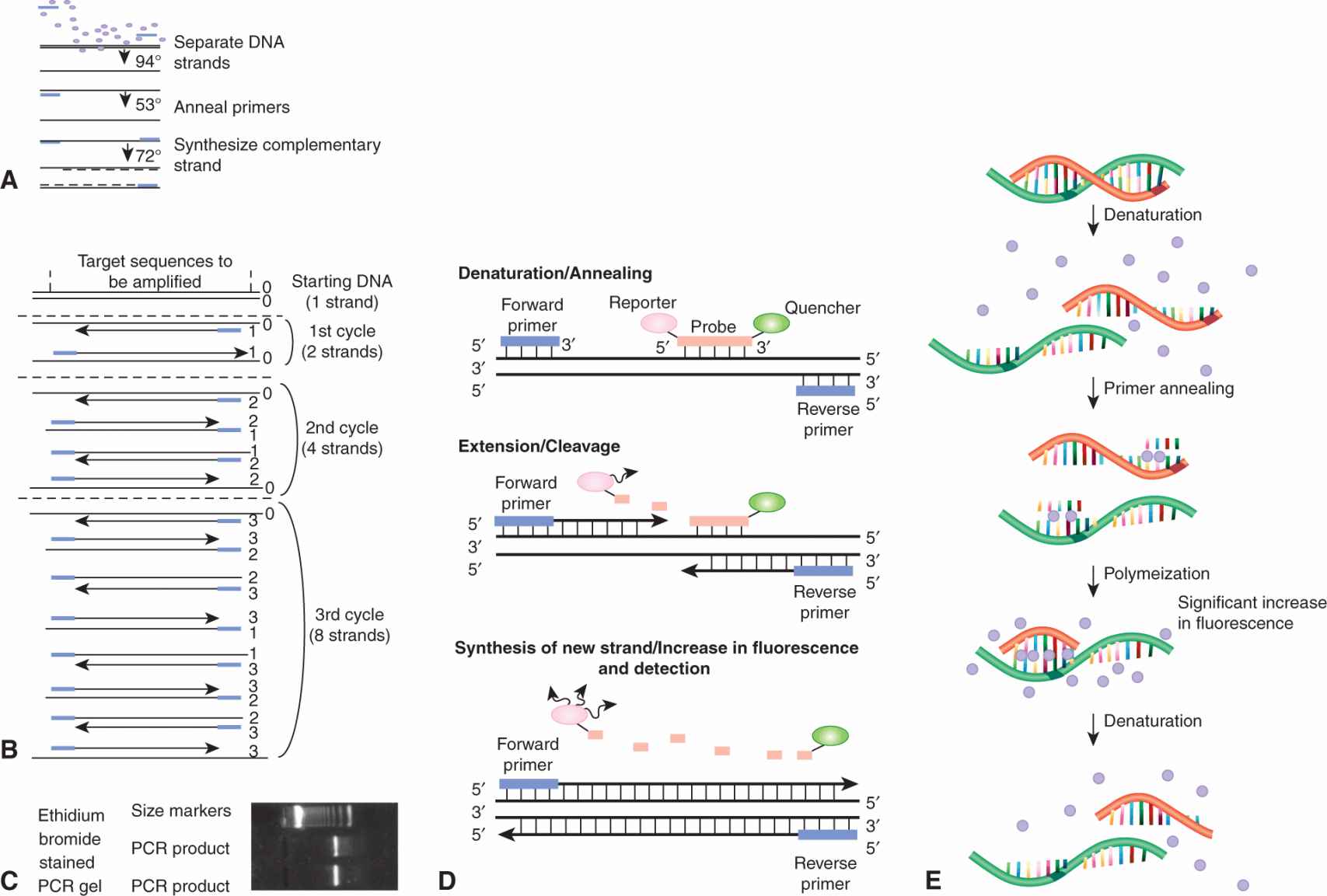

FIGURE 2–6 Reaction sequence for 1 cycle of PCR. Each line represents 1 strand of DNA; the small rectangles are primers and the circles are nucleotides. B) The first 3 cycles of PCR are shown schematically. C) Ethidium bromide-stained gel after 20 cycles of PCR. See text for further explanation. D) Real-time PCR using SYBR Green dye. SYBR Green dye binds preferentially to double-stranded DNA; therefore, an increase in the concentration of a double-stranded DNA product leads to an increase in fluorescence. During the polymerization step, several molecules of the dye bind to the newly synthesized DNA and a significant increase in fluorescence is detected and can be monitored in real time. E) Realtime PCR using fluorescent dyes and molecular beacons. During denaturation, both probe and primers are in solution and remain unbound from the DNA strand. During annealing, the probe specifically hybridizes to the target DNA between the primers (top panel) and the 5′-to-3′ exonuclease activity of the DNA polymerase cleaves the probe, thus dissociating the quencher molecule from the reporter molecule, which results in fluorescence of the reporters.

PCR is widely used to study gene expression or screen for mutations in RNA. Reverse transcriptase is used to make a single-strand cDNA copy of an mRNA and the cDNA is used as a template for a PCR reaction as described above. This technique allows amplification of cDNA corresponding to both abundant and rare RNA transcripts. The development of realtime quantitative PCR has allowed improved quantitation of the DNA (or cDNA) template and has proven to be a sensitive method to detect low levels of mRNA (often obtained from small samples or microdissected tissues) and to quantify gene expression. Different chemistries are available for real time detection (Fig. 2–6C, D). There is a very specific 5′ nuclease assay, which uses a fluorogenic probe for the detection of reaction products after amplification, and there is a less specific but much less expensive assay, which uses a fluorescent dye (SYBR Green I) for the detection of double-stranded DNA products. In both methods, the fluorescence emission from each sample is collected by a charge-coupled device camera and the data are automatically processed and analyzed by computer software. Quantitative real-time PCR using fluorogenic probes can analyze multiple genes simultaneously within the same reaction. The SYBR Green methodology involves individual analysis of each gene of interest but, using multiwell plates, both approaches provide high-throughput sample analysis with no need for post-PCR processing or gels.

2.2.6 Fluorescence in Situ Hybridization

To perform fluorescence in situ hybridization (FISH), DNA probes specific for a gene or particular chromosome region are labeled (usually by incorporation of biotin, digoxigenin, or directly with a fluorochrome) and then hybridized to (denatured) metaphase chromosomes. The DNA probe will reanneal to the denatured DNA at its precise location on the chromosome. After washing away the unbound probe, the hybridized sequences are detected using avidin directly (which binds strongly to biotin), or antibodies to digoxigenin that are coupled to fluorescent secondary antibodies, such as fluorescein isothiocyanate. The sites of hybridization are then detected using fluorescent microscopy. The main advantage of FISH for gene analyses is that information is obtained directly about the positions of the probes in relation to chromosome bands or to other previously or simultaneously mapped reference probes.

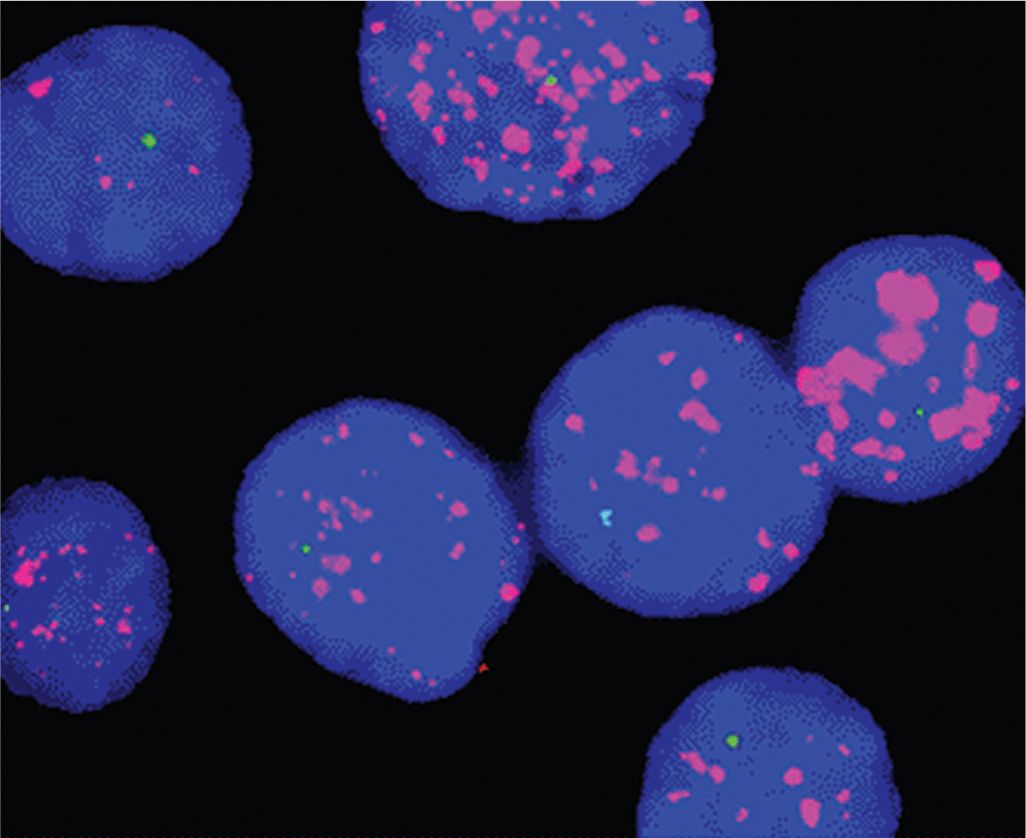

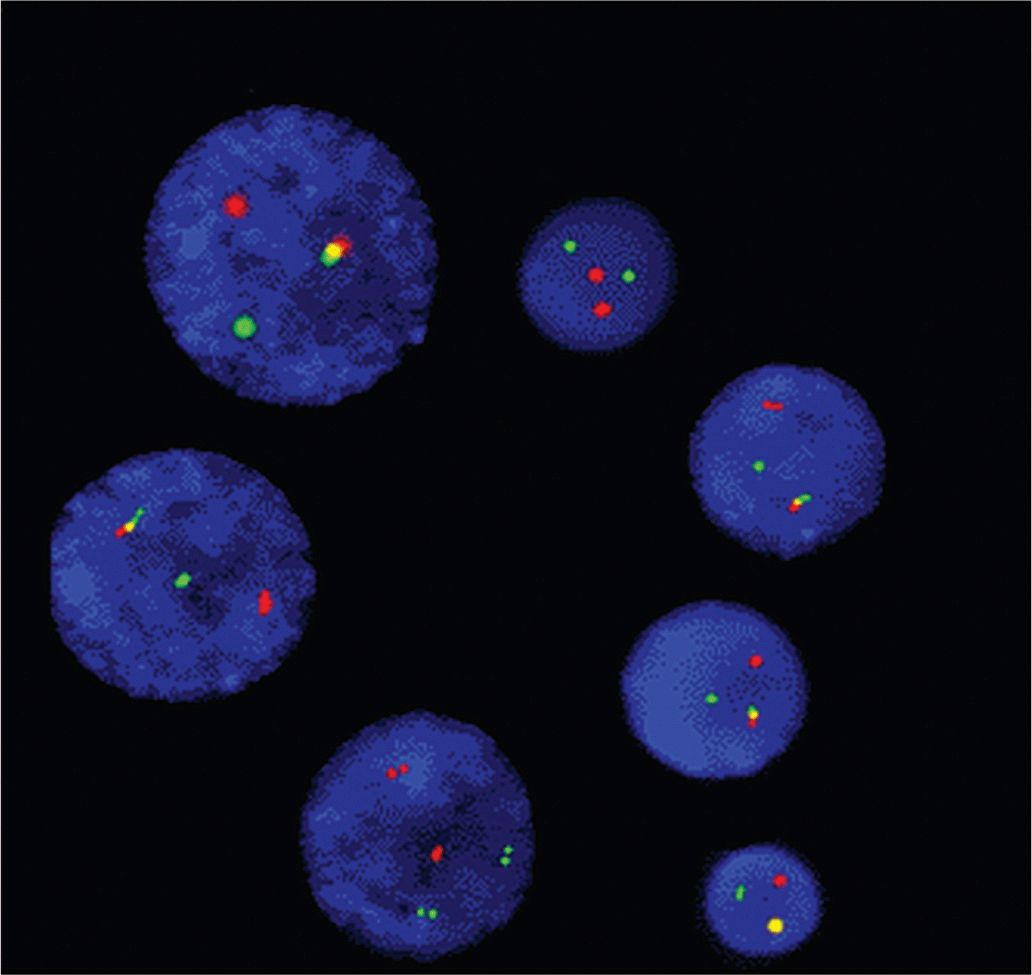

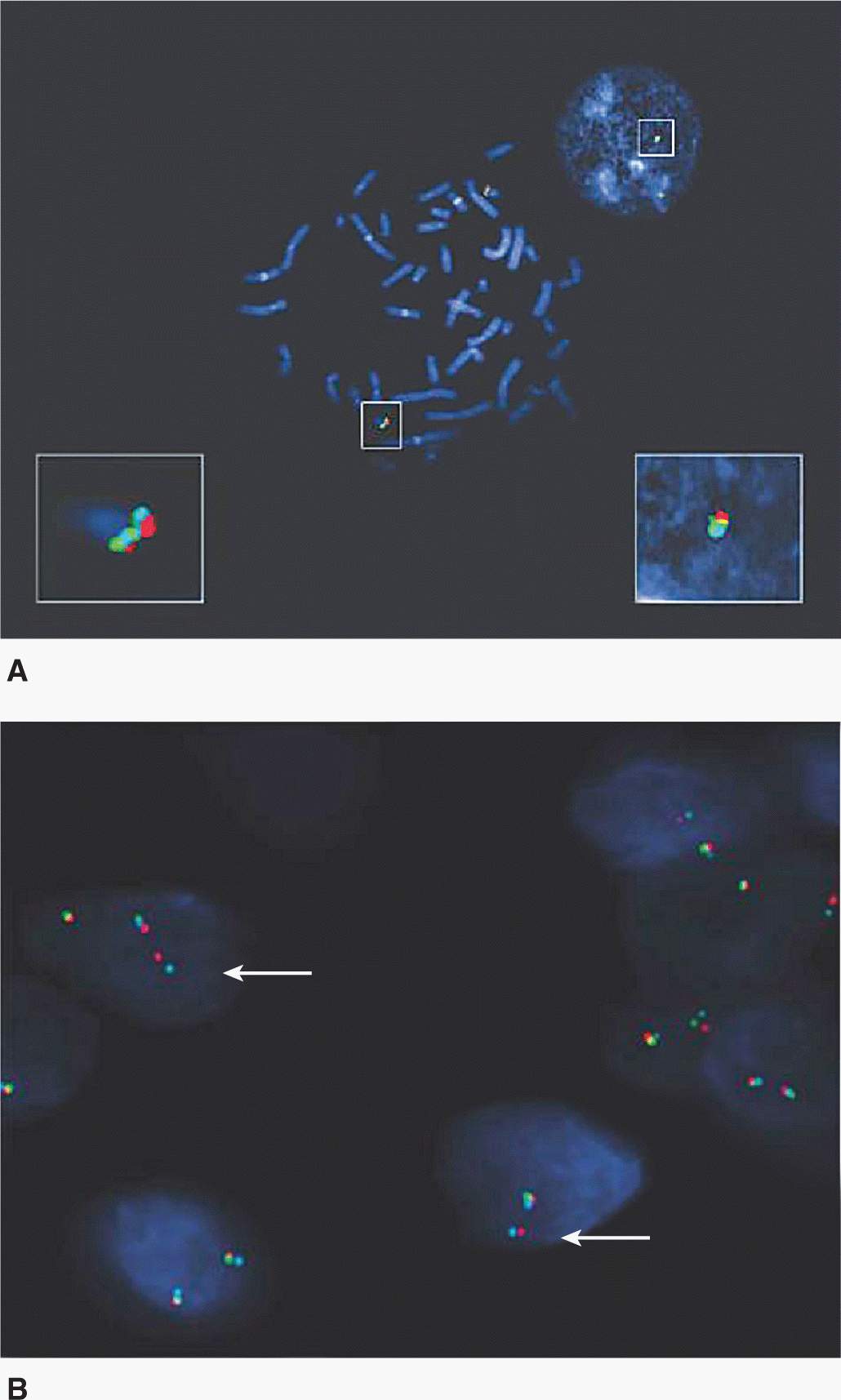

FISH can be performed on interphase nuclei from paraffin-embedded tumor biopsies or cultured tumor cells, which allows cytogenetic aberrations such as amplifications, deletions or other abnormalities of whole chromosomes to be visualized without the need for obtaining good-quality metaphase preparations. For example, FISH is a standard technique to determine the HER2 status of breast cancers and can be used to detect N-myc amplification in neuroblastoma (Fig. 2–7). Whole chromosome abnormalities can also be detected using specific centromere probes that lead to 2 signals from normal nuclei, 1 signal when there is only 1 copy of the chromosome (monosomy), or 3 signals when there is an extra copy (trisomy). Chromosome or gene deletions can also be detected with probes from the relevant regions. For example, if the probes used for FISH are close to specific translocation break points on different chromosomes, they will appear joined as a result of the translocation generating a “color fusion” signal or conversely, alternative probes can be designed to “break apart” in the event of a specific gene deletion or translocation. This technique is particularly useful for the detection of the bcr-abl rearrangement in chronic myeloid leukemia (Fig. 2–8) and the tmprss2-erg abnormalities in prostate cancer (Fig. 2–9).

FIGURE 2–7 MYCN amplification in nuclei from neuroblastoma detected by FISH with a MYCN probe (magenta speckling) and a deletion of the short arm of chromosome 1. The signal (pale bluegreen) from the remaining chromosome 1 is seen as a single spot in each nucleus.

FIGURE 2–8 Detection of the Philadelphia chromosome in interphase nuclei of leukemia cells. All nuclei contain 1 green signal (BCR gene), 1 pink signal (ABL gene), and an intermediate fusion yellow signal because of the 9:22 chromosome translocation.

FIGURE 2–9 FISH analysis showing rearrangement of TMPRSS2 and ERG genes in PCa. A) FISH confirms the colocalization of Oregon Green-labeled 5 V ERG (green signals), AlexaFluor 594-labeled 3 V ERG (red signals), and Pacific Blue-labeled TMPRSS2 (light blue signals) in normal peripheral lymphocyte metaphase cells and in normal interphase cells. B) In PCa cells, break-apart FISH results in a split of the colocalized 5 V green/3 V red signals, in addition to a fused signal (comprising green, red, and blue signals) of the unaffected chromosome 21. Using the TMPRSS2/ERG set of probes on PCa frozen sections, TMPRSS2 (blue signal) remains juxtaposed to ERG 3 V (red signal; see white arrows), whereas colocalized 5 V ERG signal (green) is lost, indicating the presence of TMPRSS2/ERG fusion and concomitant deletion of 5 V ERG region. (Reproduced with permission from Yoshimoto et al, 2006.)

2.2.7 Comparative Genomic Hybridization

If the cytogenetic abnormalities are unknown, it is not possible to select a suitable probe to clarify the abnormalities by FISH. Comparative genomic hybridization (CGH) has been developed to produce a detailed map of the differences between chromosomes in different cells by detecting increases (amplifications) or decreases (deletions) of segments of DNA.

For analysis of tumors by CGH, the DNA from malignant and normal cells is labeled with 2 different fluorochromes and then hybridized simultaneously to normal chromosome metaphase spreads. For example, tumor DNA is labeled with biotin and detected with fluorescein (green fluorescence) while the control DNA is labeled with digoxigenin and detected with rhodamine (red fluorescence). Regions of gain or loss of DNA, such as deletions, duplications, or amplifications, are seen as changes in the ratio of the intensities of the 2 fluorochromes along the target chromosomes. One disadvantage of CGH is that it can detect only large blocks (>5 Mb) of over- or underrepresented chromosomal DNA and balanced rearrangements (such as inversions or translocations) can escape detection. Improvements to the original CGH technique have used microarrays where CGH is applied to arrayed sequences of DNA bound to glass slides. The arrays are constructed using genomic clones of various types such as bacterial artificial chromosomes (a DNA construct that can be used to carry 150 to 350 kbp [kilobase pairs] of normal DNA) or synthetic oligonucleotides that are spaced across the entire genome. This technique has allowed the detection of genetic aberrations of smaller magnitude than was possible using metaphase chromosomes, although they have now been superseded by high density single-nucleotide polymorphism (SNP) arrays (see below).

2.2.8 Spectral Karyotyping/Multifluor Fluorescence in Situ Hybridization

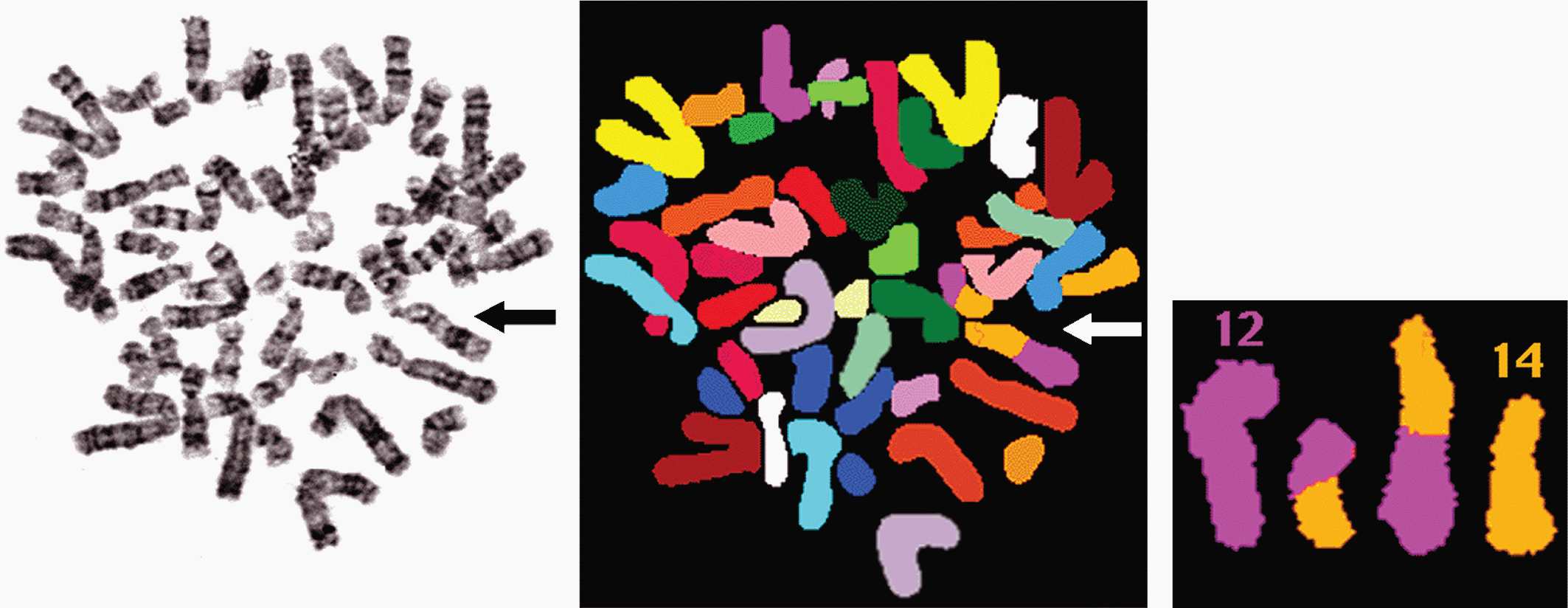

A deficiency of both array CGH and conventional cDNA microarrays is the lack of information about structural changes within the karyotype. For example, with an expression array, a particular gene may be overexpressed but it would be unclear whether this is secondary to a translocation placing the gene next to a strong promoter or an amplification. Universal chromosome painting techniques have been developed to assist in this determination with which it is possible to analyze all chromosomes simultaneously. Two commonly used techniques, spectral karyotyping (SKY) (Veldman et al, 1997) and multifluor fluorescence in situ hybridization (M-FISH) (Speicher et al, 1996), are based on the differential display of colored fluorescent chromosome-specific paints, which provide a complete analysis of the chromosomal complement in a given cell. Using this combination of 23 different colored paints as a “cocktail probe,” subtle differences in fluorochrome labeling of chromosomes after hybridization allows a computer to assign a unique color to each chromosome pair. Abnormal chromosomes can be identified by the pattern of color distribution along them with chromosomal rearrangements leading to a distinct transition from one color to another at the position of the breakpoint (Fig. 2–10). In contrast to CGH, detection of such karyotype rearrangements using SKY and M-FISH is not dependent upon change in copy number. This technology is particularly suited to solid tumors where the complexity of the karyotypes may mask the presence of chromosomal aberrations.

FIGURE 2–10 SKY and downstream analyses of a patient with a translocation. One of the aberrant chromosomes can initially be seen with G banding, the same metaphase spread has been subjected to SKY and then a 12;14 reciprocal translocation is identified.

2.2.9 Single-Nucleotide Polymorphisms

DNA sequences can differ at single nucleotide positions within the genome. These SNPs can occur as frequently as 1 in every 1000 base pairs and can occur in both introns and exons. In introns they generally have little effect, but in exons they can affect protein structure and function. For example, SNPs may be involved in altered drug metabolism because of their modifying effect on the cytochrome P450 metabolizing enzymes. They also contribute to disease (eg, SNPs that result in missense mutations) and disease predisposition. Most early methods to characterize SNPs required PCR amplification of the sample to be genotyped prior to sequence analysis; modern methods of gene sequencing and array analyses, however, have largely replaced this older technique. One application of SNPs in cancer medicine has been the use of SNP arrays in genomic analyses. These DNA microarrays, use tiled SNP probes to some of the 50 million SNPs in the human genome to interrogate genomic architecture. For example, SNP arrays can be used to study such phenomena as loss of heterozygosity (LOH) and amplifications. Indeed, the particular advantage of SNP arrays is that they can detect copy-neutral LOH (also known as uniparental disomy or gene conversion) whereby one allele or whole chromosome is missing and the other allele is duplicated with potential pathological consequences.

2.2.10 Sequencing of DNA

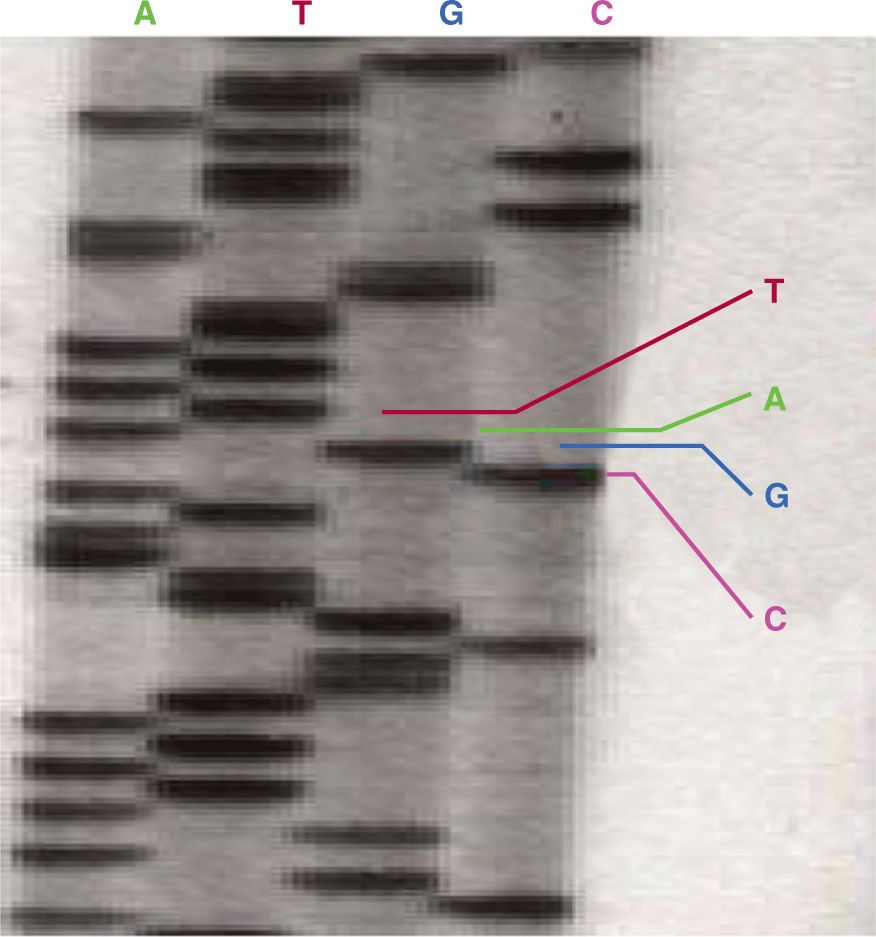

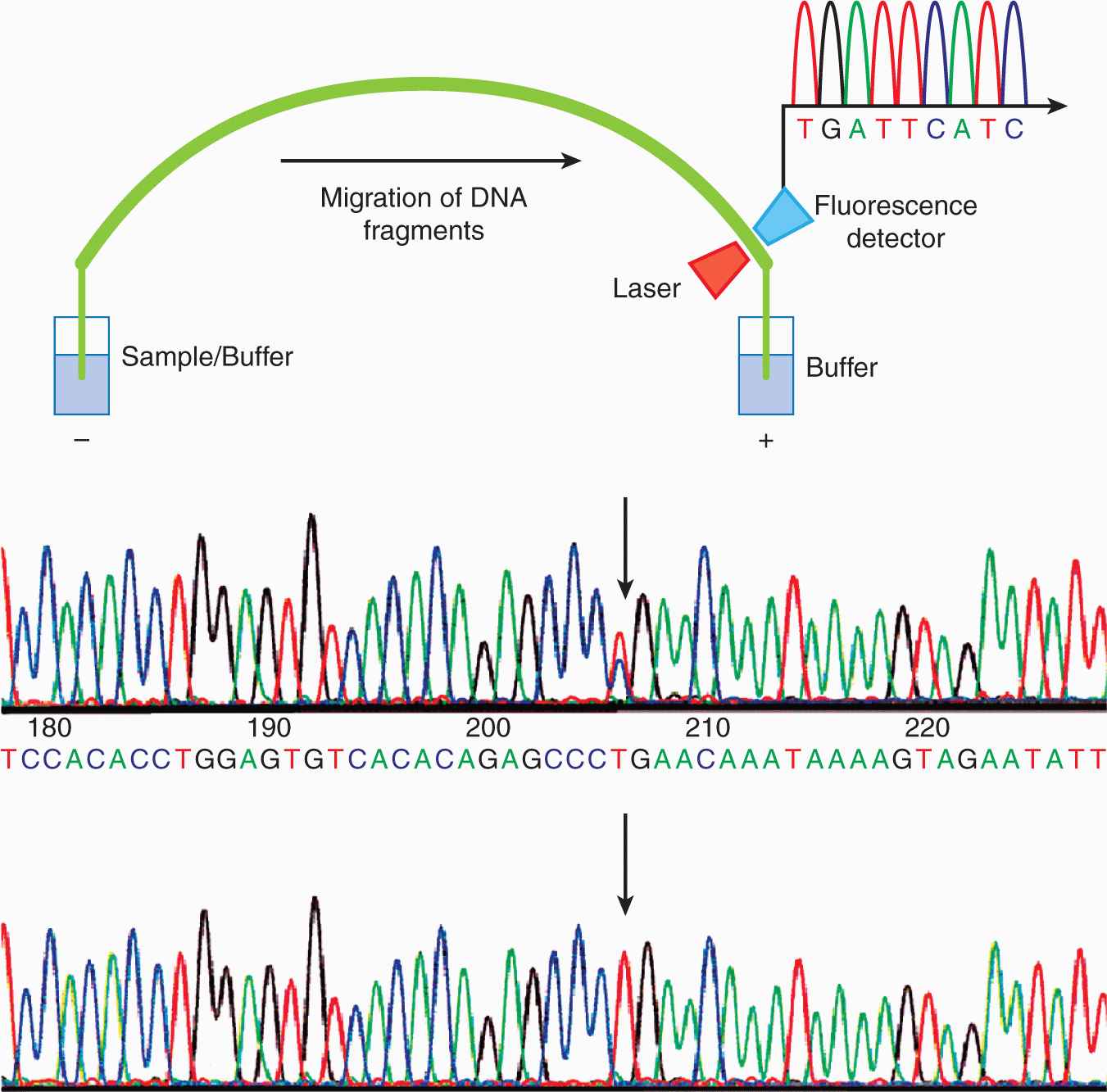

To characterize the primary structure of genes, and thus of the potential repertoire of proteins that they encode, it is necessary to determine the sequence of their DNA. Sanger sequencing (the classical method) relied on oligonucleotide primer extension and dideoxy-chain termination (dideoxy-nucleotides (ddNTPs) lack the 3′-OH group required for the phosphodiester bond between 2 nucleosides). DNA sequencing was carried out in 4 separate reactions each containing 1 of the 4 ddNTPs (ie, ddATP, ddCTP, ddGTP, or ddTTP) together with ddNTPs. In each reaction, the same primer was used to ensure DNA synthesis began at the same nucleotide. The extended primers therefore terminated at different sites whenever a specific ddNTP was incorporated. This method produced fragments of different sizes terminating at different 3′ nucleotides. The newly synthesized and labeled DNA fragments were heat-denatured, and then separated by size with gel electrophoresis and with each of the 4 reactions in individual adjacent lanes (lanes A, T, G, C); the DNA bands were then visualized by autoradiography or UV light, and the DNA sequence could be directly interpreted from the x-ray film or gel image (Fig. 2–11). Using this method it was possible to obtain a sequence of 200 to 500 bases in length from a single gel. The next development was automated Sanger sequencing which involved the development of fluorescently labeled-primers (dye primers) and –ddNTPs (dye terminators). With the automated procedures the reactions are performed in a single tube containing all 4 ddNTPs, each labeled with a different fluorescent dye. Since the four dyes fluoresce at different wavelengths, a laser then reads the gel to determine the identity of each band according to the wavelengths at which it fluoresces. The results are then depicted in the form of a chromatogram, which is a diagram of colored peaks that correspond to the nucleotide in that location in the sequence. Then sequencing analysis software interprets the results, identifying the bases from the fluorescent intensities (Fig. 2–12).

FIGURE 2–11 Dideoxy-chain termination sequencing showing an extension reaction to read the position of the nucleotide guanidine (see text for details). (Courtesy of Lilly Noble, University of Toronto, Toronto.)

FIGURE 2–12 Outline of automated sequencing and thereafter automated sequencing of BRCA2, the hereditary breast cancer predisposition gene. Each colored peak represents a different nucleotide. The lower panel is the sequence of the wild-type DNA sample. The sequence of the mutation carrier in the upper panel contains a double peak (indicated by an arrow) in which nucleotide T in intron 17 located 2 bp downstream of the 5′ end of exon 18 is converted to a C. The mutation results in aberrant splicing of exon 18 of the BRCA2 gene. The presence of the T nucleotide, in addition to the mutant C, implies that only 1 copy of the 2 BRCA2 genes is mutated in this sample.

So-called next-generation sequencing (NGS) uses a variety of approaches to automate the sequencing process by creating micro-PCR reactors and/or attaching the DNA molecules to be sequenced to solid surfaces or beads, allowing for millions of sequencing events to occur simultaneously. Although the analyzed sequences are generally much shorter (~21 to ~400 base pairs) than in previous sequencing technologies, they can be counted and quantified, allowing for the identification of mutations in a small subpopulation of cells which is part of a larger population with wild-type sequences. The recent introduction of approaches that allow for sequencing of both ends of a DNA molecule (ie, paired end massively parallel sequencing or mate-pair sequencing), make it possible to detect balanced and unbalanced somatic rearrangements (eg, fusion genes) in a genome-wide fashion.

There are several types of NGS machines in routine use that fall into 4 methodological categories; (a) Roche/454, Life/APG, (b) Illumina/Solexa, (c) Ion Torrent, and (d) Pacific Biosciences. It is beyond the scope of this chapter to describe these in detail or to foreshadow developing technologies, but an overview of the key differences is provided below.

Each technology includes a number of steps grouped as (a) template preparation, (b) sequencing/imaging, and (c) data analysis. Initially, all methods involve randomly breaking genomic DNA into small sizes from which either fragment templates (randomly sheared DNA usually <1 kbp in size) or mate-pair templates (linear DNA fragments originating from circularized sheared DNA of a particular size) are created.

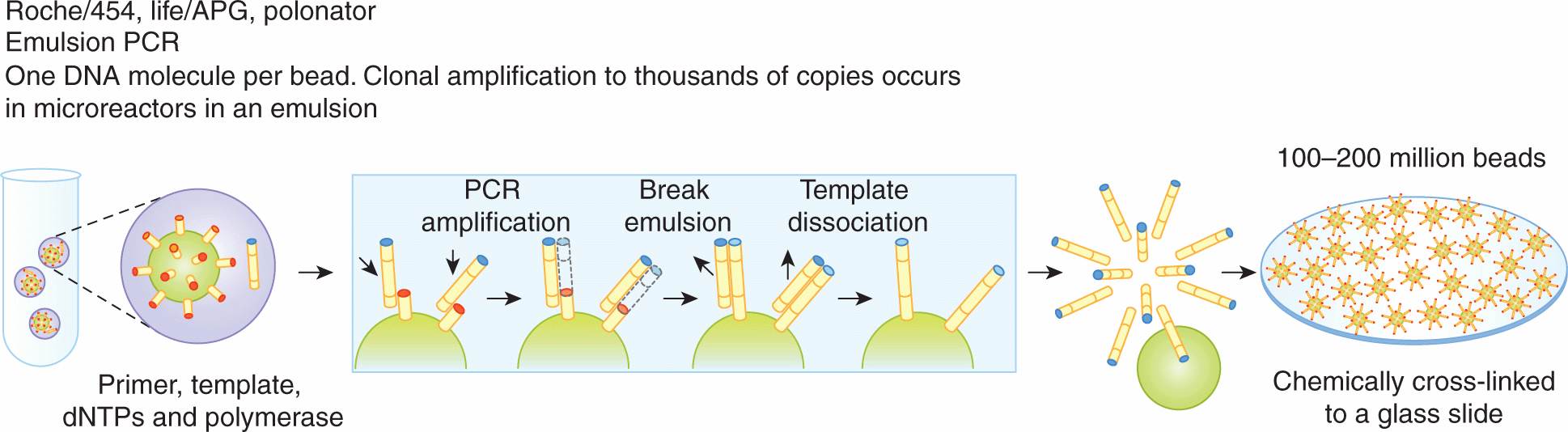

There are 2 types of template preparation: clonally amplified templates and single-molecule templates. Clonally amplified templates rely on PCR techniques to amplify the DNA so that fluorescence is detectable when fluorescently labeled nucleotides are added. Emulsion PCR (Fig. 2–13) is used to prepare a library of fragment or mate-pair targets and then adaptors (short DNA segments) containing universal priming sites are ligated to the target ends, allowing complex genomes to be amplified with common PCR primers. After ligation, the DNA is separated into single strands and captured onto beads under conditions that favor 1 DNA molecule per bead. After the successful amplification of DNA, millions of molecules can be chemically cross-linked to an amino-coated glass surface (Life/APG; Ion Torrent) or deposited into individual PicoTiterPlate (PTP) wells (Roche/454). Solid-phase amplification (Fig. 2–14) used in the Illumina/Solexa platform produces randomly distributed, clonally amplified clusters from fragment or mate-pair templates on a glass slide. High-density forward and reverse primers are covalently attached to the slide and the DNA segments of interest and the ratio of the primers to the template on the support define the surface density of the amplified clusters. These primers can also provide free ends to which a universal primer can be hybridized to initiate the NGS reaction.

FIGURE 2–13 In emulsion PCR (emPCR), a reaction mixture is generated compromising an oil–aqueous emulsion to encapsulate bead–DNA complexes into single aqueous droplets. PCR amplification is subsequently carried out in these droplets to create beads containing thousands of copies of the same template sequence. EmPCR beads can then be chemically attached to a glass slide or a reaction plate. (From Metzker, 2010.)

FIGURE 2–14 The 2 basic steps of solid-phase amplification are initial priming and extending of the single-stranded, single-molecule template, and then bridge amplification of the immobilized template with immediately adjacent primers to form clusters. (From Metzker, 2010.)

In general, the preparation of single-molecule templates is more straightforward and requires less starting material (<1 μg) than emulsion PCR or solid-phase amplification. More importantly, these methods do not require PCR, which may create mutations and bias in amplified templates and regions. A variant of this (Pacific Biosciences; see below) uses spatially distributed single-polymerase molecules that are attached to a solid support that analyze circularized sheared DNA selected for a given size, such as 2 kbp, to which primed template molecules are bound.

Cyclic reversible termination (CRT) is currently used in the Illumina/Solexa platform. CRT uses reversible terminators in a cyclic method that comprises nucleotide incorporation, fluorescence imaging and cleavage. In the first step, a DNA polymerase, bound to the primed template, adds or incorporates only 1 fluorescently modified nucleotide, complementary to the template base. DNA synthesis is then terminated. Following incorporation, the remaining unincorporated nucleotides are washed away. Imaging is then performed to identify the incorporated nucleotide. This is followed by a cleavage step, which removes the terminating/inhibiting group and the fluorescent dye. Additional washing is performed before starting another incorporation step.

Another cyclic method is single-base ligation (SBL) used in the Life/APG platform, which uses a DNA ligase and either 1-or 2-base-encoded probes. In its simplest form, a fluorescently labeled probe hybridizes to its complementary sequence adjacent to the primed template. DNA ligase is then added which joins the dye-labeled probe to the primer. Nonligated probes are washed away, followed by fluorescence imaging to determine the identity of the ligated probe. The cycle can be repeated either by (a) using cleavable probes to remove the fluorescent dye and regenerate a 5′-PO4 group for subsequent ligation cycles or (b) by removing and hybridizing a new primer to the template.

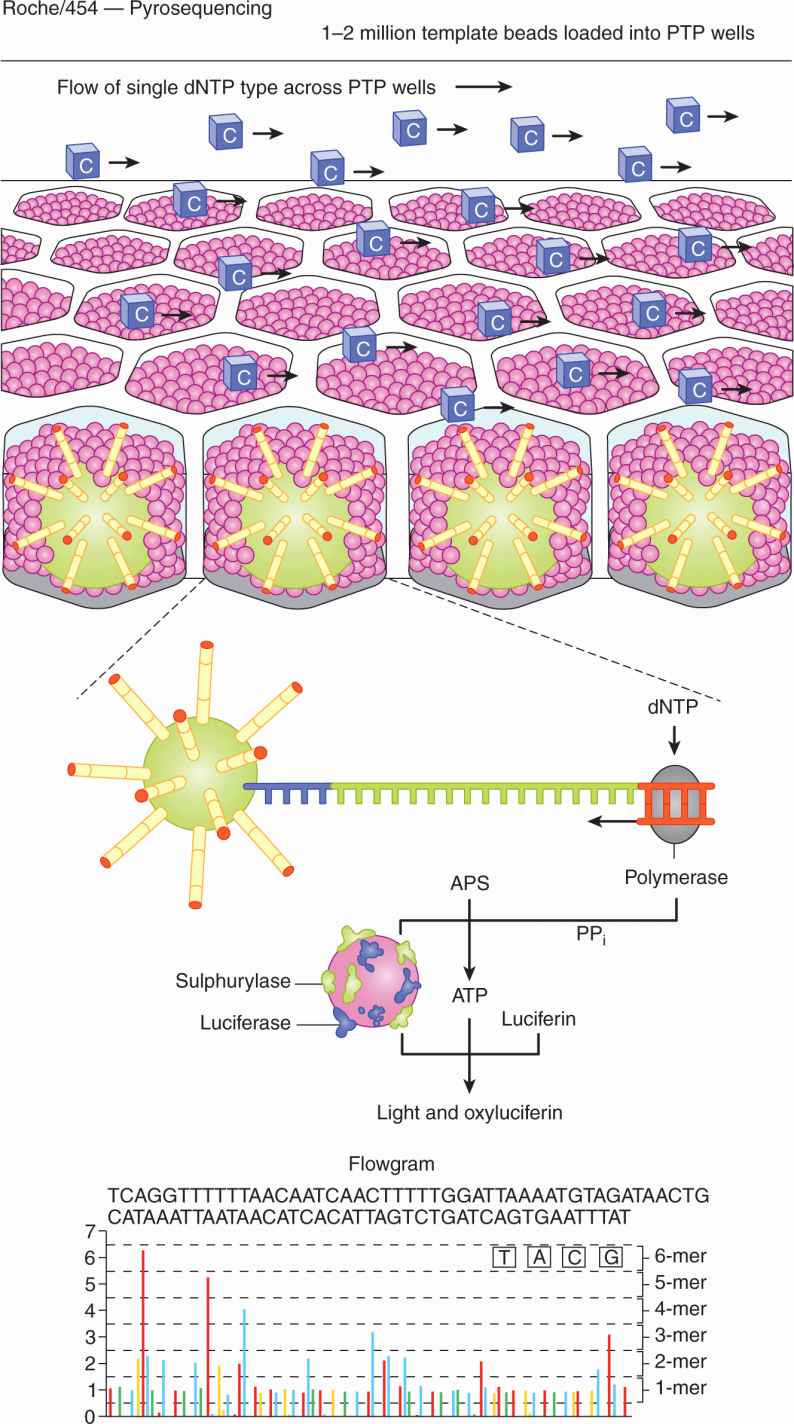

Pyrosequencing (used in the Roche/454 platform) (Fig. 2–15) is a bioluminescence method that measures the incorporation of nucleotides by the release of inorganic pyrophosphate by proportionally converting it into visible light using serial enzymatic reactions. Following loading of the DNA-amplified beads into individual PTP wells, additional smaller beads, which are coupled with sulphurylase and luciferase are added. Nucleotides are then flowed sequentially in a fixed order across the PTP device. If a nucleotide complementary to the template strand appears, the polymerase extends the existing DNA strand by adding nucleotide(s). Addition of 1 (or more) nucleotide(s) results in a reaction that generates a light signal that is recorded. The signal strength is proportional to the number of nucleotides incorporated in a single nucleotide flow. The order and intensity of the light peaks are recorded to reveal the underlying DNA sequence.

FIGURE 2–15 Pyrosequencing. After loading of the DNA-amplified beads into individual PicoTiterPlate (PTP) wells, additional beads, coupled with sulphurylase and luciferase, are added. The fiberoptic slide is mounted in a flow chamber, enabling the delivery of sequencing reagents to the beadpacked wells. The underneath of the fiberoptic slide is directly attached to a high-resolution camera, which allows detection of the light generated from each PTP well undergoing the pyrosequencing reaction. The light generated by the enzymatic cascade is recorded and is known as a flow gram. PP, Inorganic pyrophosphate. (From Metzker, 2010.)

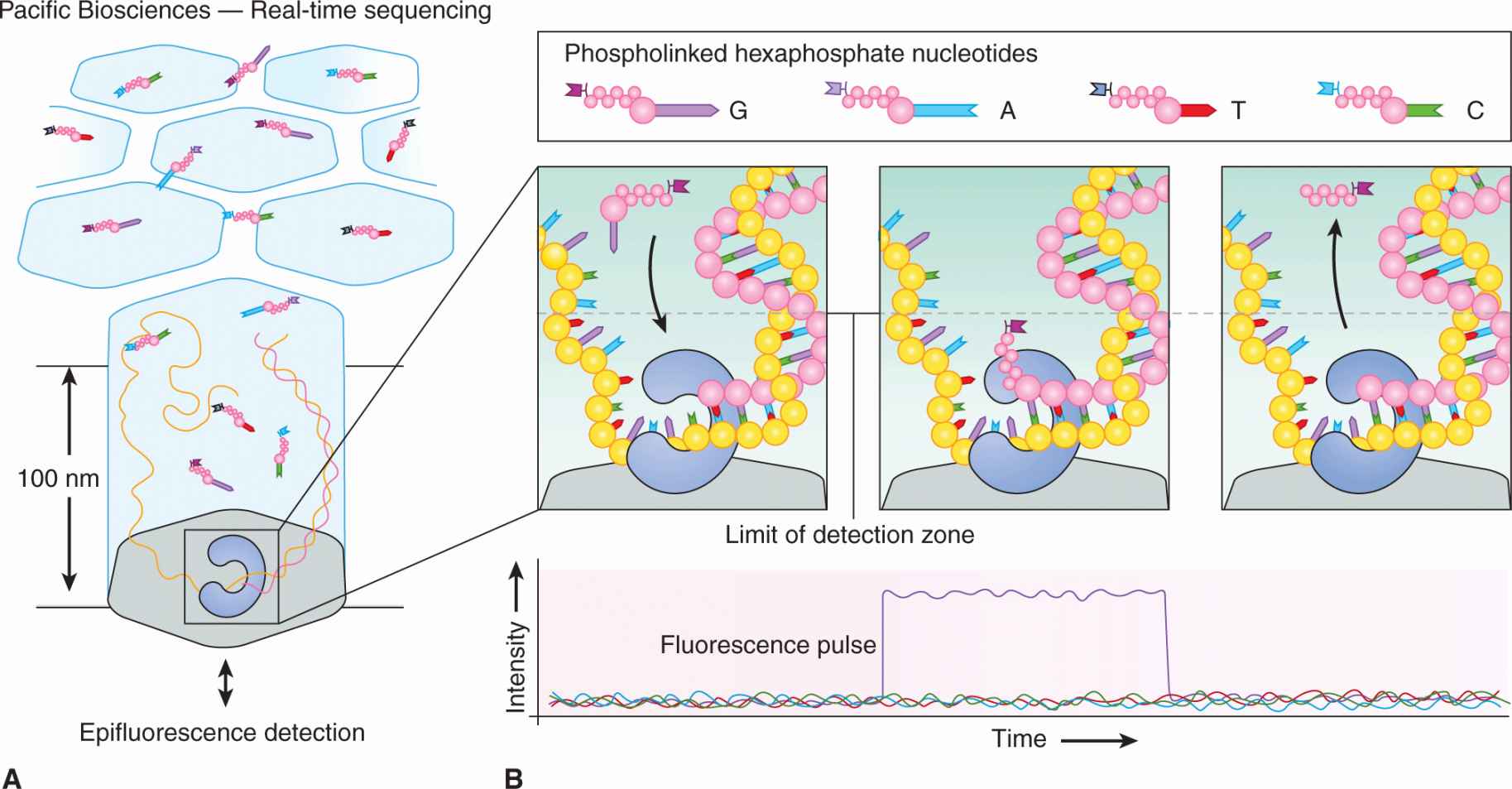

The method of real-time sequencing (as used in the Pacific Biosciences platform, Fig. 2–16) involves imaging the continuous incorporation of dye-labeled nucleotides during DNA synthesis by attaching single DNA polymerase molecules to the bottom surface of individual wells known as “zero-mode waveguide detectors” that can detect the light from the fluorescent nucleotides as they are incorporated into the elongating primer strand.

FIGURE 2–16 Pacific Biosciences’ four-color real-time sequencing method. The zero-mode waveguide (ZMW) design reduces the observation volume, therefore reducing the number of stray fluorescently labeled molecules that enter the detection layer for a given period. The residence time of phospho linked nucleotides in the active site is governed by the rate of catalysis and is usually milliseconds. This corresponds to a recorded fluorescence pulse, because only the bound, dye-labeled nucleotide occupies the ZMW detection zone on this timescale. The released, dye-labeled pentaphosphate by-product quickly diffuses away, as does the fluorescence signal. (From Metzker, 2010.)

The Ion Torrent sequencing relies on emulsion PCR amplified particles (ion sphere particles) to be deposited into an array of wells by a short centrifugation step. The sequencing is based on the detection of hydrogen ions that are released during the polymerization of DNA, as opposed to the optical methods used in other sequencing systems. A microwell containing a template DNA strand to be sequenced is flooded with a single type of nucleotide. If the introduced nucleotide is complementary to the leading template nucleotide it is incorporated into the growing complementary strand. This causes the release of a hydrogen ion that triggers a hypersensitive ion sensor, which indicates that a reaction has occurred. If homopolymer repeats are present in the template sequence multiple nucleotides will be incorporated in a single cycle. This leads to a corresponding number of released hydrogens and a proportionally higher electronic signal.

Despite the substantial cost reductions associated with next-generation technologies in comparison with the automated Sanger method, whole-genome sequencing is expensive but the costs are continuing to fall. In the interim, investigators are using the NGS platforms to target specific regions of interest. This strategy can be used to examine all of the exons in the genome, specific gene families that constitute known drug targets, or megabase-size regions that are implicated in disease or pharmacogenetic effects. Methods to perform the initial first step are known as genomic partitioning and broadly include methods involving PCR, or other hybridization methodologies. These are generally hybridized to target-specific probes either on a microarray surface or in solution.

The ability to sequence large amounts of DNA at low-cost makes the NGS platforms described above useful for many applications such as discovery of variant alleles through resequencing targeted regions of interest or whole genomes, de novo assembly of bacterial and lower eukaryotic genomes, cataloguing the mRNAs (“transcriptomes”) present in cells, tissues and organisms (RNA–sequencing), and gene discovery.

2.2.11 Variation in Copy Number and Gene Sequence

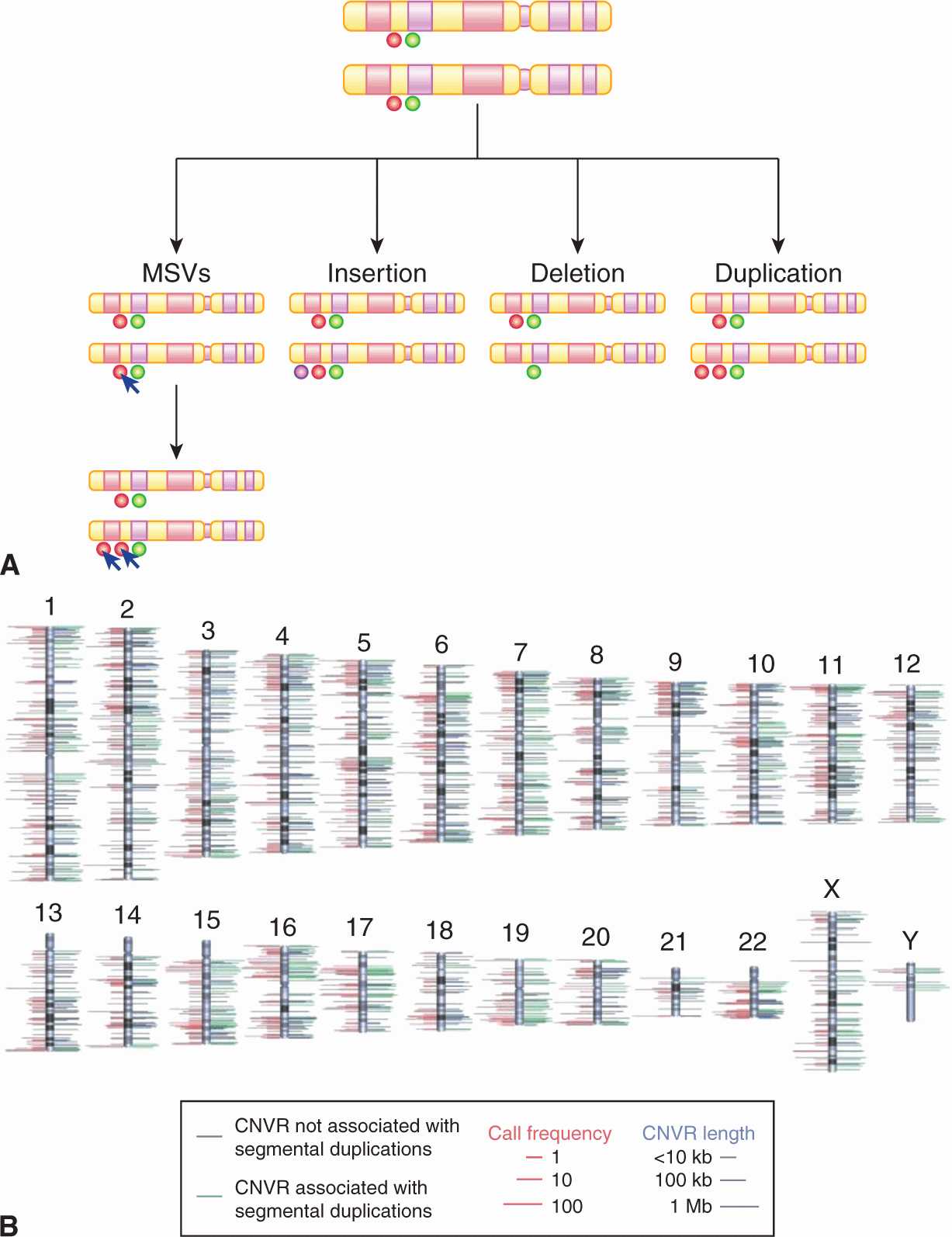

The recent application of genome-wide analysis to human genomes has led to the discovery of extensive genomic structural variation, ranging from kilobase pairs to megabase pairs (Mbp) in size, that are not identifiable by conventional chromosomal banding. These changes are termed copy-number variations (CNVs) and can result from deletions, duplications, triplications, insertions, and translocations; they may account for up to 13% of the human genome (Redon et al, 2006).

Despite extensive studies, the total number, position, size, gene content, and population distribution of CNVs remain elusive. There has not been an accurate molecular method to study smaller rearrangements of 1 to 50 kbp on a genome-wide scale in different populations. Recent analyses revealed 11,700 CNVs involving more than 1000 genes (Redon et al, 2006; Conrad et al, 2010). Wider application of array CGH techniques and NGS is likely to reveal greater structural variation among different individuals and populations, as the majority of CNVs are beyond the resolving capability of current arrays. There are several different classes of CNVs (Fig. 2–17). Entire genes or genomic regions can undergo duplication, deletion and insertion events, whereas multisite variants (MSVs) refer to more complex genomic rearrangements, including concurrent CNVs and mutation or gene conversions (a process by which DNA sequence information is transferred from one DNA helix, which remains unchanged, to another DNA helix, whose sequence is altered). CNVs can be inherited or sporadic; both types may be involved in causing disease including cancer. However, the phenotypic effects of CNVs are unclear and depend on whether dosage-sensitive genes or regulatory sequences are influenced by the genomic rearrangement.

FIGURE 2–17 A) Outline of the classes of CNVs in the human genome. B) The chromosomal locations of 1447 copy number variation regions (a region covered by overlapping CNVs) are indicated by lines to either side of the ideograms. Green lines denote CNVRs associated with segmental duplications; blue lines denote CNVRs not associated with segmental duplications. The length of right-hand side lines represents the size of each CNVR. The length of left-hand side lines indicates the frequency with which a CNVR is detected (minor call frequency among 270 HapMap samples). When both platforms identify a CNVR, the maximum call frequency of the two is shown. For clarity, the dynamic range of length and frequency are log transformed (see scale bars). (From Redon et al, 2006.)

Use of high-resolution SNP arrays in cancer genomes has shown that CNVs are frequent contributors to the spectrum of mutations leading to cancer development. In adenocarcinoma of the lung, a total of 57 recurrent copy number changes were detected in a collection of 528 cases (Weir et al, 2007). In 206 cases of glioblastoma, somatic copy number alterations were also frequent, and concurrent gene expression analysis showed that 76% of genes affected by copy number alteration had expression patterns that correlated with gene copy number (Cerami et al, 2010). High-resolution analyses of copy number and nucleotide alterations have been carried out on breast and colorectal cancer (Leary et al, 2008). Individual colorectal and breast tumors had, on average, 7 and 18 copy number alterations, respectively, with 24 and 9 as the average number of protein-coding genes affected by amplification or homozygous deletions.

Heritable germline CNVs may also contribute to cancer. For example, a heritable CNV at chromosome 1q21.1 contains the NBPF23 gene for which copy number is implicated in the development of neuroblastoma (Diskin et al, 2009). Also, a germline deletion at chromosome 2p24.3 is more common in men with prostate cancer, with higher prevalence in patients with aggressive compared with nonaggressive prostate cancer (Liu et al, 2009). However, how CNVs, either somatic or germline, contribute to cancer development is still poorly understood. Possible explanations come from the Knudson’s two-hit hypothesis (Knudson, 1971): tumor-suppressor genes can be lost as a consequence of a homozygous deletion leading directly to cancer susceptibility (see Chap. 7, Sec. 7.2.3). Alternatively, heterozygous deletions may harbor genes predisposing to cancer that become unmasked when a functional mutation arises in the other chromosome resulting in tumor development. Duplications or gains of chromosomal regions may result in increased expression levels of one or more oncogenes. Germline CNVs can provide a genetic basis for subsequent somatic chromosomal changes that arise in tumor DNA.

2.2.12 Microarrays and RNA Analysis

Microarray analysis has been developed to assess expression of the increasing number of genes identified by the Human Genome Project. There are several commercial kits designed to assist with RNA extraction from cells or tissues. The extracted RNA is then usually converted to cDNA with reverse transcriptase, and this may be combined with an RNA amplification step.

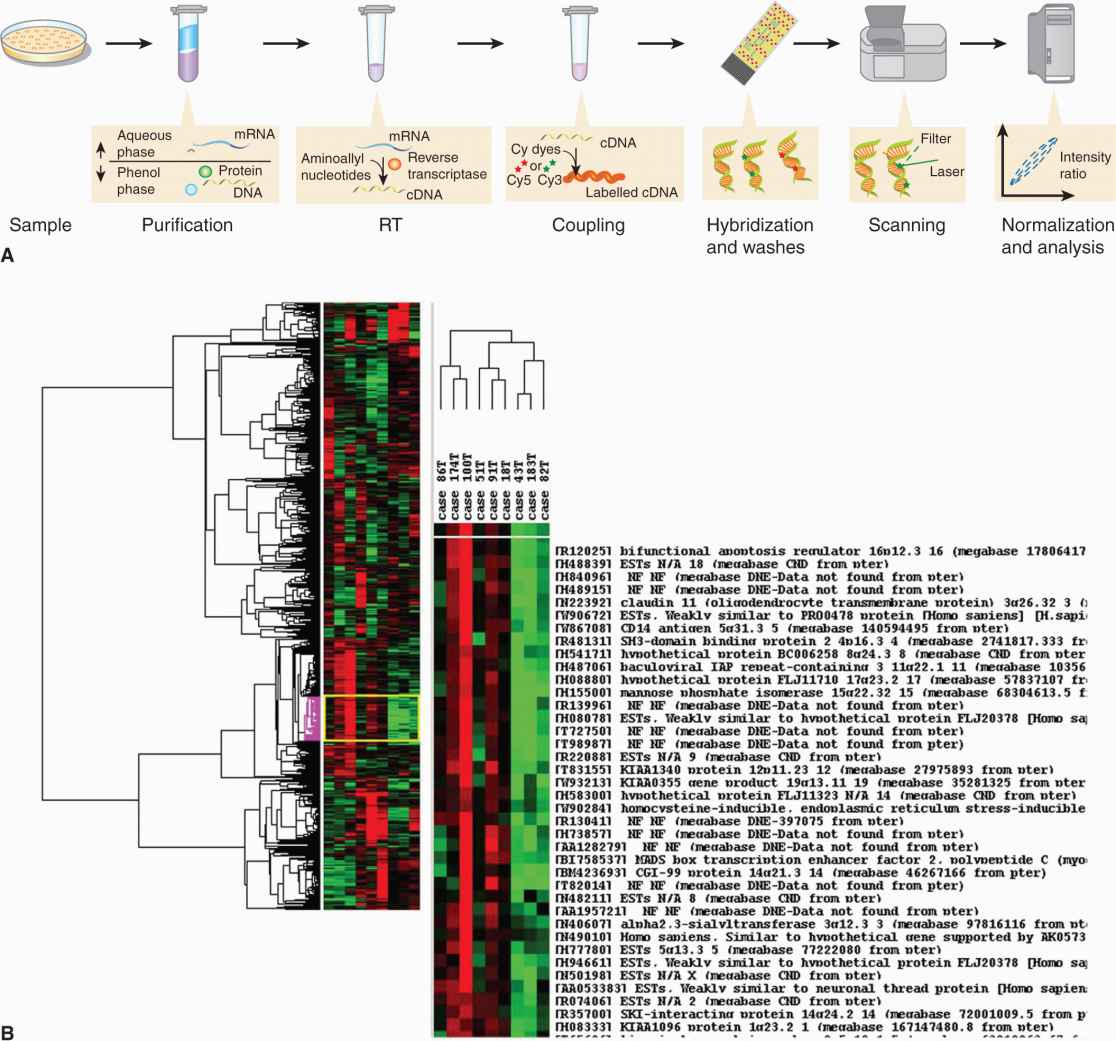

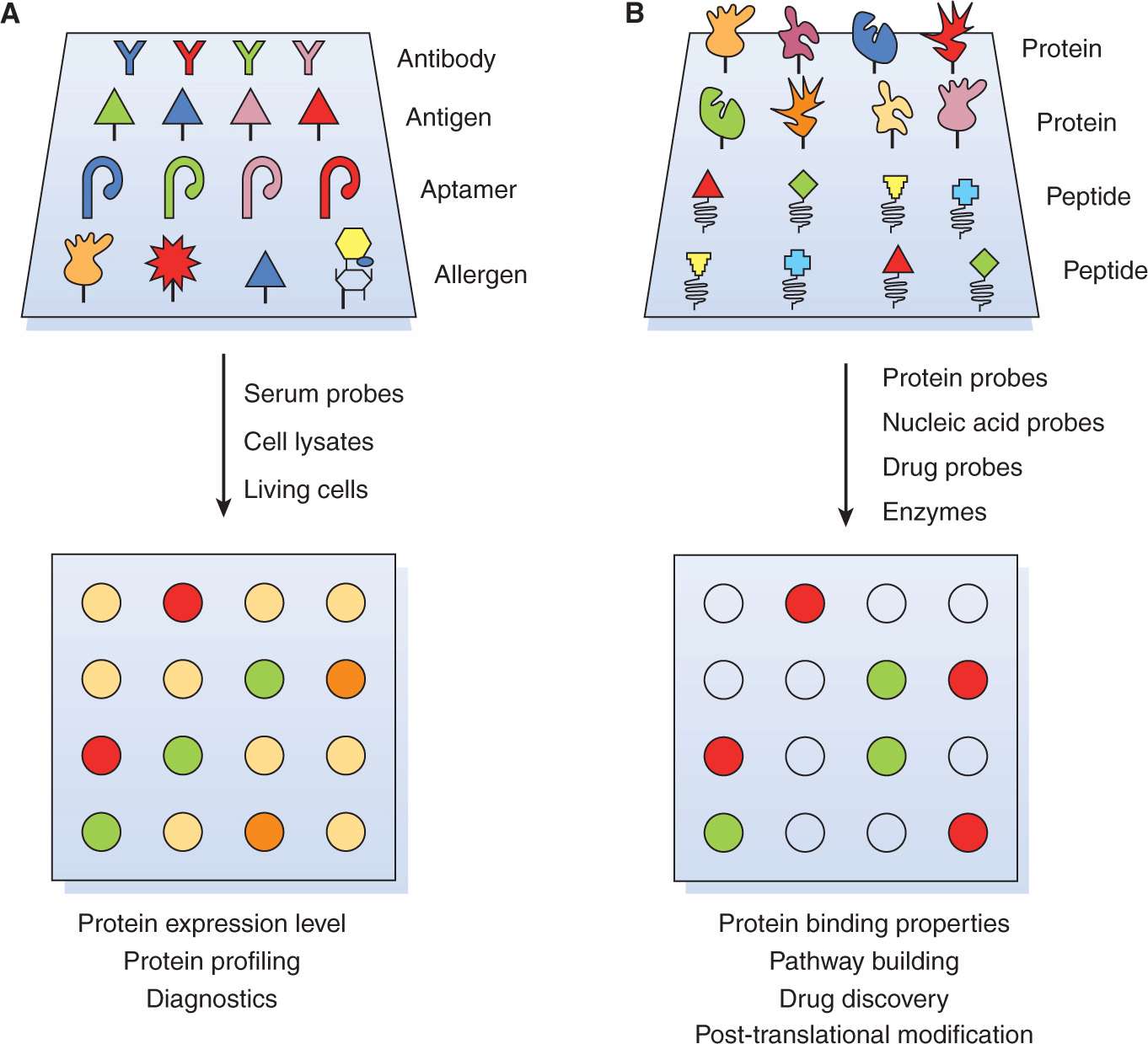

The principle of an expression array involves the production of DNA arrays or “chips” on solid supports for large-scale hybridization experiments. It consists of an arrayed series of thousands of microscopic spots of DNA oligo-nucleotides, called features, each containing specific DNA sequences, known as probes (or reporters). This approach allows for the simultaneous analysis of the differential expression of thousands of genes and has enhanced understanding of the dynamics of gene expression in cancer cells (Fig. 2–18).

FIGURE 2–18 A) The steps required in a microarray experiment from sample preparation to analyses. RT, Reverse transcriptase. For details see text. Briefly, samples are prepared and cDNA is created through reverse transcriptase. The fluorescent label is added either in the RT step or in an additional step after amplification, if present. The labeled samples are then mixed with a hybridization solution that contains light detergents, blocking agents (such as COT1 DNA, salmon sperm DNA, calf thymus DNA, PolyA or PolyT), along with other stabilizers. The mix is denatured and added to a pinhole in a microarray, which can be a gene chip (holes in the back) or a glass microarray. The holes are sealed and the microarray hybridized, either in a hybridization oven, (mixed by rotation), or in a mixer, (mixed by alternating pressure at the pinholes). After an overnight hybridization, all nonspecific binding is washed off. The microarray is dried and scanned in a special machine where a laser excites the dye and a detector measures its emission. The intensities of the features (several pixels make a feature) are quantified and normalized (see text). (Reproduced with permission from Jacopo Werther/Wikimedia Commons.) B) The output from a typical microarray experiment, a hierarchical clustering of cDNA microarray data obtained from 9 primary laryngeal tumors. Results were visualized using Tree View software, and include the dendrogram (clustering of samples) and the clustering of gene expression, based on genomic similarity. Tree View represents the 946 genes that best distinguish these 2 groups of samples. Genes whose expression is higher in the tumor sample relative to the reference sample are shown in red; those whose expression is lower than the reference sample are shown in green; and no change in gene expression is shown in black. (Courtesy of Patricia Reis and Shilpi Arora, the Ontario Cancer Institute and Princess Margaret Hospital, Toronto.)

There are a number of microarray platforms in common use. These platforms include: (a) Spotted arrays where DNA fragments (usually created by PCR) or oligonucleotides are immobilized on glass slides. The size of the fragment can be any length (usually 500 bp to 1 kbp) and the size of the oligo-nucleotides range from 20 to 100 nucleotides. These arrays can be created in individual laboratories using “affordable” equipment. (b) Affymetrix arrays, where the probes are synthesized using a light mask technology and are typically small (20 to 25 bp) oligonucleotides. (c) NimbleGen, the maskless array synthesizer technology that uses 786,000 tiny aluminum mirrors to direct light in specific patterns. Photo deposition chemistry allows single-nucleotide extensions with 380,000 or 2.1 million oligonucleotides/array as the light directs base pairing in specific sequences. (d) Agilent, which uses ink-jet printer technology to extend up to 60-mer bases through phosphoramidite chemistry. The capacity is 244,000 oligonucleotides/array. The analysis of microarrays is discussed in Section 2.7.1.

All the sequencing approaches described in Section 2.2.10 can be applied to RNA, in some cases by simply by converting the RNA to cDNA before analysis. It may also be necessary to remove the ribosomal RNA from the sample to increase the sensitivity of detection. This approach, known as RNA-Seq is becoming increasingly available, although it remains expensive. The technique possesses certain advantages when compared to expression microarrays in that it obviates the requirement for preexisting sequence information in order to detect and evaluate transcripts, and can detect fusion transcripts.

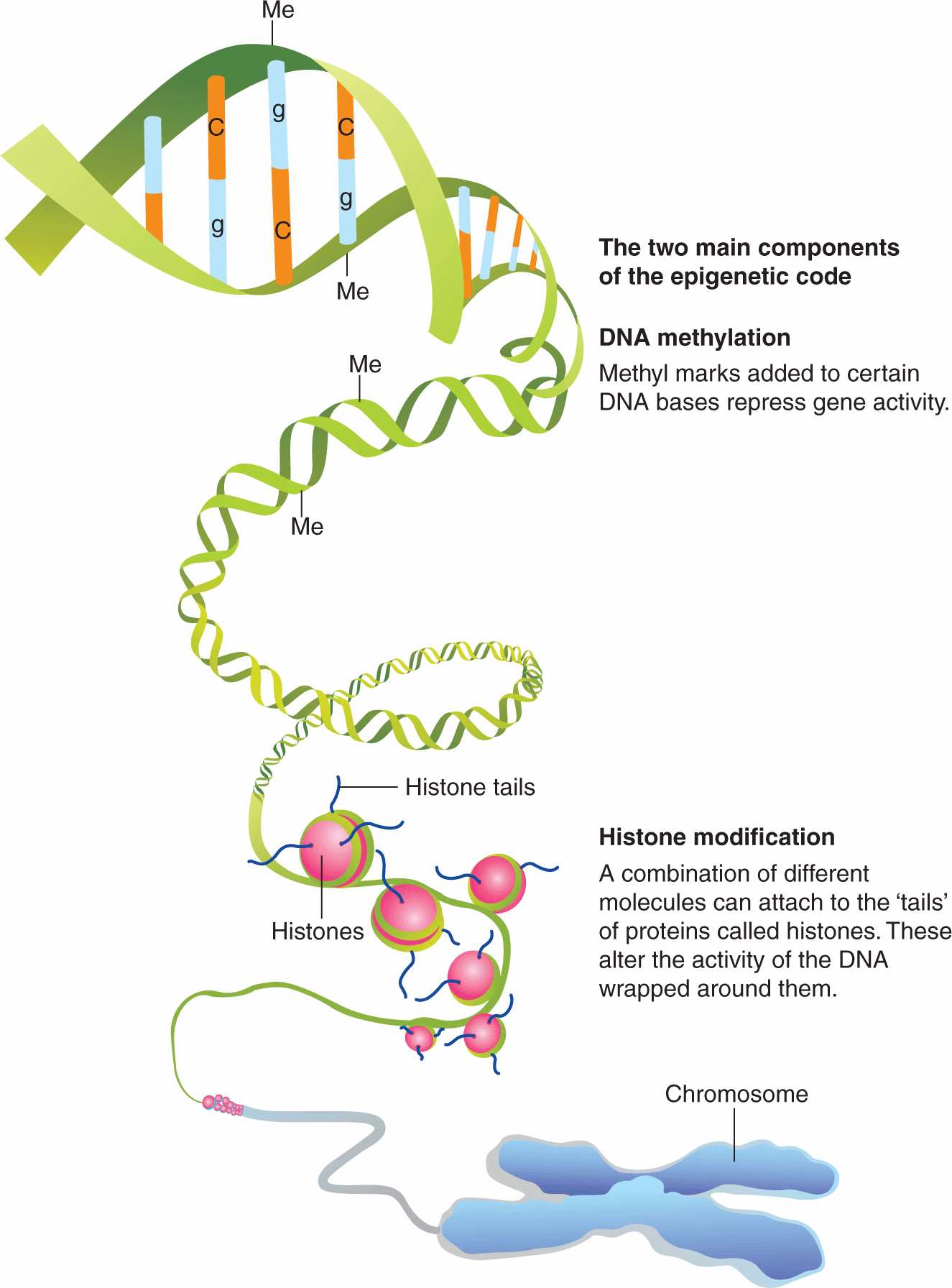

2.3 EPIGENETICS

Epigenetics relates to heritable changes in gene expression that are not encoded in the genome. These processes are mediated by the covalent attachment of chemical groups (eg, methyl or acetyl groups) to DNA and associated proteins, histones and chromatin (Fig. 2–19). Examples of epigenetic effects include imprinting, gene silencing, X chromosome inactivation, position effect, reprogramming, and regulation of histone modifications and heterochromatin. Importantly, epigenetic change is thought to be inherent in the carcinogenesis process. In general, cancer cells exhibit generalized, genome-wide hypomethylation and local hypermethylation of CpG islands associated with promoters (Novak, 2004). Although the significance of each epigenetic change is unclear, hundreds to thousands of genes can be epigenetically silenced by DNA methylation during carcinogenesis. Given these widespread effects, there are epigenetic modifier drugs in current clinical use and there is great potential for further therapeutic utility (see Chap. 17, Sec. 17.3). Additionally, because tumor-derived DNA is present in various, easily accessible body fluids, tumor specific epigenetic modifications such as methylated DNA could prove to be a useful biomarkers for cancer prediction or prognosis (Woodson et al, 2008).

FIGURE 2–19 An overview of the major epigenetic mechanisms that affect gene expression. In addition, there are a number of varieties of histone modifications that are associated with alterations in gene expression or characteristic states, such as stem cells. (From http://embryology.med.unsw.edu.au/MolDev/Images/epigenetics.jpg.)

2.3.1 Histone Modification

Histones are alkaline proteins found in eukaryotic cell nuclei that package and order DNA into structural units called nucleosomes. Core histones consist of a globular C-terminal domain and an unstructured N-terminal tail. The epigenetic-related modifications to the histone protein occur primarily on the N-terminal tail (Novak, 2004). These modifications appear to influence transcription, DNA repair, DNA replication and chromatin condensation. For example, acetylation of lysine is associated with transcriptionally active DNA, while the effects (ie, activation or repression of transcription) of lysine and arginine methylation vary by location of the amino acid, number of methyl groups, and proximity to a gene promoter (Turner, 2007).

2.3.2 DNA Methylation

DNA methylation involves the addition of a methyl group to the 5′ position of the cytosine pyrimidine ring or the number 6 nitrogen of the adenine purine ring in DNA. In humans, approximately 1% of DNA bases undergo methylation and 10% to 80% of 5′-CpG-3′ dinucleotides are methylated; non-CpG methylation is more prevalent in embryonic stem cells. Unmethylated CpGs are often grouped in clusters called CpG islands, which are present in the 5′ regulatory regions of many genes (Gardiner-Garden and Frommer, 1987). In cancer, for reasons that remain unclear, gene promoter CpG islands acquire abnormal hypermethylation, which results in transcriptional silencing that can be inherited by daughter cells following cell division. There are at least 2 important consequences of DNA methylation. First, the methylation of DNA may physically impede the binding of transcriptional activators to the promoter, and second, methylated DNA may be bound by proteins known as methyl-CpG-binding domain proteins (MBDs). These proteins can recruit additional proteins to the locus, such as histone deacetylases, thereby forming heterochromatin (tightly coiled and generally inactive) linking DNA methylation to chromatin structure.

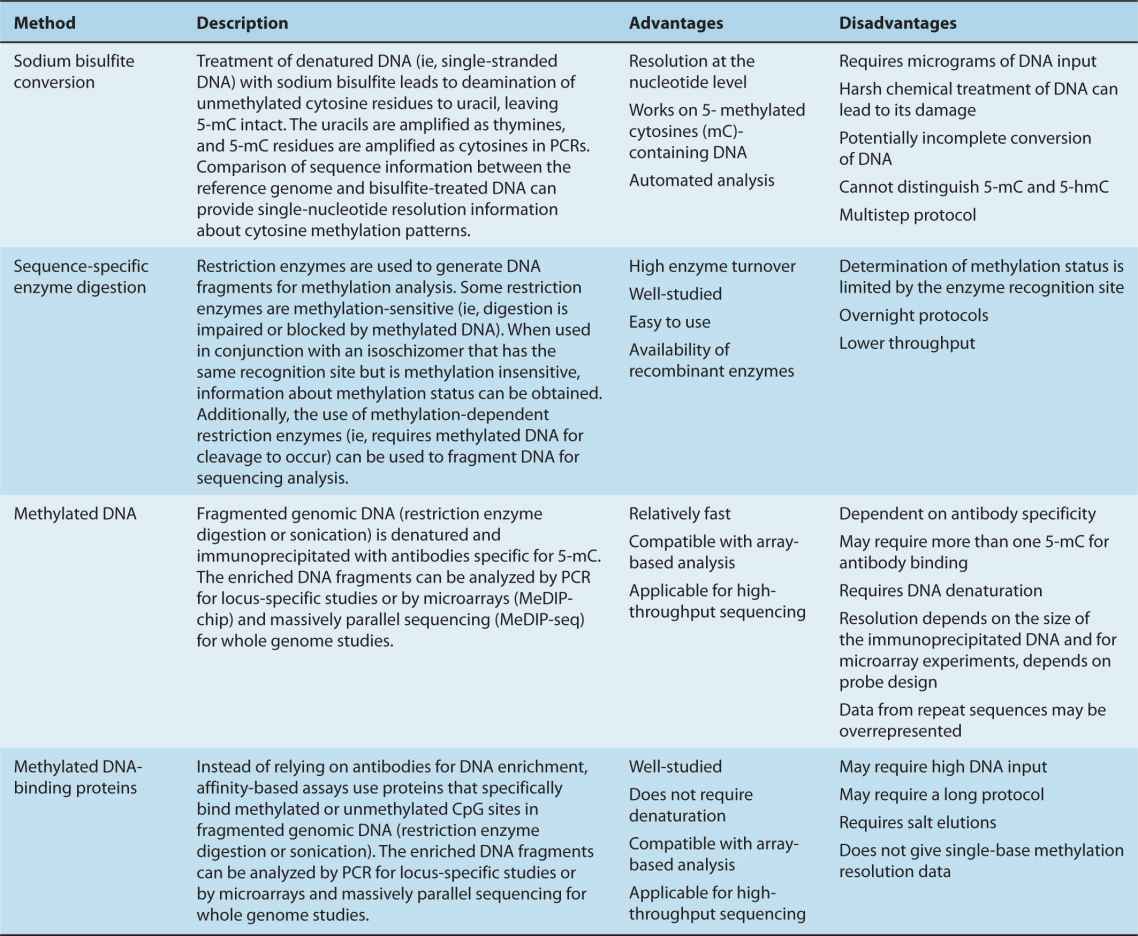

2.3.3 Technologies for Studying Epigenetic Changes

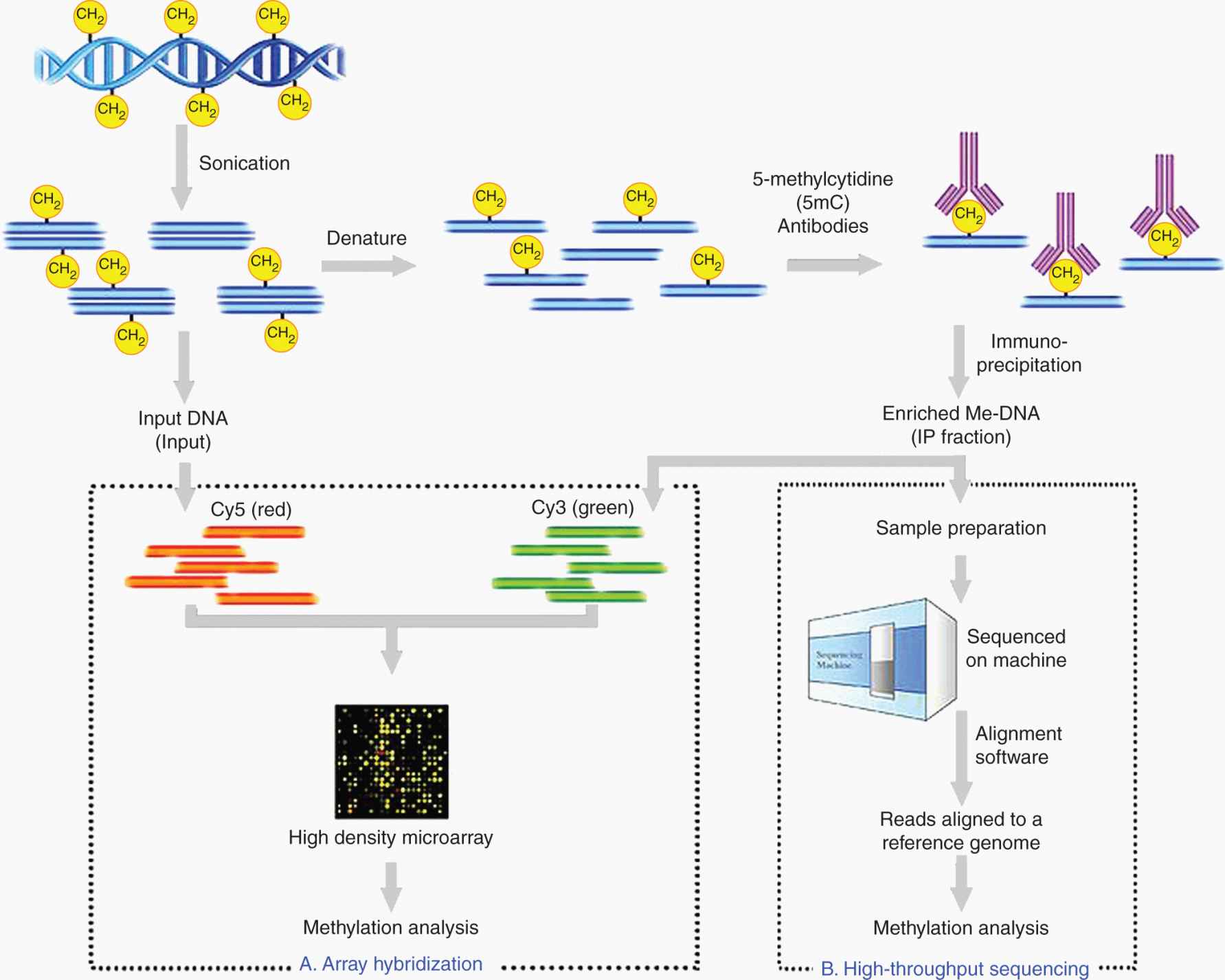

Epigenetic research uses a wide range of techniques designed to determine DNA–protein interactions, including chromatin immunoprecipitation (ChIP) (together with ChIP-on-chip and ChIP-seq), histone-specific antibodies, methylation-sensitive restriction enzymes and bisulfite sequencing. Here, we focus on the main approaches for studying DNA methylation along with their relative advantages and disadvantages (Table 2–3). A few points are worth emphasizing; sodium bisulfite converts unmethylated cytosines to uracil, while methylated cytosines (mC) remain unchanged (Fig. 2–20). This technique can reveal the methylation status of every cytosine residue, and it is amenable to massively parallel sequencing methods. Affinity-based methods using methyl-specific antibodies (MeDIP) are becoming more popular for whole genome analyses as methyl-specific antibodies improve in sensitivity and specificity (Fig. 2–21).

TABLE 2–3 Methods for analyzing DNA methylation.

FIGURE 2–20 The most commonly used technique is sodium bisulfite conversion, the “gold standard” for methylation analysis. Incubation of the target DNA with sodium bisulfite results in conversion of all unmodified cytosines to uracils leaving the modified bases 5-methylcytosine or 5-hydroxymethylcytosine (5-mC or 5-hmC) intact. The most critical step in methylation analysis using bisulfite conversion is the complete conversion of unmodified cytosines. Generally, this is achieved by alternating cycles of thermal denaturation with incubation reactions. In this example, the DNA with methylated CpG at nucleotide position #5 was processed using a commercial kit. The recovered DNA was amplified by PCR and then sequenced directly. The methylated cytosine at position #5 remained intact, while the unmethylated cytosines at positions 7, 9, 11, 14, and 15 were completely converted into uracil following bisulfite treatment and detected as thymine following PCR.

FIGURE 2–21 Schematic outline of MeDIP. Genomic DNA is sheared into approximately 400 to 700 bp using sonication and subsequently denatured. Incubation in 5-mC antibodies, along with standard immunoprecipitation (IP), enriches for fragments that are methylated (IP fraction). This IP fraction can become the input sample to 1 of 2 DNA detection methods: array hybridization using high-density microarrays (A) or high-throughput sequencing using the latest in sequencing technology (B). Output from these methods are then analyzed for methylation patterns to answer the biological question. (From http://en.wikipedia.org/wiki/Methylated_DNA_immunoprecipitation.)

A wide variety of analytical and enzymatic downstream methods can be used to characterize isolated genomic DNA of interest. Analytical methods, such as high-performance liquid chromatography (HPLC) and matrix-assisted laser desorption/ionization-time of flight mass spectrometry (MALDI-TOF MS; see also Sec. 2.4), have been used to quantify modified bases in complex DNAs. Although HPLC is highly reproducible, it requires large amounts of DNA and is often unsuitable for high-throughput applications. In contrast, MALDI-TOF MS provides relative quantification and is amenable to high-throughput applications.

Other methods to detect methylation include real-time PCR, blotting, microarrays (eg, ChIP on chip) and sequencing (eg, ChIP-Sequencing [ChIP-Seq]). ChIP-Seq combines ChIP with massively parallel DNA sequencing to identify the binding sequences for proteins of interest. Both ChIP techniques rely upon an antibody being available to an epigenetic modification of interest that is then used to “pull-down” the associated DNA via crosslinking so it can be subsequently analyzed. Previously, ChIP-on-chip was the most common technique utilized to study protein-DNA relations. This technique also utilizes ChIP initially, but the selected DNA fragments are ultimately released (“reverse crosslinked”) and the DNA is purified. After an amplification and denaturation step, the single-stranded DNA fragments are identified by labeling with a fluorescent tag such as Cy5 or Alexa 647 and poured over the surface of a DNA microarray, which is spotted with short, single-stranded sequences that cover the genomic portion of interest.

2.4 CREATING AND MANIPULATING MODEL SYSTEMS

2.4.1 Cell Culture/Cancer Cell Lines

Cells that are cultured directly from a patient are known as primary cells. With the exception of tumor-derived cells, most primary cell cultures have a limited life span. After a certain number of population doublings (called the Hayflick limit) cells undergo senescence and cease dividing although generally retaining viability. However, established or immortalized cell lines have an ability to proliferate indefinitely either through random mutation or deliberate modification, such as enforced expression of the telomerase reverse transcriptase (see Chap. 5, Sec. 5.7). There are numerous well-established cell lines derived from particular cancer cell types such as LNCaP for hormone-sensitive prostate cancer; MCF-7 for hormone-sensitive breast cancer; U87, a human glioblastoma cell line; and SaOS-2 for osteosarcoma.

Despite cell lines often being used in preclinical experiments to explore cancer biology there are a number of caveats that limit their validity: (a) The number of cells per volume of culture medium plays a critical role for some cell types. For example, a lower cell concentration makes granulosa cells undergo estrogen production, whereas a higher concentration makes them appear as progesterone-producing theca lutein cells. (b) Cross-contamination of cell lines may occur frequently and is often caused by proximity (during culture) to rapidly proliferating cell lines such as HeLa cells. Because of their adaptation to growth in tissue culture plates, HeLa cells may spread in aerosol droplets to contaminate and overgrow other cell cultures in the same laboratory, interfering with the validity of data interpretation. The degree of contamination among cell types is unknown because few researchers test the identity or purity of already-established cell lines, although scientific journals are increasingly requiring such tests. (c) As cells continue to divide in culture, they generally grow to fill the available area or volume. This can lead to nutrient depletion in the growth media, accumulation of apoptotic/necrotic (dead) cells and cell-to-cell contact, which leads to contact inhibition or senescence. Furthermore, tumor cells grown continuously in culture may acquire further mutations and epigenetic alterations that can change their properties and may affect their ability to reinitiate tumor growth in vivo. (d) The extent to which cancer cell lines reflect the original neoplasm from which they are derived is variable. For example, the prostate cancer cell line DU145 was derived from a brain metastasis, which is unusual in prostate cancer. Furthermore, the line does not express prostate-specific antigen (PSA) and its hypotriploid karyotype is uncommon in prostate cancer. The increasing recognition of the genetic heterogeneity both between and within individual cancers has raised further concerns about how well individual cell lines represent the cancer type from which they were derived.

2.4.2 Manipulating Genes in Cells

The function of a gene can often be studied by placing it into a cell different from the one from which it was isolated. For example, one may wish to place a mutated oncogene, isolated from a tumor cell, into a normal cell to determine whether it causes malignant transformation. The process of introducing DNA plasmids into cells is termed transfection. A number of transfection protocols have been developed for efficient introduction of foreign DNA into mammalian cells, including calcium phosphate or diethylaminoethyl (DEAE)-dextran precipitation, spheroplast fusion, lipofection, electroporation, and transfer using viral vectors (Ausubel and Waggoner, 2003). For all methods, the efficiency of transfer must be high enough for easy detection, and it must be possible to recognize and select for cells containing the newly introduced gene. Control over the expression of introduced genes can be achieved by the use of inducible expression vectors. These vectors allow the manipulation of a gene, most commonly when an exogenous agent (such as tetracycline or estrogen) is added or taken away from culture media: this is achieved with a specific repressor that responds to the exogenous agent, and is fused to domains that activate the gene of interest.

One method of transfection uses hydroxyethyl piperazineethanesulfonic acid (HEPES)-buffered saline solution (HeBS) containing phosphate ions combined with a calcium chloride solution containing the DNA to be transfected. When the 2 are combined, a fine precipitate of the positively charged calcium and the negatively charged phosphate of the DNA results in a fine solute, which is then added to the recipient cells. As a result of a process not completely understood, the cells take up the DNA-containing precipitate. A more efficient method is the inclusion of the DNA to be transfected in liposomes, which are small, membrane-bounded bodies that can fuse with the cell membrane, thereby releasing the DNA into the cell. For eukaryotic cells, transfection is better achieved using cationic liposomes (or mixtures). Popular agents are lipofectamine (Invitrogen, New York, USA) and UptiFectin (Interchim, Montiuçon Cedex, France). Another method uses cationic polymers such as DEAE-dextran or polyethyleni-mine: the negatively charged DNA binds to the polycation and the complex is absorbed via endocytosis.

Other methods require physical perturbation of cells (which may be detrimental to the study) to introduce DNA. Some examples include electroporation (application of an electric charge), sonoporation (sonic pulses), and optical (laser) transfection. Particle-based methods, such as the gene gun (where the DNA is coupled to a nanoparticle of an inert solid and “shot” directly into the target cell), magnetofection (utilizing magnetic forces to drive nucleic acid particle complexes into the target cell), and impalefection (impaling cells by elongated nanostructures such as carbon nanofibers or silicon nanowires which have been coated with plasmid DNA) are becoming less popular given the greater efficiency of viral transfection.

DNA can also be introduced into cells using viruses as carriers; the technique is called viral transduction, and the cells are transduced. Retroviruses are very stable, as their cDNA integrates into the host mammalian DNA, but only relatively small pieces of DNA (up to 10 kbp) can be transferred. Adenoviral-based vectors can accommodate larger inserts (~36 kbp) and have a very high efficiency of transfer (see Chap. 6, Sec. 6.2.2). However, with increasing frequency, lentiviruses (Fig. 2–22) are being used to introduce DNA into cells; they have the advantages of high-efficiency infection of dividing and nondividing cells, long-term stable expression of the transgene, and low immunogenicity.

FIGURE 2–22 Schematic outlining the process of lentiviral transfection. Cotransfection of the packaging plasmids and transfer vector into the packaging cell line, HEK293T, allows efficient production of lentiviral supernatant. Virus can then be transduced into a wide range of cell types, including both dividing and nondividing mammalian cells. Note that the packaging mix is often separated into multiple plasmids, minimizing the threat of recombinant replication-competent virus production. Viral titers are measured in either transduction units (TU)/mL or multiplicity of infection (MOI), which is the number of transducing lentiviral particles per cell to which the following relationship applies under experimental conditions:

(Total number of cells per well) × (Desired MOI) = Total TU needed

(Total TU needed)/(TU/mL reported on certificate of authentication) = Total mL of lentiviral particles to add to each well

Whichever method is used to introduce the DNA, it is usually necessary to select for retention of the transferred genes before assaying for expression. For this reason, a selectable gene, such as the gene encoding resistance to the antibiotics geneticin (G418), neomycin, or puromycin, can be introduced simultaneously.

2.4.3 RNA Interference

RNA interference (RNAi) is the process of mRNA degradation that is induced by double-stranded RNA in a sequence-specific manner. RNAi has been observed in all eukaryotes, from fission yeast to mammals. The power and utility of RNAi for specifically silencing the expression of any gene for which the sequence is available has driven its rapid adoption as a crucial tool for genetic analysis.

The RNAi pathway is thought to be an ancient mechanism for protecting the host and its genome against viruses that use double-stranded RNA (dsRNA) in their life cycles. RNAi is now recognized to be but one of a larger set of sequence-specific cellular responses to RNA, collectively called RNA silencing. RNA silencing plays a critical role in regulation of cell growth and differentiation using endogenous small RNAs called microRNAs (miRNAs). These miRNAs also play a role in carcinogenesis. For example, miR-15a and miR-16-1 act as putative tumor suppressors by targeting the oncogene BCL2. These miRNAs occur in a cluster at the chromosomal region 13q14, which is frequently deleted in cancer and is downregulated by genomic loss or mutations in CLL (Calin et al, 2005), prostate cancer (Bonci et al, 2008), and pituitary adenomas (Bottoni et al, 2005).

miRNAs are mostly transcribed from introns or other noncoding areas of the genome into primary transcripts of between 1 kb and 3 kb in length, called pri-miRNAs (Rodriguez et al, 2004) (Fig. 2–23). These transcripts are processed by the ribonucleases Drosha and DiGeorge syndrome critical region gene 8 (DGCR8) complex in the nucleus, resulting in a hairpin-shaped intermediate of approximately 70 to 100 nucleotides, called precursor miRNA (pre-miRNA) (Landthaler et al, 2004; Lee et al, 2003). The pre-miRNA is exported from the nucleus to the cytoplasm by exportin 5 (Perron and Provost, 2009). Once in the cytoplasm, the pre-miRNA is processed by Dicer, another ribonuclease, into a mature double-stranded miRNA of approximately 18 to 25 nucleotides. After strand separation, the guide strand or mature miRNA is incorporated into an RNA-induced silencing complex (RISC) and the passenger strand is usually degraded. The RISC complex is comprised of miRNA, argonaute proteins (argonaute 1 to argonaute 4) and other protein factors. The argonaute proteins have a crucial role in miRNA biogenesis, maturation and miRNA effector functions (Hutvagner and Zamore, 2002; Chendrimada et al, 2005).

FIGURE 2–23 miRNA genomic organization, biogenesis and function. Genomic distribution of miRNA genes. The sequence encoding miRNA is shown in red. TF, Transcription factor. A) Clusters throughout the genome transcribed as polycistronic primary transcripts and subsequently cleaved into multiple miRNAs; B) intergenic regions transcribed as independent transcriptional units; C) intronic sequences (in gray) of protein-coding or protein-noncoding transcription units or exonic sequences (black cylinders) of noncoding genes. pri-miRNAs are transcribed and transiently receive a 7-methylguanosine (7mGpppG) cap and a poly(A) tail. The pri-miRNA is processed into a precursor miRNA (pre-miRNA) stem-loop of approximately 60 nucleotides (nt) in length by the nuclear ribonuclease (RNase) III enzyme Drosha and its partner DiGeorge syndrome critical region gene 8 (DGCR8). Exportin-5 actively transports pre-miRNA into the cytosol, where it is processed by the Dicer RNase III enzyme, together with its partner TAR (HIV) RNA binding protein (TRBP), into mature, 22 nt-long double-strand miRNAs. The RNA strand (in red) is recruited as a single-stranded molecule into the RNA-induced silencing (RISC) effector complex and assembled through processes that are dependent on Dicer and other double-strand RNA-binding domain proteins, as well as on members of the argonaute family. Mature miRNAs then guide the RISC complex to the 3′ untranslated regions (3′-UTRs) of the complementary mRNA targets and repress their expression by several mechanisms: repression of mRNA translation, destabilization of mRNA transcripts through cleavage, deadenylation, and localization in the processing body (P-body), where the miRNA-targeted mRNA can be sequestered from the translational machinery and degraded or stored for subsequent use. Nuclear localization of mature miRNAs has been described as a novel mechanism of action for miRNAs. Scissors indicate the cleavage on pri-miRNA or mRNA. (From Fazi et al, 2008.)

The discovery of the miRNAs suggested that RNAi might be triggered artificially in mammalian cells by synthetic genes that express mimics of endogenous triggers. Indeed, mimics of miRNAs in the form of short hairpin RNAs (shRNAs) have proven to be an invaluable research tool to further our understanding of many biological processes, including carcinogenesis. shRNAs contain a sense strand, antisense strand, and a short loop sequence between the sense and antisense fragments. Because of the complementarity of the sense and antisense fragments in their sequence, such RNA molecules tend to form hairpin-shaped dsRNA. shRNA can be cloned into a DNA expression vector and can be delivered to cells in the same ways devised for delivery of DNA. These constructs then allow ectopic mRNA expression by an associated pol III type promoter. The expressed shRNA is then exported into the cytoplasm where it is processed by dicer into short-interference RNA (siRNA), which then get incorporated into the siRNA RISC. A number of transfection methods are suitable, including transient transfection, stable transfection, and delivery using viruses, with both constitutive and inducible promoter systems.

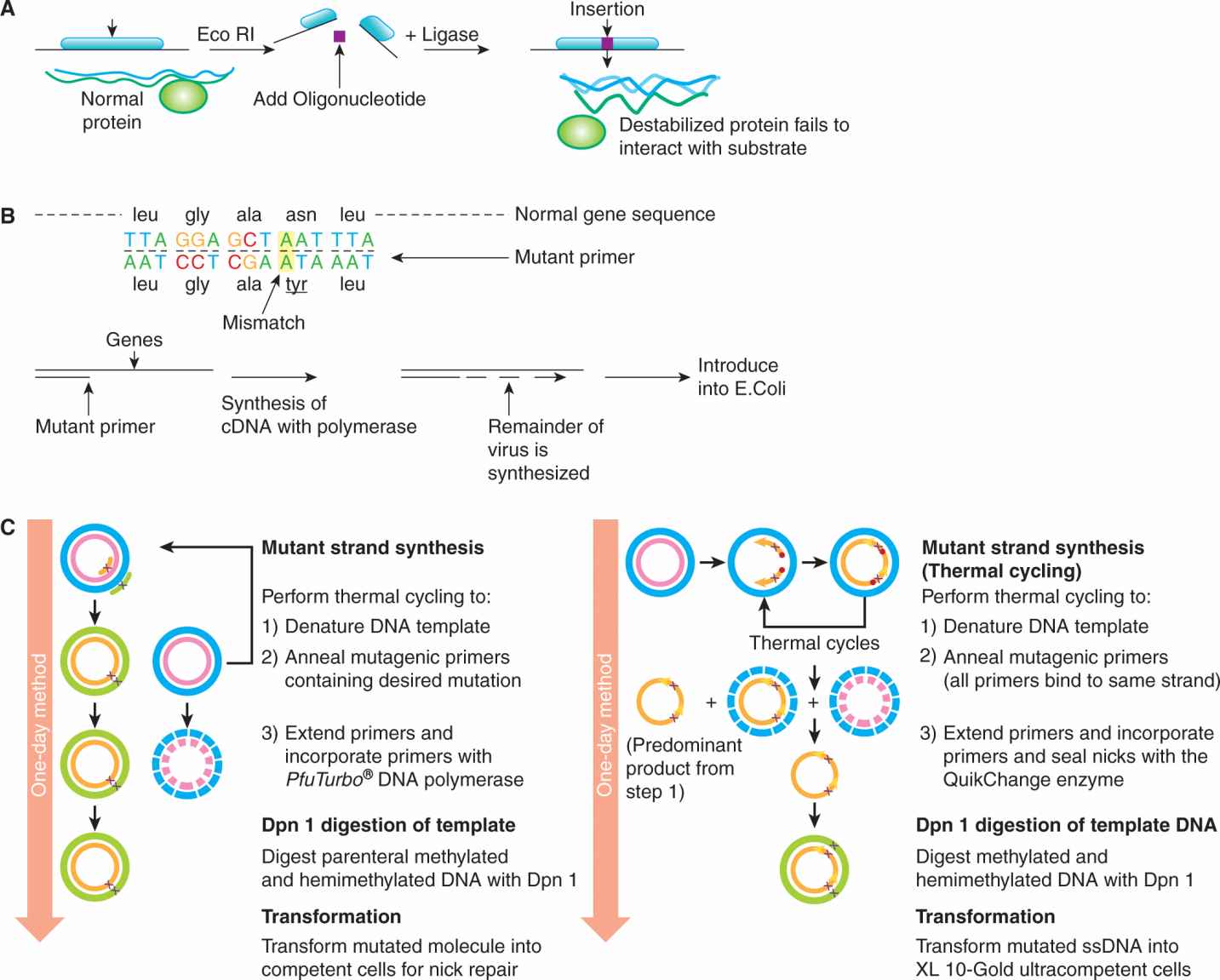

2.4.4 Site-Directed Mutagenesis

Following the sequencing of the human genome (and that of other species), a plethora of genes are being identified without any knowledge of their function. Important clues concerning protein function may be provided through similarity in the amino acid sequence and secondary protein structure to other proteins or protein domains of known function. For example, many transcription-factor proteins have a characteristic motif through which DNA-binding takes place (eg, leucine-zipper or zinc-finger domain; see Chap 8. Sec. 8.2). One way of testing the putative function of such a sequence is to see whether a mutation within the critical site causes loss of function. In the example of transcription factors, a single mutation might result in a protein that failed to bind DNA appropriately. Site-directed mutagenesis permits the introduction of mutations at a precise point in a cloned gene, resulting in specific changes in the amino acid sequence. By site-directed mutagenesis, amino acids can be deleted, altered, or inserted, but for most experiments, the changes do not alter the reading frame and disrupt protein continuity. There are two classical methods of introducing a mutation into a cloned gene (Ausubel and Waggoner, 2003). The first method (Fig. 2–24A) relies on the chance occurrence of a restriction enzyme site in a region one wishes to alter. Typically, the gene is digested with the restriction endonuclease, and a few nucleotides may be inserted or deleted at this site by ligating a small oligonucleotide complementary to the cohesive DNA terminus that remains after enzyme digestion. The second method (Fig. 2–24B) is more versatile but requires more manipulation. The gene is first obtained in a single-stranded form by cloning into a vector such as M13 phage. First, a short oligonucleotide is synthesized containing the desired nucleotide change but otherwise complementary to the region to be mutated. The oligonucleotide will anneal to the single-stranded DNA but contains a mismatch at the site of mutation. The hybridized oligonucleotide-DNA duplex is then exposed to DNA polymerase I (plus the 4 nucleotides and buffers), which will synthesize and extend a complementary strand with perfect homology at every nucleotide except at the site of mismatch in the primer used to initiate DNA synthesis. The double-stranded DNA is then transfected into bacteria in the phage, and because of the semiconservative nature of DNA replication, 50% of the M13 phage produced will contain normal DNA and 50% will contain the DNA with the introduced mutation. Several methods allow easy identification of the mutant M13 virus. Using these techniques, the effects of artificially generated mutations can be studied in cell culture or in transgenic mice (see following section).

FIGURE 2–24 Methods for site-directed mutagenesis. A) Insertion of a new sequence at the site of action of a restriction enzyme by ligating a small oligonucleotide sequence within the reading frame of a gene. B) Use of a primer sequence that is synthesized to contain a mismatch at the desired site of mutagenesis. C) Outline of the PCR-based methodology. (From http://www.biocompare.com/Application-Notes/42126-Fast-And-Efficient-Mutagenesis/.)

More recently, techniques such as whole plasmid mutagenesis that rely on PCR (see Sec. 2.2.5) are often used as this produces a fragment containing the desired mutation in sufficient quantity to be separated from the original, unmutated plasmid by gel electrophoresis, which may then be used with standard recombinant molecular biology techniques. Following plasmid amplification (usually in Escherichia coli), commercially available kits (see Fig. 2–24C) can be used that involve a pair of complementary mutagenic primers that are used to amplify the entire plasmid DNA in a thermocycling reaction using a high-fidelity non–strand-displacing DNA polymerase. The reaction generates a nicked, circular DNA. The template DNA is eliminated by enzymatic digestion with a restriction enzyme such as DpnI, which is specific for methylated DNA, as all the DNA produced from the E. coli vector is methylated; the template plasmid which is biosynthesized in E. coli will therefore be digested, whereas the mutated plasmid is generated in vitro and is therefore unmethylated and left undigested.

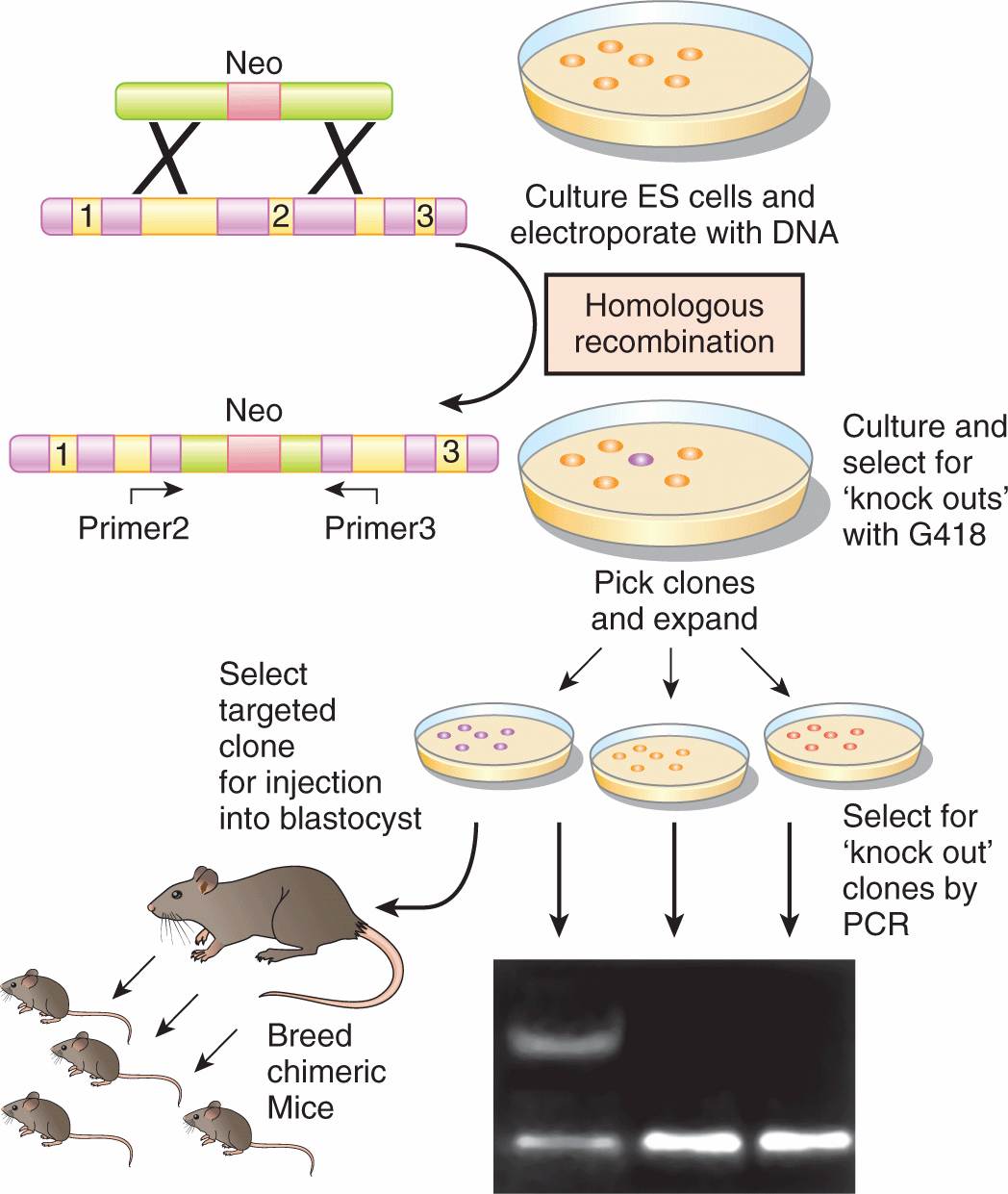

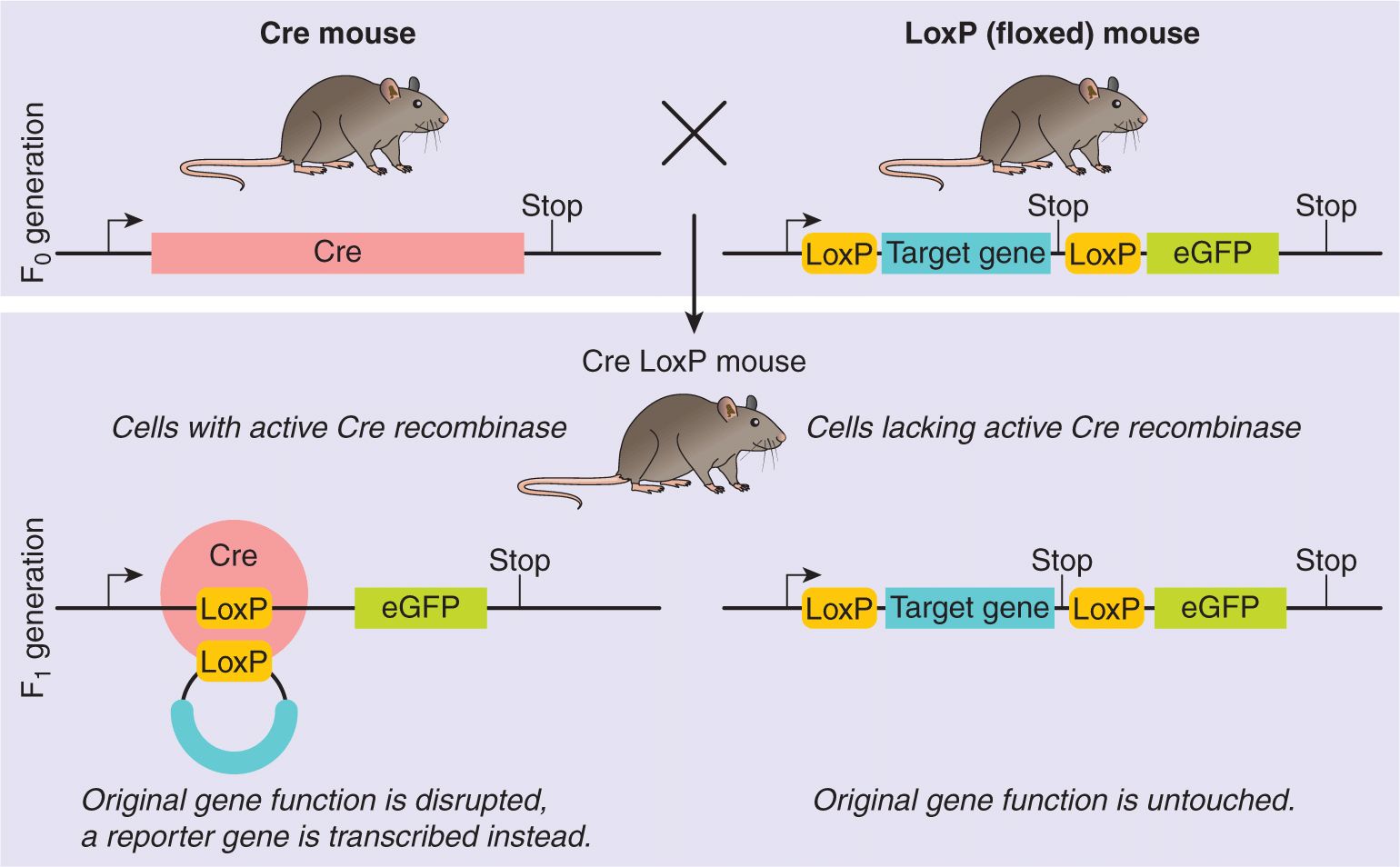

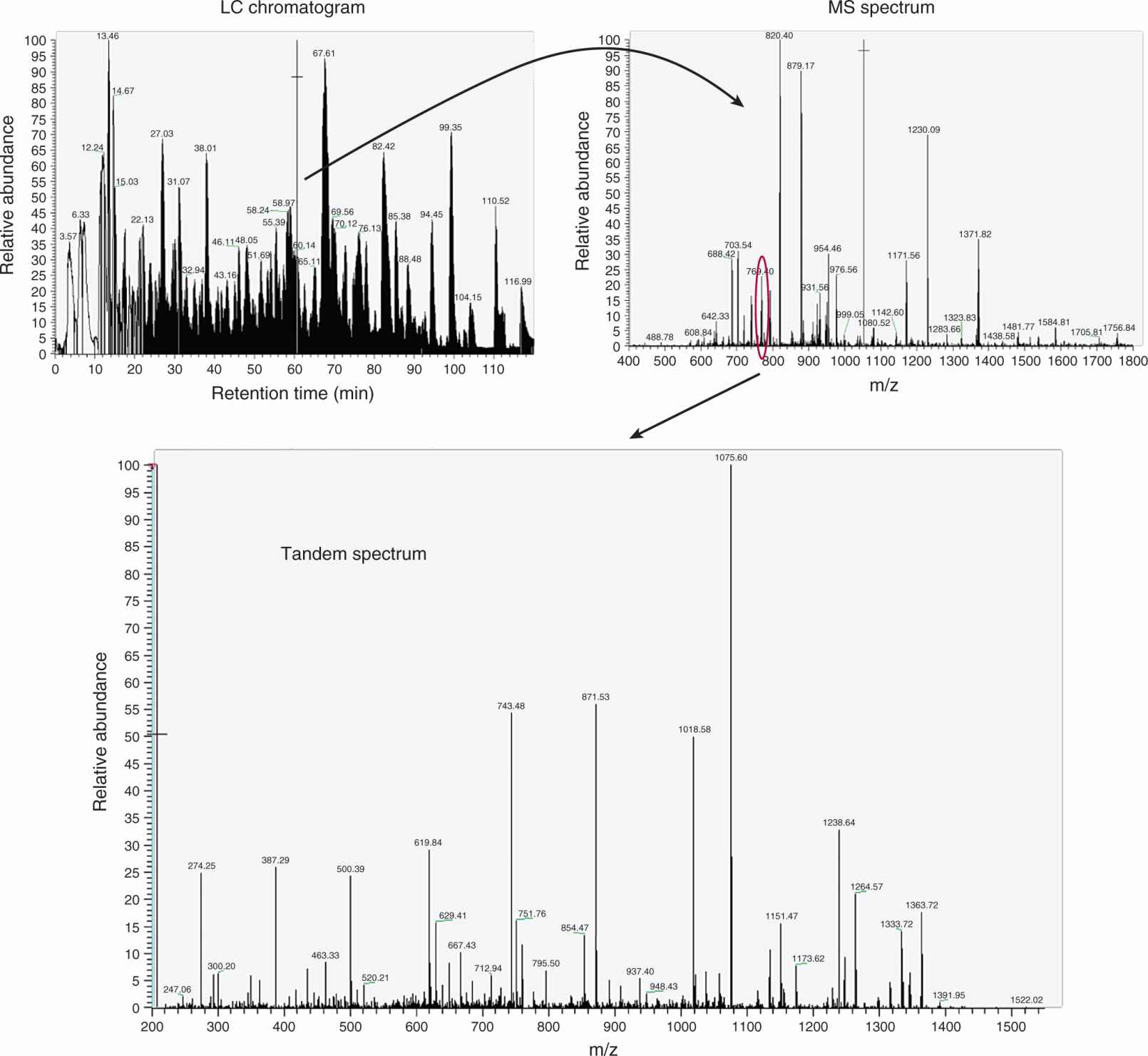

2.4.5 Transgenic and Knockout Mice