Plain Language Summary

The benefits and harms of breast cancer screening have been debated for many years. One reason the debate persists is continuing uncertainty about the magnitude of these benefits and harms. In the past, decision makers have relied on randomized controlled trials (RCTs) to give us the best estimates of the benefit of screening, that is, the breast cancer mortality reduction attributable to screening. However, these RCTs were conducted 20 to 50 years ago. Since then, several important factors have changed: women’s breast cancer risk, screening technology, and breast cancer treatment. These factors could change the benefit of screening. Thus, we need current evidence about the reduction in breast cancer mortality from present-day screening. Because of the time required, the cost, and other inherent limitations of RCTs, this study design is not up to the task of providing this necessary information in a timely and ongoing manner.

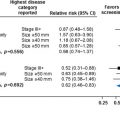

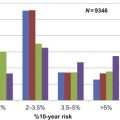

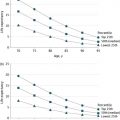

A number of observational studies have recently been conducted to help us better understand the effect of mammography in today’s setting on breast cancer mortality. Unfortunately, these studies also have serious methodological limitations. A systematic review of the best of these studies shows much variation in their results. The best estimate from all of these studies is that current mammography is associated with about a 10% relative reduction in breast cancer mortality, which is less than predicted by the older RCTs. But this estimate is also not conclusive, and is likely to change in the future.

There is a need for an international team of experts to develop standards for higher-quality observational studies so that the effect of screening on breast cancer mortality can be better estimated and monitored over time.

Introduction

To make decisions about whether to start or continue widespread screening programs, and how intensive the screening should be, policy-makers need information about the current benefits and harms of screening. Clinicians need the same information to help their patients make individual decisions about screening. But we live in a constantly changing world, and what we thought we knew before rapidly becomes out of date and less relevant for today’s decisions. Screening programs, like all other facets of health care, need to be evaluated at the beginning, and then constantly monitored as key factors change over time. Programs once regarded as high value (ie, providing benefits that clearly justify the harms and costs) may become lower value as the risk of populations, screening technology, and treatment change.

It is important to require a high level of evidence about benefits and harms before implementing a widespread screening program at a particular intensity, and the same level of evidence to determine, periodically, whether the program should be continued at its current intensity. This high level of evidence is needed for at least three reasons: (1) the benefits of screening are often smaller than many would guess, and they may become even smaller over time; (2) the harms of screening are often larger than many would expect, and may grow over time; and (3) the costs of a full screening program are actually larger than many would suspect, and may increase over time. Screening programs that are implemented or continued without adequate supporting evidence may cause more harm than good and drain resources from other important health priorities.

It has been thought that the highest evidence requirement for a screening program is an RCT of screening. But because of several inherent limitations of RCTs of screening, and because of the need for ongoing monitoring, this chapter will suggest that implementation of a new screening program, and determination of whether to continue an existing program at its current intensity, should require both an RCT of screening and adequate observational evidence to determine that the benefits of a specific screening strategy clearly outweigh the harms and costs (ie, that it is high value). One, or even several, RCTs of screening without appropriate observational evidence from screening implementation are not enough. Further, prior to implementation or continuation, there should also be a determination of the extent to which the screening program at its proposed intensity would reduce the societal burden of the condition, and whether the cost and effort of the program would be more wisely spent in another way that would have a greater effect on reducing societal burden from the same condition or other health problems. The screening programs that should be implemented or continued are the ones that are both high value (ie, provide, for the people screened, benefits that clearly outweigh the harms and costs) and also relieve a substantial part of society’s burden of suffering from the condition.

These suggestions together would lead to a slowing down of implementation of new screening proposals (eg, adjunctive screening for women with dense breasts; changing primary screening from digital mammography to tomosynthesis), and perhaps a rolling back of the intensity of some existing programs. The intent is to increase the certainty that screening as currently practiced or proposed would actually lead to the wise use of resources to improve the health of the public without unduly increasing the harms of intensive health care.

Breast cancer screening has been implemented in many developed countries over the past 50 years, yet questions remain about the value of intensive screening and the extent to which this screening reduces the societal burden of breast cancer, and these questions may even be growing in importance. At least two of the reasons for this ongoing discussion may be an increasing awareness of the harms of screening, and an increasing concern that changes in population risk, screening technology, and treatment may make the results of the multiple screening RCTs less applicable to today. Although investigators have conducted many observational studies to help estimate current benefits and harms, these studies also have multiple methodologic challenges, and have yet to gain the full confidence of decision makers. The time has arrived when we should reexamine whether to continue breast cancer screening at its current level of intensity.

This review will examine the advantages and disadvantages of the older RCTs of breast cancer screening and the more recent observational evidence in assessing the current balance of benefits and harms of breast cancer screening. It will also make suggestions about how we might improve our evidence base to make better decisions about the appropriate intensity of breast cancer screening programs in the future.

Are RCTs of Screening the “Gold Standard”?

This section will briefly review some of the limitations of RCTs of screening. Further details of evidence from RCTs of breast cancer screening are provided in the chapter “Estimates of Screening Benefit: The Randomized Trials of Breast Cancer Screening” of this book. It is important to understand that RCTs of screening are different from RCTs of treatment. Trials of screening start with individuals who have no past diagnosis, symptoms, or signs of the condition in question; they then randomize participants to an invitation to screening or no invitation. Trials of treatment start with people who have the condition and randomize them to treatment “A” or treatment “B.” Trials of treatment tell us relatively little about the benefits and harms of a screening program. This is because the issue of screening is whether earlier treatment conveys better health outcomes than later treatment. That is, the issue is the timing of treatment. The only difference between randomized groups in a screening RCT is that the affected individuals in the invited group are, on average, treated earlier than similar affected individuals in the not-invited group. The treatment strategy is the same in both groups; it is simply instituted earlier in the invited than in the not-invited group. The issue examined by RCTs of treatment is different: is treatment A better than treatment B?

But even RCTs of screening have limitations in providing the right evidence to determine the value of a screening program. First, screening RCTs estimate the benefits of a single screening intensity for a limited amount of time. For example, the Health Insurance Plan RCT in the United States, started in 1963, examined the effect on breast cancer mortality of five annual screening rounds of 1960s-type mammography and careful clinical breast examination (CBE) for women of ages 40–64 years. The study found a statistically significant 23–24% reduction in breast cancer mortality at 18 years of follow-up. This study provides important and necessary evidence that there is a benefit for some women from screening with annual mammography and careful CBE for 5 years. But the implications for policy-makers are still unclear. For example, is there further benefit from screening the same women for a longer duration? What are the physical and psychological harms of such screening? What is the cost of such screening? Would we gain similar benefit from screening every 2 or even 3 years rather than annually? Would we gain similar benefits from starting even earlier at age 30 and continuing even later to age 80? These questions revolve around the more general issue of intensity and value: how does value (ie, the balance between benefits and harms plus costs) change as we vary screening intensity (ie, the starting and stopping ages that define eligibility for screening and the frequency and sensitivity of the screening test)? An RCT of screening does not vary intensity, nor does it adequately assess the real-life harms and costs of screening in the community.

There are further deficiencies in the evidence provided by RCTs of screening. In many cases, participants in screening RCTs are not representative of the larger potentially-eligible population. In addition, the personnel and technology of screening and treatment in RCTs may be very different from those available in most community settings. Another important limitation of screening RCTs for breast cancer is that the trials were mostly conducted in the 1960s, 1970s, and early 1980s. Since then, the risk of developing breast cancer has changed for the population of screening-eligible women, with the rise and fall of postmenopausal hormone treatment, the increased prevalence of obesity, the increased awareness of women about breast lumps, changes in age at menarche and menopause, and changes in age at first pregnancy. Also, the technology of screening has changed, increasing its sensitivity and the cancer detection rate. Perhaps most dramatically, the range and effectiveness of treatment have increased. Thus, it is not clear how applicable the results of these older RCTs are to the present day. It may be, in fact, that the increased sensitivity of screening tools, which find more lesions that are pathologically “breast cancer,” has not reduced mortality more than older, less sensitive mammography, but has instead increased overdiagnosis by finding those “breast cancers” that would never progress to clinically important lesions.

In addition, RCTs rarely provide adequate evidence about the potential harms of screening. Without evidence about harms, calculation of the balance between benefits and harms/costs (ie, the value) of a screening program is impossible. These harms occur throughout the screening process, and can be organized into several categories: physical harms (eg, infection from a breast biopsy), psychological harms (eg, anxiety from a false positive mammogram), financial strain (eg, worry about future anticipated expenses from dealing with a newly detected lesion), opportunity costs (eg, lost opportunities to participate in work or family events due to time needed for testing/treatment or due to distraction from concern over positive screening), or hassles (eg, difficulties in organizing transportation or substitutes for responsibilities). The problem of overdiagnosis, which is a potential problem in nearly every screening program, can have both psychological (eg, labeling) and physical (eg, overtreatment) effects (see also the chapter “Challenges in Understanding and Quantifying Overdiagnosis and Overtreatment” for further details on overdiagnosis). For each of these potential harms, decision makers need information about both the frequency and the severity of the harm. RCTs, whether of screening or of treatment, rarely provide adequate information about these harms.

Thus, the inherent limitations of RCTs of screening include:

- failure to provide comparative evidence about different intensities (ie, frequency, starting and stopping ages, test sensitivity) of screening;
- failure to provide evidence about long-term effects of screening;
- failure to provide adequate evidence about screening harms or costs;
- failure to provide evidence about the benefits and harms of current-day screening;
- failure to provide evidence of the effects of screening in usual, community settings.

Advantages and Problems With Observational Studies

Well-conducted observational studies of various designs can supplement RCTs to supply the evidence we need to make optimal decisions about screening programs. Prospective cohort and ecologic designs have the greatest potential value, and could be conducted within already existing national databases. Linking national databases that reliably capture information on women’s risk characteristics, adherence to a specific screening strategy, types of breast cancers diagnosed, treatment received, and breast cancer deaths, these types of studies can be conducted in an ongoing, monitoring manner, and thus can help us understand changes in mortality as women’s risk, screening technology, and treatment change. Thus, if a country expands screening to a younger or older age group, or changes screening technology or frequency, the effects on incidence, mortality, medical procedures (eg, mastectomy), and even false positive screening tests could be assessed. In addition, prospective cohort and ecologic designs can be used to compare trends in breast cancer mortality among countries with different intensities of screening, thus gaining insight into the value of different starting and stopping ages, different frequencies of screening, and different screening strategies with different sensitivities. These study designs are also much less costly than expensive RCTs, and can provide evidence in a more timely fashion.

Prospective cohort designs are most helpful, as they include individual-level data, linking actual screening behavior with incidence and mortality in the same people. These cohorts are most helpful when they are true inception cohorts, such as birth cohorts, and when they are analyzed in a similar way to the way an RCT would be analyzed. This involves minimizing selection bias by including only participants meeting strict eligibility criteria: enrolling only people with similar risk for incidence and mortality, excluding people at the start who do not represent the typical risk profile of the population of interest. Birth cohorts are a particularly good choice as all are exposed similarly to risk-changing external factors over time. Because prospective cohort studies can collect information on various potential confounding factors (ie, factors that are unbalanced between comparison groups, that may explain mortality differences that would otherwise be incorrectly ascribed to screening) over time, they can adjust for these confounders in the analysis, thus minimizing selection bias. Prospective cohort studies also collect data over time on both exposure (ie, particular intensities of screening) and outcome (ie, cancer incidence and mortality) in uniform ways, and thus measurement can be more accurate and reliable, and data can be collected equally between more-intensive and less-intensive screening groups. Prospective cohort studies can also minimize bias and imprecision in assessment of outcomes by using committees blinded to exposure to impose strict criteria for such outcomes as mortality due to breast cancer. Because prospective cohort studies can include large numbers of people and continue over long periods of time, they can examine longer-term effects of screening programs and provide more precise estimates. 
Finally, prospective cohort studies examine screening as practiced in the community in the current time period, making their results more applicable to the real population and to present-day decision making.
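
The adjustment for confounders described above can be illustrated with a small sketch. The counts are entirely hypothetical (they are not taken from any study), and the Mantel-Haenszel stratified risk ratio is used only as one standard adjustment method, not as the method of any particular study discussed in this chapter. In this made-up cohort, lower-risk women are screened more often than higher-risk women, so the crude comparison exaggerates the apparent benefit of screening.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Stratum:
    """Breast cancer deaths and cohort sizes within one baseline-risk stratum."""
    deaths_screened: int
    women_screened: int
    deaths_unscreened: int
    women_unscreened: int


def crude_risk_ratio(strata: Sequence[Stratum]) -> float:
    """Risk ratio (screened vs unscreened) ignoring the stratifying factor."""
    deaths_s = sum(s.deaths_screened for s in strata)
    women_s = sum(s.women_screened for s in strata)
    deaths_u = sum(s.deaths_unscreened for s in strata)
    women_u = sum(s.women_unscreened for s in strata)
    return (deaths_s / women_s) / (deaths_u / women_u)


def mantel_haenszel_risk_ratio(strata: Sequence[Stratum]) -> float:
    """Mantel-Haenszel risk ratio, pooling the stratum-specific comparisons."""
    numerator = 0.0
    denominator = 0.0
    for s in strata:
        total = s.women_screened + s.women_unscreened
        numerator += s.deaths_screened * s.women_unscreened / total
        denominator += s.deaths_unscreened * s.women_screened / total
    return numerator / denominator


# HYPOTHETICAL counts, chosen only to make the arithmetic easy to follow.
HYPOTHETICAL_STRATA = [
    Stratum(16, 8000, 5, 2000),    # lower-risk women, mostly screened
    Stratum(16, 2000, 80, 8000),   # higher-risk women, mostly unscreened
]

if __name__ == "__main__":
    print(f"crude RR:    {crude_risk_ratio(HYPOTHETICAL_STRATA):.2f}")
    print(f"adjusted RR: {mantel_haenszel_risk_ratio(HYPOTHETICAL_STRATA):.2f}")
```

With these invented counts, the crude risk ratio is about 0.38, whereas the ratio within each risk stratum, and the stratified estimate, is 0.80. The gap arises only because screening uptake differs by baseline risk. Adjustment of this kind can address only those confounders that a study actually measures.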

Ecologic studies have many of the same advantages as prospective cohorts, except they do not gather individual-level data, and thus open themselves up to the “ecologic fallacy” of drawing conclusions only indirectly on population incidence and mortality from individual screening. They are also more subject to selection bias than prospective cohort studies, as statistical adjustment for potential confounding factors can only be done on a population rather than an individual basis. Finally, measurement bias is also potentially more problematic in ecologic studies than in prospective cohorts because assessment of exposure, potential confounders, and outcome is on a population basis, which is potentially more prone to systematic and random error than individual data would be. Ecologic studies, however, do capture the final result we all care most about: the health of the general public. Whatever the screening or other health program, if it doesn’t have an effect on the health of the public on a population level, then it can hardly be said to have succeeded. To the extent that those aspects of health most important to the people involved can be and are assessed by ecologic studies, these studies may more rightly be considered the end result of a chain of evidence, the chain being the real “gold standard.”

There are important problems, however, with prospective cohort and ecologic studies. Some, related to “internal validity” (risk of bias), are noted above. Selection bias, measurement bias, and confounding are large challenges that are not easily solved. In general, the risk of bias of these studies is greater than that of well-conducted RCTs. If well conducted, however, prospective cohort and ecologic studies can still provide more applicable and current information for decision makers. Beyond risk of bias, prospective cohorts and ecologic studies are subject to variation in design features and statistical analysis that makes interpretation difficult and produces conflicting results. Among the design problems is the omission of information on potentially important confounding variables (eg, use of postmenopausal hormone treatment or change in body mass index) or on accessibility of effective treatment (eg, tamoxifen). Thus, differences in mortality between groups screened at different intensities may reflect factors other than screening.

Among the statistical analysis problems are studies that include adjustment for lead time and for actual (vs invited) screening. These adjustments tend to increase the estimated mortality reduction from more intensive screening, but can be criticized as overadjustment (in the case of lead time) or as addressing a separate question (in the case of actual screening). If a prospective cohort study uses an inception cohort over a lengthy time period, collecting cumulative data on breast cancer incidence and mortality between groups screened more versus less intensively, then adjustment for lead time should not be needed. Likewise, adjustment for actual screening is asking a question for an ideal rather than the real world—what would the mortality reduction be if everyone invited to screening and no one not invited actually underwent screening? Decision makers need an answer to a different question: what would the mortality reduction be in the real world (where some invited people are not screened and some not invited people are) if this screening program at the studied intensity were to be implemented? Adjusting mortality differences to reflect an idealized world answers the wrong question and is unwarranted. Even so, one must realize that screening in the “not-invited” control group in observational studies has the potential to underestimate the effect of instituting a new screening program (or modifying an older program) compared with a totally unscreened area.
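
The difference between the “real world” and the “idealized” questions can be made concrete with a minimal sketch. It rests on a strong simplifying assumption that is often not true in practice: women who attend screening and women who do not have the same underlying breast cancer mortality risk. The function name and all input values are hypothetical, chosen only for illustration.

```python
def invited_vs_not_invited_rr(
    rr_if_screened: float,
    attendance_invited: float,
    screening_in_not_invited: float,
) -> float:
    """Mortality ratio comparing the invited group with the not-invited group.

    rr_if_screened: hypothetical mortality ratio for a woman who is actually
        screened, relative to the same woman unscreened.
    attendance_invited: fraction of invited women who are actually screened.
    screening_in_not_invited: fraction of not-invited women who are screened anyway.

    ASSUMPTION: attenders and non-attenders share the same baseline risk.
    """
    for value in (rr_if_screened, attendance_invited, screening_in_not_invited):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all inputs must lie between 0 and 1")
    reduction = 1.0 - rr_if_screened
    # Mortality in each group, expressed relative to a fully unscreened group.
    mortality_invited = 1.0 - attendance_invited * reduction
    mortality_not_invited = 1.0 - screening_in_not_invited * reduction
    return mortality_invited / mortality_not_invited


if __name__ == "__main__":
    # HYPOTHETICAL inputs: 30% reduction if actually screened,
    # 75% attendance among invited women, 25% screening among not-invited women.
    print(f"real world:            {invited_vs_not_invited_rr(0.7, 0.75, 0.25):.2f}")
    print(f"unscreened comparison: {invited_vs_not_invited_rr(0.7, 0.75, 0.0):.2f}")
    print(f"idealized:             {invited_vs_not_invited_rr(0.7, 1.0, 0.0):.2f}")
```

Under these invented inputs the invited versus not-invited ratio is about 0.84. It would be about 0.78 against a totally unscreened comparison group, and 0.70 in the idealized case of full attendance and no screening among those not invited. This mirrors the two points made above: adjusting for actual screening moves the estimate toward the idealized figure, while screening in the not-invited group pulls the observed effect toward no difference.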

One further design problem should be addressed. In considering changes in intensity of screening—including changes in starting or stopping ages, changes in screening frequency, or changes in the sensitivity of screening strategies—observational studies may sometimes focus on intermediate rather than health outcomes. These include changes in interval cancers or in stage of diagnosis rather than breast cancer mortality. The validity of these intermediate outcomes, however, depends entirely on the extent to which they accurately predict health outcomes. Even more difficult is that the change in intermediate outcomes must predict not only the direction but also the magnitude of change in the health outcome. It is important to remember that policy-makers must weigh the magnitude of benefits (ie, breast cancer mortality reduction) against the magnitude of harms and costs in arriving at a fair assessment of the value of a particular screening intensity. Because large observational studies may provide an assessment of only the direction rather than the magnitude of health benefit, and because large observational studies may not adequately assess harms and costs, they must be interpreted with caution. Our current understanding of prognostic factors for breast cancer is incomplete; one should resist the temptation to overinterpret changes in stage at diagnosis or interval cancers as providing adequate evidence for a large magnitude of reduction in breast cancer morbidity or mortality. Overinterpretation of these studies would be an error.
