Introduction

What makes one an effective user of the clinical laboratory?

Such a user:

- Identifies at least one sympathetic ally in the clinical laboratory to whom one can reach out for advice about laboratory testing. None of us can be expert in all the areas we need to know for the best possible care of patients, so including a laboratory expert as an ad hoc member of the care team should be a best practice for all clinicians.

- Is at least somewhat conversant with the language of the clinical laboratory to make it easier to communicate with laboratory experts, as well as to understand key articles published in the clinical laboratory literature.

- Understands that diagnostic testing, like so much of medicine, is “playing the odds,” and has enough awareness of basic probability theory and statistics to know: (1) when and when not to order laboratory tests and (2) how confident to be about a positive or negative result.

- Has insight into how laboratory tests are validated (for quality purposes).

- Knows enough about laboratory methodology to decide which test is most appropriate for the patient.

- Is aware of test limitations and possible sources of error (many of which can occur even before the sample is actually tested).

- Accepts the reality that different versions/kits/brands of the same test can give markedly different results, and adjusts diagnostic strategy accordingly.

- Recognizes the limitations and statistical fuzziness of all reference intervals, particularly those in pediatrics, and interprets results carefully with this inexactitude in mind.

Collaboration among clinicians and laboratorians

Even with a laboratory test as seemingly simple as thyroid-stimulating hormone (TSH), a collaborative relationship between an endocrinologist and a clinical laboratory expert can be mutually beneficial. An experienced clinician may be well aware of the significant circadian rhythm of this hormone but may not know how much the magnitude of this daily variation differs between individuals, or how much TSH results can vary among different assays. The laboratorian, in turn, may be intimately familiar with how often interfering antibodies lead to falsely elevated results but may not realize how common it is to see teenage patients with congenital hypothyroidism who have both high TSH and high free thyroxine (T4) because of last-minute double-dosing after months of chronic noncompliance. A clinical laboratory expert can explain how heterophilic antibody interference differs from TSH autoantibody interference (we will cover this later), and which diagnostic maneuvers make the most sense given the clinical scenario presented by the clinician. Arranging regular clinician-laboratorian communication takes time, but patients without a doubt benefit tremendously from what these experts can teach each other.

Learning to speak (some of) the language of the laboratory

The language used in the clinical laboratory can be a barrier to learning. “Labspeak” is not quite a foreign language but represents a dialect with many terms unfamiliar to clinicians. Imagine the following conversation:

Clinician: “The results for test X on this patient seem really different between laboratory Y and your laboratory.”

Laboratorian: “I agree with you that these two results show more discordance than I’d expect from normal analytical and biological variation because the total allowable error is only 15% even though we’re down near the LOQ. The assay QC looks fine, so we should check for potential preanalytical issues and consider possible heterophilic antibody interference as well. And let’s check the platforms used, because the assays for this analyte are definitely not standardized or even harmonized to date.”

Although those working in the clinical laboratory should know better than to speak like this to a clinician (especially using acronyms like LOQ), the exchange above is perfectly plausible between two laboratorians and might in fact be the most concise way to convey the key points of the investigation to follow. Knowing even a few of the commonly used laboratory terms shown in Table 4.1 can help bridge the communication gap and will certainly help a clinician better understand key articles in useful journals such as Clinical Chemistry.

| Term and/or Concept | Associated Acronyms | Pertinent Section |
|---|---|---|
| Aliquot | | 2 |
| Analyte | | 2 |
| Calibrator/calibration | | 7 |
| Carryover | | 4 |
| Chromatography | LC, HPLC, UPLC | 5 |
| Competitive immunoassay | RIA, EIA, CIA | 5 |
| Extraction | | 5 |
| Harmonization | | 7 |
| Heterophilic antibody | HAMA, HAAA | 6 |
| Immunometric assay | IRMA, ICMA, IFMA, ELISA | 5 |
| Interferences | | 6 |
| Limit of quantitation (vs. limit of detection) | LOQ (vs. LOD) | 4 |
| Linearity | | 4 |
| Mass spectrometry | MS, GC-MS, LC-MS/MS | 5 |
| Matrix | | 4 |
| Method comparison | | 4 |
| Platform | | 2 |
| Positive (vs. negative) predictive value | PPV (vs. NPV) | 3 |
| Preanalytical | | 6 |
| Precision | | 4 |
| Receiver-operating characteristic curve | ROC curve | 3 |
| Recovery | | 4 |
| Reportable range | | 4 |
| Sensitivity & specificity (analytical) | | 4 |
| Sensitivity & specificity (clinical) | | 3 |
| Stability | | 4 |
| Standardization | | 4 |
| Validation (analytical) | | 7 |

Many of these terms will be defined in sidebars in the appropriate section, but there are a few general ones that are worth mentioning right away:

- “Analyte” is a very common word in laboratory medicine, simple in concept yet unfamiliar to most clinical ears. It is a generic term for “the thing being measured/analyzed.” Feeling comfortable with this word will make it much easier to talk with the laboratory and to search the pertinent laboratory literature.

- “Aliquots” are smaller portions of a sample, prepared from the original, or “mother,” tube. An aliquot can be sent to another laboratory for corroboration, or a “fresh aliquot” can be used to repeat the test if you suspect that the original has undergone too many freeze-thaw cycles or may have been contaminated.

- “Platform” is a general, albeit somewhat ambiguous, term most often used to describe the manufacturer and model of an automated testing instrument, for example, the Beckman Access versus the Roche Elecsys. Why does this matter to the clinician? Because platforms differ in their performance characteristics and vulnerability to interferences. We will see later that comparing results for the same sample on two different platforms is sometimes the fastest way to investigate certain types of interference. A clinician faced with an unexpected result should know to ask the laboratory whether that result can be corroborated “on a different platform” when appropriate.

Laboratory statistics: the basics of evidence-based diagnosis

Biostatistics and epidemiology are often taught during the early preclinical years of training, when students are eager to gain clinical experience and are sometimes dismissive of what seems like more didactic study. Yet both experienced clinicians and those involved in clinical research quickly realize how important it is to have at least a basic awareness of medical statistics to avoid making significant errors in diagnostic or treatment decisions.

If you call your laboratory asking about the sensitivity and specificity of a test, you will be asked whether you want “clinical sensitivity and specificity” (covered here) or the completely different “analytical sensitivity and specificity” (discussed in the following methodology/validation section). The clinical laboratory will certainly have data on the latter but likely only limited studies on the former, because establishing clinical sensitivity and specificity typically requires substantial clinical studies beyond the reach of the clinical laboratory.

- “Clinical sensitivity” is the proportion of patients with the disease being tested for in whom the test is positive. An excellent mnemonic (useful for examinations) is to think of “positive in disease” as “PID” and consider how important it is to be “sensitive” when you have a patient with clinical PID (pelvic inflammatory disease).

- “Clinical specificity” is the proportion of “healthy” patients (at least, patients who do not have the disease being tested for) in whom the test is negative. The mnemonic in this case is “negative in health,” or “NIH”: consider how important it is to be very “specific” when writing an NIH grant proposal.
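Both definitions reduce to simple proportions from a 2 × 2 table of test result versus true disease status. The minimal Python sketch below uses made-up counts (not data from any study) to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Counts from a hypothetical diagnostic-accuracy study (made-up numbers)."""
    true_pos: int   # test positive, disease present
    false_neg: int  # test negative, disease present
    false_pos: int  # test positive, disease absent
    true_neg: int   # test negative, disease absent

    def sensitivity(self) -> float:
        # "Positive in disease": fraction of patients with the disease who test positive
        return self.true_pos / (self.true_pos + self.false_neg)

    def specificity(self) -> float:
        # "Negative in health": fraction of patients without the disease who test negative
        return self.true_neg / (self.true_neg + self.false_pos)


study = TwoByTwo(true_pos=90, false_neg=10, false_pos=30, true_neg=870)
print(f"sensitivity = {study.sensitivity():.2f}")  # 90 / 100 = 0.90
print(f"specificity = {study.specificity():.3f}")  # 870 / 900 = 0.967
```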

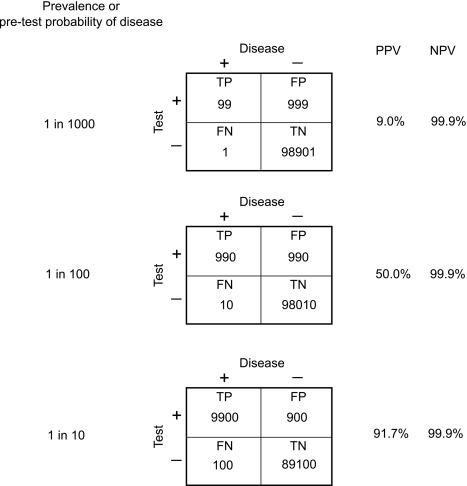

Perhaps more relevant to clinical practice are the concepts of “positive predictive value” (PPV) and “negative predictive value” (NPV):

- PPV is the probability of disease in a patient with a positive test result.

- NPV is the probability of “health” (nondisease) in a patient with a negative test result.

Both NPV and PPV are affected by the underlying prevalence of disease or, more precisely, by the probability that the patient in question has the disease (“pretest probability”). Fig. 4.1 summarizes the definitions of these terms, whereas Fig. 4.2 demonstrates the dramatic effect of increasing pretest probability on the PPV of a diagnostic test, an effect that underscores the importance of a good history and physical examination before deciding on laboratory test ordering. The best way to improve diagnostic test performance is to be as certain as possible about the diagnosis even before ordering the test!
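The dependence of PPV and NPV on pretest probability follows directly from Bayes' theorem. As a minimal sketch, the function below takes an assumed sensitivity and specificity (90% and 95% here, chosen only for illustration) and shows how PPV climbs as pretest probability rises.

```python
def predictive_values(sensitivity: float, specificity: float,
                      pretest: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a given pretest probability of disease (Bayes' theorem)."""
    tp = sensitivity * pretest              # true positives per patient tested
    fn = (1 - sensitivity) * pretest        # false negatives
    fp = (1 - specificity) * (1 - pretest)  # false positives
    tn = specificity * (1 - pretest)        # true negatives
    return tp / (tp + fp), tn / (tn + fn)


# Hypothetical test: 90% sensitive, 95% specific
for pretest in (0.01, 0.10, 0.50):
    ppv, npv = predictive_values(0.90, 0.95, pretest)
    print(f"pretest {pretest:.0%}: PPV {ppv:.0%}, NPV {npv:.1%}")
```

With these assumed figures, the PPV rises from about 15% at a 1% pretest probability to about 67% at 10% and 95% at 50%, while the NPV drifts down from 99.9% to 90.5%: the same test result means very different things depending on who is tested.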

In clinical practice, most endocrine laboratory tests give continuous rather than “yes/no” results and are therefore rarely used as strict positive/negative tests. TSH values of 6.0 mU/L and 60 mU/L are both “positive” (abnormal), but neither value is likely to be chosen as the diagnostic cutoff for primary hypothyroidism. Using a cutoff of 6.0 mU/L would ensure that virtually all patients with primary hypothyroidism are detected (maximum sensitivity) but at the expense of many false positives (very poor specificity) and unnecessary referrals to the endocrine clinic. On the other hand, using a cutoff of 60 mU/L would minimize the number of false positives (excellent specificity) but at the expense of missing many true cases of primary hypothyroidism (unacceptably low sensitivity). The choice of a cutoff somewhere between these two extremes should be determined by the clinical scenario (e.g., perhaps lower in a 14-month-old infant than in an obese but otherwise healthy 13-year-old) rather than by an arbitrary universal threshold.
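The cutoff trade-off can be made concrete by counting how many results fall on each side of a candidate threshold. The TSH values below are invented for illustration and do not come from any patient series; a result is called “positive” when TSH is at or above the cutoff.

```python
# Invented TSH results (mU/L) for illustration only; not real patient data
hypothyroid = [7.5, 12.0, 18.0, 35.0, 64.0, 110.0]
euthyroid = [1.2, 2.5, 3.8, 4.9, 6.3, 8.1]


def sens_spec_at_cutoff(cutoff: float, diseased: list[float],
                        healthy: list[float]) -> tuple[float, float]:
    """Sensitivity and specificity when TSH >= cutoff is called positive."""
    sens = sum(x >= cutoff for x in diseased) / len(diseased)
    spec = sum(x < cutoff for x in healthy) / len(healthy)
    return sens, spec


for cutoff in (6.0, 10.0, 60.0):
    sens, spec = sens_spec_at_cutoff(cutoff, hypothyroid, euthyroid)
    print(f"cutoff {cutoff:5.1f} mU/L: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

In this toy data set the 6.0 mU/L cutoff catches every hypothyroid subject but misclassifies two of six euthyroid subjects (specificity 0.67), whereas 60 mU/L produces no false positives but misses four of six true cases (sensitivity 0.33).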

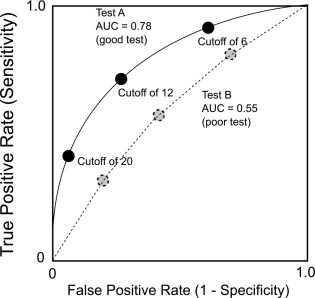

For tests that generate continuous results, the overall diagnostic effectiveness of a test can also be evaluated by plotting the true positive rate against the false positive rate across a range of cutoffs, producing a receiver-operating characteristic (ROC) curve.

- An ROC curve plots, for a range of cutoff values, the true positive rate (clinical sensitivity) of a test on the y-axis against the false positive rate (1 − clinical specificity) on the x-axis (Fig. 4.3). The area under the curve (AUC) estimates the ability of the test to distinguish disease from nondisease: an AUC of 0.50 indicates a test without diagnostic value, an AUC of 0.90 or more is generally considered excellent, and an AUC of about 0.70 is considered fair.

Fig. 4.3

Receiver-operating characteristic curves for two theoretical diagnostic tests.
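The AUC has a convenient interpretation: it equals the probability that a randomly chosen patient with the disease has a higher test result than a randomly chosen patient without it (the Mann-Whitney statistic). The sketch below computes the empirical AUC that way from invented data; it is illustrative only, and a formal ROC analysis would normally also report confidence intervals.

```python
def roc_auc(diseased: list[float], healthy: list[float]) -> float:
    """Empirical ROC AUC via pairwise comparison (Mann-Whitney form).

    Counts, over every diseased/healthy pair, how often the diseased result
    is higher; ties count as half. Equals the area under the empirical ROC curve.
    """
    score = 0.0
    for d in diseased:
        for h in healthy:
            if d > h:
                score += 1.0
            elif d == h:
                score += 0.5
    return score / (len(diseased) * len(healthy))


# Invented values, for illustration only
diseased = [7.5, 12.0, 18.0, 35.0, 64.0, 110.0]
healthy = [1.2, 2.5, 3.8, 4.9, 6.3, 8.1]
print(f"AUC = {roc_auc(diseased, healthy):.2f}")     # 35/36, about 0.97
print(f"AUC = {roc_auc([1, 2, 3], [1, 2, 3]):.2f}")  # identical distributions: 0.50
```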

Two important caveats for evidence-based diagnosis:

(1) Calculating the clinical sensitivity and specificity of a diagnostic test depends on a clear definition of who has the disease in question and who does not. If there is no gold-standard diagnostic test for comparison, or if the definition of the disease evolves and becomes less clear-cut over time (e.g., early definitions of severe, anatomically proven growth hormone deficiency [GHD] versus later, less well-defined cases of GHD), estimates of sensitivity and specificity may be approximate at best.

(2) Sensitivity, specificity, and the numbers of true and false positive and negative results will vary depending on the nature of the population being studied. For example, a very good diagnostic test applied across the entire population of the United States will yield a far higher proportion of false-positive results (as is seen with screening tests) than the same test applied to a carefully selected patient group deemed likely to have the disease in question on the basis of history and physical examination. This again emphasizes the need to increase the pretest probability of disease as much as possible before ordering any diagnostic testing.

Analytical validation

Clinical Scenario 1

An investigator inadvertently runs two tubes containing nothing but water in a classic peptide radioimmunoassay (RIA) and is nonplussed when the results show a significant level of the peptide in these tubes.

Clinical Scenario 2

A laboratory declines to run a tumor marker immunoassay ordered on viscous cyst fluid because of a lack of analytic validation data but relents when the physician insists that the laboratory run the sample with a disclaimer “nonvalidated sample type; interpret with caution.” Despite the disclaimer, the laboratory and the clinician are later both sued successfully for inappropriate diagnosis and unnecessary treatments based on what turns out to be a falsely positive result.

Both of these brief clinical vignettes illustrate why regulatory agencies, and anyone concerned with quality laboratory testing, place such emphasis on analytic method validation. A peptide RIA may give accurate results in serum but totally inaccurate results in a protein-free fluid; perhaps the tracer (see Methodology: Immunoassays) in scenario 1 stuck to the sides of the plastic tube, decreasing tracer binding to the antibody and producing an apparently detectable level of peptide where none was actually present. Scenario 2 represents a misdiagnosis: the viscosity of the fluid may have affected the interaction of the assay components, with substantial impact on the patient and legal consequences for all involved.

Analytical validation is meant to ensure that an assay method is accurate for its intended use. Components of an analytical validation include the following: (1) linearity/reportable range; (2) precision; (3) analytical sensitivity; (4) analytic specificity, interferences, and recovery; (5) accuracy/method comparison; (6) sample types and matrix effects; (7) stability; and (8) carryover. Determining reference intervals is an important part of many analytic validations and crosses over to clinical validation. Note that even though not all of these components are always required from a regulatory point of view, all represent good-quality laboratory practice.

Linearity/Reportable Range

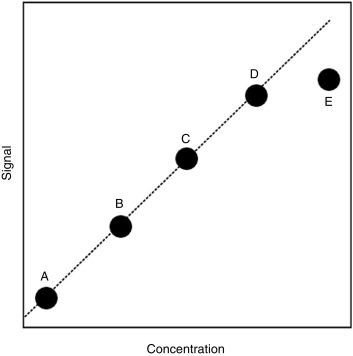

Also referred to as the analytic measurement range (AMR), this is the range of concentrations over which the assay is known to be reliable. Standards of known concentration (calibrators) are assayed, and the signal generated is plotted against concentration. For the hypothetical study shown in Fig. 4.4, the upper limit of the AMR would likely be set at the concentration represented by calibrator D, because the higher concentration represented by calibrator E does not produce a correspondingly greater signal. However, a sample whose concentration exceeds the upper limit of the AMR can often be diluted so that the measurement falls within the AMR, with the result then multiplied by the dilution factor. The choice of calibrator may alter the absolute value reported, particularly for peptides and proteins, where the standard may represent only one of a mixture of differentially modified (e.g., glycosylated or cleaved) forms present in the circulation.
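The dilution step amounts to a simple rule: a result is reportable only if the value actually measured (after dilution) lies within the AMR, and it is then multiplied by the dilution factor. The sketch below assumes hypothetical AMR limits of 1 to 100 units; real limits, and whether a given analyte dilutes linearly at all, come from the laboratory's own validation.

```python
def report_result(measured: float, dilution_factor: float,
                  amr_low: float, amr_high: float) -> str:
    """Format a result, applying the dilution factor only when the measured
    (diluted) value lies within the analytic measurement range (AMR)."""
    if measured > amr_high:
        # Above the AMR: report as "greater than" and flag for further dilution
        return f">{amr_high * dilution_factor:g} (above AMR; dilute and re-assay)"
    if measured < amr_low:
        return f"<{amr_low * dilution_factor:g} (below AMR)"
    return f"{measured * dilution_factor:g}"


# Hypothetical AMR of 1-100 units
print(report_result(130, 1, 1, 100))  # undiluted sample exceeds the AMR
print(report_result(45, 10, 1, 100))  # 1:10 dilution reads 45, reported as 450
```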

Precision

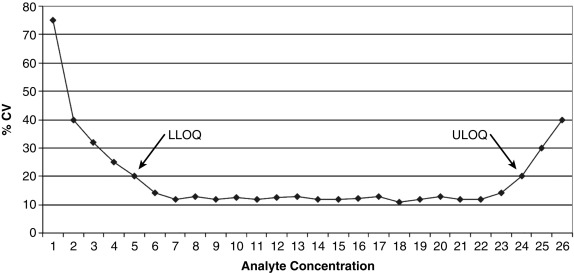

Also known as reproducibility or replicability, precision determines whether the random error of the assay is small enough for the assay to be clinically useful. A commonly used analogy is that of the target shooter: how close together are the bullet holes? Note that precision is distinct from accuracy (a different part of the validation study); precision addresses only whether the shots are close together, not whether they actually hit the bull’s-eye. Both intraassay (e.g., 20 measurements done in the same assay run) and interassay (e.g., 1 measurement done daily for 20 days) precision are studied, and the standard deviations (SDs) of the replicate measurements are calculated. Precision is generally presented as the coefficient of variation (CV), which is the SD divided by the mean, expressed as a percentage. For example, at a mean value of 100 ng/mL, an assay with an SD of 5 ng/mL would have a CV of 5%. Note that precision depends on analyte concentration: CVs tend to be higher toward either limit of the AMR (Fig. 4.5).
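The CV calculation itself is short. The sketch below uses Python's standard `statistics` module and invented control results; only 5 replicates are shown for brevity, whereas the text describes 20.

```python
import statistics


def cv_percent(replicates: list[float]) -> float:
    """Coefficient of variation: sample SD divided by the mean, as a percentage."""
    return statistics.stdev(replicates) / statistics.mean(replicates) * 100


# Invented results (ng/mL) for one control sample
intra_run = [95.0, 100.0, 105.0, 100.0, 100.0]  # replicates within a single run
day_to_day = [92.0, 104.0, 99.0, 108.0, 97.0]   # one result per day

print(f"intraassay CV: {cv_percent(intra_run):.1f}%")   # SD 3.54 at mean 100
print(f"interassay CV: {cv_percent(day_to_day):.1f}%")  # SD 6.20 at mean 100
```

In this invented example the interassay CV is larger than the intraassay CV, as is commonly the case, because separate runs add sources of variation that a single run does not.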

Analytic Sensitivity

This part of a validation study determines how low an analyte concentration can be measured with acceptable precision. It is distinct from clinical sensitivity (how often a result is positive in a patient with disease), which is assessed as part of clinical validation, well after the analytic validation has been completed. A frequent source of confusion is the use of many different terms for analytic sensitivity, for example, minimal detectable concentration, limit of detection (LOD), limit of blank, or limit of absence, all of which describe the lowest concentration that can be confidently distinguished from zero. Although an assay developer might cite the LOD to make the assay look as sensitive as possible, the clinician should realize that values down near the LOD are highly variable and not truly quantitative. A more conservative and clinically useful limit is the limit of quantitation (LOQ), also known as functional sensitivity and typically defined as the lowest concentration that can be measured with a CV of less than 20%. Even this amount of variability is not trivial: if a testosterone assay has a CV of 20% at its LOQ of 30 ng/dL (an SD of about 6 ng/dL), the same sample run on two different occasions could yield a result of less than 30 ng/dL on one and 36 ng/dL on the other.
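Functional sensitivity is usually read off a precision profile (CV plotted against concentration). The sketch below assumes an invented profile and the 20% CV criterion described above, taking the LOQ as the lowest tested concentration at and above which every CV is under 20%; it then reproduces the testosterone arithmetic.

```python
# Invented precision profile: concentration (ng/dL) -> CV (%) from replicate testing
precision_profile = {5: 48.0, 10: 33.0, 20: 24.0, 30: 19.0, 50: 11.0, 100: 6.0}


def functional_sensitivity(profile: dict[float, float], max_cv: float = 20.0):
    """Lowest tested concentration at and above which every CV is below max_cv."""
    loq = None
    for conc in sorted(profile, reverse=True):  # walk down from the highest level
        if profile[conc] < max_cv:
            loq = conc
        else:
            break  # first unacceptable CV: stop descending
    return loq


loq = functional_sensitivity(precision_profile)
sd = loq * 20 / 100  # SD at the LOQ when CV = 20%
print(f"LOQ = {loq} ng/dL; roughly 1 SD range: {loq - sd:.0f}-{loq + sd:.0f} ng/dL")
```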

Analytic Specificity, Interferences, and Recovery

Specificity in this context refers to the ability of the assay to measure a specific analyte without cross-reacting with other substances in the sample. Analytic specificity studies may involve adding known amounts of structurally similar compounds to a sample; for example, a cortisol assay may be tested for cross-reactivity with cortisone, prednisone, prednisolone, dexamethasone, 17-hydroxyprogesterone, and other steroids. Closely related are interference studies, which determine whether commonly encountered conditions, such as hemolysis, hyperbilirubinemia, and lipemia, affect test results. Recovery studies are performed less often; here, a known amount of standard is added to a sample, and the sample is assayed to see what percentage of the added standard is detected (ideally 100%, but frequently less).
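Recovery and cross-reactivity are both expressed as simple percentages. The functions below are a minimal sketch with invented cortisol-assay numbers; the cross-reactivity formula assumes the baseline result, the result after spiking, and the amount of related compound added are all expressed in the same units.

```python
def percent_recovery(baseline: float, spiked: float, added: float) -> float:
    """Percentage of a known amount of added standard that the assay detects."""
    return (spiked - baseline) * 100 / added


def percent_cross_reactivity(baseline: float, with_interferent: float,
                             interferent_added: float) -> float:
    """Apparent increase in analyte caused by a related compound, as a percentage
    of the amount of that compound added (same units throughout)."""
    return (with_interferent - baseline) * 100 / interferent_added


# Invented example: sample reads 10 µg/dL; 10 µg/dL cortisol standard is added
print(f"recovery: {percent_recovery(10.0, 19.0, 10.0):.0f}%")  # 90%
# Invented example: 100 µg/dL of a related steroid raises the reading from 10 to 25
print(f"cross-reactivity: {percent_cross_reactivity(10.0, 25.0, 100.0):.0f}%")  # 15%
```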

Accuracy/Method Comparison

Determining the accuracy of an assay is a multistep process, not all of which can be addressed in a typical analytic validation study. Accuracy may be part of the original test development decision-making process, for example, including an extraction and chromatography step to avoid otherwise problematic cross-reactions. Full determination of the clinical accuracy of a test may not be possible until the analytic validation is completed and the test is released to investigators for clinical validation studies. Therefore, so-called accuracy studies in an analytic validation are by necessity limited to a small portion of the whole accuracy process. The interference and recovery studies mentioned earlier are pertinent to test accuracy, but the most common approach is to compare the new test method with a comparator method. Ideally, the comparator will be a gold-standard reference method, but often no such method is available. As a proxy, the method under validation is compared with a well-accepted method, and the results are shown on a correlation plot, with the new method’s result on the y-axis and the comparison method’s result on the x-axis (Fig. 4.6, upper panel). A bias or difference plot (Fig. 4.6, lower panel) is also frequently used, because it better shows intermethod differences that may escape notice on a simple correlation plot.
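One widely used form of difference plot is the Bland-Altman plot, which graphs each between-method difference against the mean of the two results and summarizes agreement as a mean bias with approximate 95% limits of agreement (bias ± 1.96 SD). The sketch below computes only those summary numbers, from invented paired results; it does not draw the plot.

```python
import statistics


def bland_altman(new: list[float], comparator: list[float]) -> tuple[float, float, float]:
    """Mean bias (new minus comparator) and approximate 95% limits of agreement."""
    if len(new) != len(comparator):
        raise ValueError("paired results must have equal length")
    diffs = [n - c for n, c in zip(new, comparator)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Invented paired results on the same samples (same units for both methods)
new_method = [10.5, 21.0, 29.0, 42.0, 51.0]
comparator = [10.0, 20.0, 30.0, 40.0, 50.0]
bias, lower, upper = bland_altman(new_method, comparator)
print(f"bias {bias:+.2f}; 95% limits of agreement {lower:.2f} to {upper:.2f}")
```

When the differences grow with concentration, they are often expressed as percentages of the mean instead of absolute units.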

Sample Types and Matrix Effects

Sample types are not automatically interchangeable. Ethylenediaminetetraacetic acid (EDTA) plasma from a lavender top tube may perform fine in an assay, whereas heparin plasma from a green top tube may not. One parathyroid hormone assay may give comparable results from both EDTA plasma and red top tube serum, whereas a different parathyroid hormone assay may not. These represent examples of a “matrix effect.”

- Matrix refers to the components of a sample other than the analyte being measured.

As noted in our vignettes, the matrix (e.g., protein-free, viscous, high-salt, containing high levels of paraproteins) may have a profound effect on the accuracy of a laboratory assay.

The matrix of serum differs from that of plasma: serum lacks fibrinogen and anticoagulants and contains substances released by platelets during clotting. Differences in analyte values may be larger than this apparently small difference in composition would suggest; one study of metabolite profiles found that 104 of 122 metabolites had significantly higher concentrations in serum than in plasma. It is important to realize that an assay validated for one matrix cannot automatically be used for another.

Stability

Typically, sample stability is studied at ambient (about 22–26 °C), refrigerated (about 2–6 °C), and standard frozen (around −18 to −20 °C) temperatures. For assays used in clinical studies in which specimens may be banked for prolonged periods, stability studies should also be performed at deep-frozen temperatures (e.g., −70 °C). Aliquots stored at these temperatures for varying periods are retrieved and assayed to see whether results remain stable relative to baseline. Stability limits depend on the assay as well as on the analyte: an osteocalcin assay designed to detect only full-length molecules may have a short stability limit for samples at room temperature, whereas another assay that detects osteocalcin fragments as well as full-length protein may have a much longer stability limit.
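Stability results are usually judged as the percentage change from the baseline (time-zero) result against a predefined acceptance limit. The sketch below assumes a hypothetical ±10% limit and invented results; actual acceptance criteria vary by analyte and laboratory.

```python
def stability_report(baseline: float, stored: dict[str, float],
                     limit_pct: float = 10.0) -> dict[str, tuple[float, bool]]:
    """Percent change from baseline for each storage condition, and whether it
    stays within +/- limit_pct (a hypothetical acceptance limit)."""
    report = {}
    for condition, value in stored.items():
        change = (value - baseline) * 100 / baseline
        report[condition] = (change, abs(change) <= limit_pct)
    return report


# Invented results for one sample (baseline measured at time zero)
results = stability_report(
    baseline=50.0,
    stored={"ambient, 24 h": 41.0, "refrigerated, 72 h": 48.5, "frozen -20 C, 30 d": 49.0},
)
for condition, (change, ok) in results.items():
    print(f"{condition}: {change:+.1f}% -> {'stable' if ok else 'UNSTABLE'}")
```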

Carryover

Another important quality process is ensuring that there is no cross-contamination between samples, so that a sample with a very high value will not carry over and falsely elevate the result of the next sample assayed. Assays should be designed to minimize this problem, but laboratory staff should also be alert to check for carryover whenever a sample with an extremely elevated value is encountered.
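One widely used protocol for estimating carryover runs a high-concentration sample three times followed by a low-concentration sample three times (H1, H2, H3, L1, L2, L3) and computes carryover as (L1 − L3)/(H3 − L3) × 100%: any excess in the first low result is attributed to residue from the high sample. The sketch below applies that formula to invented results; acceptable carryover limits are set by each laboratory and are not assumed here.

```python
def carryover_percent(high_runs: list[float], low_runs: list[float]) -> float:
    """Carryover (%) from a run sequence H1, H2, H3, L1, L2, L3:
    (L1 - L3) / (H3 - L3) * 100."""
    if len(high_runs) != 3 or len(low_runs) != 3:
        raise ValueError("expected three high and three low replicates")
    h3 = high_runs[-1]
    l1, l3 = low_runs[0], low_runs[-1]
    return (l1 - l3) / (h3 - l3) * 100


# Invented results: three runs of a very high sample, then three of a low sample
print(f"carryover: {carryover_percent([1000.0, 1005.0, 998.0], [5.6, 5.0, 5.0]):.3f}%")
```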

Methodology

Immunoassays

Immunoassay is an important methodology in the endocrine laboratory. Understanding the basic principles involved allows specialists to: (1) identify when a particular immunoassay is or is not appropriate for a particular clinical scenario, (2) anticipate potential physiological and technical issues affecting the interpretation of laboratory results, and (3) understand how to work with the clinical laboratory to investigate unanticipated or clinically discordant test results.

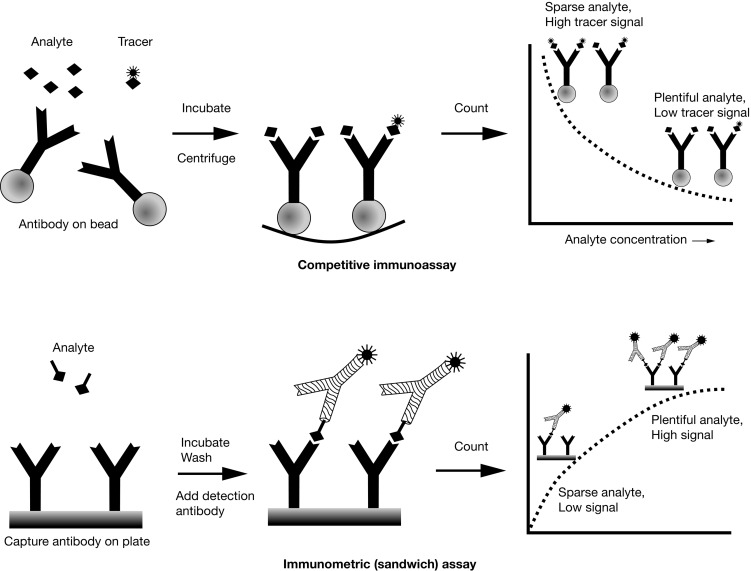

Competitive Immunoassay versus Immunometric (Sandwich) Assay

There are two main immunoassay formats relevant to endocrine laboratory testing, and understanding the basic differences between them helps with both the interpretation of results and the troubleshooting of unexpected findings. The first class of assays is termed competitive, with the RIA as the archetypal example. A primary antibody against the analyte of interest is added to the patient’s sample, together with a radiolabeled version of the analyte (tracer) that competes with the endogenous analyte for binding to the primary antibody (Fig. 4.7, upper panel). After a sufficiently long incubation, the antibody-bound fraction is separated from the free fraction, either by precipitating the primary antibody with a second anti-immunoglobulin G (IgG) antibody or polyethylene glycol, or (most commonly nowadays) by using primary antibody attached to a solid support, such as a bead, which allows a simple centrifugation step to collect the primary antibody. Any unbound tracer or analyte is washed away, and the amount of tracer in the bound fraction is then quantified.
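In a competitive assay the signal (bound tracer) falls as analyte concentration rises, so results are read from an inverse calibration curve. Immunoassay calibration curves are often fitted with a four-parameter logistic (4PL) function; the sketch below assumes that model with invented parameters, and shows why anything that reduces tracer binding, such as tracer sticking to the tube in Clinical Scenario 1, is read as analyte.

```python
def four_pl(conc: float, top: float, bottom: float, ec50: float, slope: float) -> float:
    """Four-parameter logistic curve. With top > bottom, signal decreases as
    concentration increases, as in a competitive immunoassay."""
    return bottom + (top - bottom) / (1 + (conc / ec50) ** slope)


def concentration_from_signal(signal: float, top: float, bottom: float,
                              ec50: float, slope: float) -> float:
    """Invert the 4PL curve; valid only for signals strictly between bottom and top."""
    if not bottom < signal < top:
        raise ValueError("signal outside the calibrated range")
    return ec50 * ((top - bottom) / (signal - bottom) - 1) ** (1 / slope)


# Invented calibration: signal as % of tracer bound with no analyte present (B/B0)
params = dict(top=100.0, bottom=5.0, ec50=50.0, slope=1.0)

print(four_pl(0.0, **params))                     # no analyte: maximal binding, 100.0
print(concentration_from_signal(52.5, **params))  # 50.0
# A tube that loses tracer to its walls binds less, and reads as analyte:
print(f"{concentration_from_signal(30.0, **params):.0f}")  # about 140
```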