
Allogeneic hematopoietic stem cell transplantation remains the only known curative procedure for the myelodysplastic syndromes (MDS). MDS is currently the third most common indication for allogeneic stem cell transplantation reported to the Center for International Blood and Marrow Transplant Research (CIBMTR). Comparative registry analyses have documented the benefit of transplantation over conventional supportive and disease-modifying therapy; however, this should not be interpreted as an indication for transplantation in all patients. Despite its curative potential, transplantation is not undertaken routinely, because the median age at diagnosis of MDS is in the late seventh decade of life, and careful consideration must be given to whether each patient is an appropriate transplant recipient. This article focuses on appropriate patient selection for transplantation for MDS.

Timing of transplantation

The timing of transplantation has always been a topic of discussion for patients and clinicians alike. For both patients and physicians, faced with the uncertainty of transplantation outcomes but the certainty of eventual MDS progression, decisions often are based on patient preference. These decisions are supported by several single- and multi-institution experiences that demonstrated improved outcomes with earlier transplantation. The inherent bias in these analyses is that patients and physicians self-select for early transplantation. This bias, although recognized, often is overlooked, because patients who choose early transplantation identify with those included in the analyses and use the results to justify their decision.

Most analyses that have examined the timing of transplantation for MDS have involved patients who underwent myeloablative procedures. For instance, the Seattle group reported results of targeted busulfan therapy in patients undergoing related or unrelated donor transplantation and analyzed them according to the International Prognostic Scoring System (IPSS). Although patients were enrolled prospectively in a targeted busulfan treatment program, the decision to undergo transplantation was based on physician and patient preference. As predicted, results correlated with IPSS risk group and suggested that outcomes were better with transplantation at an earlier stage of disease. There were no relapses among patients in the lowest IPSS risk group, whereas the relapse rate was 42% among patients in the highest IPSS category. Consequently, survival was 80% in the lowest IPSS risk group but under 30% in the highest.
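Because the IPSS risk groups recur throughout this discussion, a minimal sketch of the calculation may help. It assumes the standard published IPSS point weights for marrow blast percentage, karyotype group, and number of cytopenias, and the usual low / intermediate-1 / intermediate-2 / high cutoffs; none of these weights are given in this article, so they should be checked against the original IPSS publication before any use. Points are held as integer half-points so that category boundaries compare exactly.

```python
# Sketch of an IPSS calculator. ASSUMED weights (not stated in this article):
#   marrow blasts: <5% = 0, 5-10% = 0.5, 11-20% = 1.5, 21-30% = 2.0
#   karyotype:     good = 0, intermediate = 0.5, poor = 1.0
#   cytopenias:    0-1 = 0, 2-3 = 0.5
# Scores are stored as half-points (score * 2) to avoid floating-point cutoffs.

# good: normal, -Y alone, del(5q) alone, del(20q) alone
# poor: complex (>= 3 abnormalities) or chromosome 7 abnormalities
# intermediate: all other karyotypes
KARYOTYPE_HALF_POINTS = {"good": 0, "intermediate": 1, "poor": 2}


def blast_half_points(marrow_blast_pct: float) -> int:
    """Half-points contributed by the bone marrow blast percentage."""
    if marrow_blast_pct < 5:
        return 0
    if marrow_blast_pct <= 10:
        return 1
    if marrow_blast_pct <= 20:
        return 3
    if marrow_blast_pct <= 30:
        return 4
    raise ValueError("marrow blasts above 30% fall outside the IPSS")


def count_cytopenias(hb_g_dl: float, anc_1e9_per_l: float, plt_1e9_per_l: float) -> int:
    """Cytopenias as assumed here: Hb < 10 g/dL, ANC < 1.8 x 10^9/L, platelets < 100 x 10^9/L."""
    return sum([hb_g_dl < 10, anc_1e9_per_l < 1.8, plt_1e9_per_l < 100])


def ipss(marrow_blast_pct: float, karyotype: str, hb_g_dl: float,
         anc_1e9_per_l: float, plt_1e9_per_l: float) -> tuple[float, str]:
    """Return (IPSS score, IPSS risk group)."""
    half = blast_half_points(marrow_blast_pct) + KARYOTYPE_HALF_POINTS[karyotype]
    if count_cytopenias(hb_g_dl, anc_1e9_per_l, plt_1e9_per_l) >= 2:
        half += 1
    if half == 0:
        group = "low"
    elif half <= 2:          # score 0.5-1.0
        group = "intermediate-1"
    elif half <= 4:          # score 1.5-2.0
        group = "intermediate-2"
    else:                    # score >= 2.5
        group = "high"
    return half / 2, group


if __name__ == "__main__":
    print(ipss(7, "intermediate", 9.0, 2.0, 150))   # (1.0, 'intermediate-1')
```

The half-point representation is a design choice for exactness; the clinical inputs (karyotype group, cytopenia thresholds) would in practice come from the treating team's assessment.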

de Witte and colleagues examined transplantation outcomes in patients with refractory anemia or refractory anemia with ringed sideroblasts. This retrospective analysis included 374 patients who underwent transplantation from matched sibling or unrelated donors, with standard myeloablative or reduced-intensity conditioning regimens used as deemed appropriate by the treating physician. Unfortunately, fewer than half of the patients had sufficient information to calculate IPSS risk scores, so outcomes stratified by IPSS were not reliable. Another shortcoming was that the transplants spanned more than a decade, and differences in transplantation technology were evident: overall survival improved with more recent year of transplantation. Although conditioning intensity, stem cell source, and donor type did not affect outcome, the authors identified year of transplantation, recipient age, and, most relevant here, the duration of diagnosed myelodysplastic disease as significant predictors of overall survival. None of these factors was associated with relapse-free survival in multivariable analysis. The authors therefore suggested that earlier transplantation was associated with improved outcome. Again, this should not be interpreted as a recommendation for early transplantation in individuals with otherwise low-risk MDS, because the authors did not compare outcomes of patients who chose supportive care alone with those of patients who chose transplantation.

To address the shortcomings of these and other retrospective analyses subject to selection bias, the author and colleagues constructed a Markov decision model to estimate how treatment decisions would affect overall outcome in large cohorts of patients with newly diagnosed MDS. The model compared three strategies: transplantation at diagnosis, transplantation delayed by a fixed number of years, and transplantation at the time of leukemic transformation. Using data from several large, nonoverlapping databases, the author and colleagues showed that the optimal strategy for patients with low- and intermediate-1 risk IPSS disease was to delay transplantation until leukemic progression, whereas immediate transplantation was favored for patients with intermediate-2 and high-risk IPSS scores. These recommendations remained stable when adjustments for quality of life were also incorporated. One shortcoming of this analysis was that it could not provide treatment guidance at other clinically relevant time points for patients not undergoing immediate transplantation. Although not formally tested, other important clinical events, such as a new transfusion requirement, recurrent infections, or recurrent bleeding episodes, certainly could be considered triggers to proceed to transplantation.
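The strategy comparison described above can be illustrated with a deliberately simplified Markov cohort sketch. It is not the author's published model: it compares only two of the three strategies (transplantation at diagnosis versus at leukemic transformation), uses yearly cycles without half-cycle correction, and every probability and utility in the hypothetical `ModelParams` class is a placeholder, not a value from the databases used in the analysis. Setting `u_mds` and `u_post` below 1 turns life-years into quality-adjusted life-years, mirroring the quality-of-life adjustment mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelParams:
    """HYPOTHETICAL annual probabilities and utilities, for illustration only."""
    p_progress: float = 0.05        # MDS -> leukemic transformation, per year
    p_die_mds: float = 0.03         # death from MDS before transformation, per year
    trm_early: float = 0.30         # one-time transplant mortality if transplanted in MDS phase
    trm_late: float = 0.50          # one-time transplant mortality/failure at transformation
    p_die_post_early: float = 0.04  # annual mortality after an MDS-phase transplant
    p_die_post_late: float = 0.10   # annual mortality after a transplant at transformation
    u_mds: float = 1.0              # quality-of-life weight while living with MDS
    u_post: float = 1.0             # quality-of-life weight after transplantation


def expected_life_years(strategy: str, p: ModelParams, years: int = 30) -> float:
    """Markov cohort simulation with yearly cycles.

    strategy: "immediate" (transplant at diagnosis) or "at_progression"
    (transplant only when leukemic transformation occurs).
    Returns (quality-adjusted) life-years per patient over the horizon.
    """
    if strategy == "immediate":
        # The whole cohort is transplanted at once; only transplant survivors remain.
        mds, post_early, post_late = 0.0, 1.0 - p.trm_early, 0.0
    elif strategy == "at_progression":
        mds, post_early, post_late = 1.0, 0.0, 0.0
    else:
        raise ValueError(f"unknown strategy: {strategy}")

    total = 0.0
    for _ in range(years):
        # Credit one cycle of (weighted) survival to everyone alive at its start.
        total += mds * p.u_mds + (post_early + post_late) * p.u_post
        progressed = mds * p.p_progress
        mds = mds * (1.0 - p.p_progress - p.p_die_mds)
        post_early = post_early * (1.0 - p.p_die_post_early)
        # Patients who transform are transplanted; only transplant survivors stay alive.
        post_late = post_late * (1.0 - p.p_die_post_late) + progressed * (1.0 - p.trm_late)
    return total


if __name__ == "__main__":
    params = ModelParams()
    for s in ("immediate", "at_progression"):
        print(s, round(expected_life_years(s, params), 2))
```

With real inputs, the strategy with the larger expected (quality-adjusted) life-years would be preferred; a fixed-delay strategy could be added by moving the remaining MDS cohort into transplantation after a chosen number of cycles.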

In addition to clinically relevant events that might trigger transplantation, there may be subgroups of patients with low- or intermediate-1 risk myelodysplasia in whom early transplantation could offer a survival advantage. Other prognostic systems may help identify such subgroups.

Investigators at the MD Anderson Cancer Center analyzed the outcomes of 865 patients with low- or intermediate-1 risk IPSS disease referred to their center between 1976 and 2005. Using regression techniques, the authors subdivided these patients, on the basis of cytogenetics, age, hemoglobin level, degree of thrombocytopenia, and marrow blast percentage, into three groups with distinct clinical outcomes. Importantly, the MD Anderson model used clinical data collected at the time of referral, whereas the IPSS model used data collected at the time of diagnosis.
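A minimal sketch of how an additive lower-risk score of this kind assigns patients to three groups follows. The point weights and group cutoffs below are assumptions recalled from the published MD Anderson lower-risk model, not values stated in this article; only the factors themselves and the two platelet gradations and single hemoglobin threshold are described here. The function name `mda_lower_risk_score` is hypothetical, and the weights must be verified against the original publication before any use.

```python
def mda_lower_risk_score(unfavorable_cytogenetics: bool, age_years: float,
                         hb_g_dl: float, plt_1e9_per_l: float,
                         marrow_blast_pct: float) -> tuple[int, int]:
    """Return (points, category 1-3) for a lower-risk (IPSS low/int-1) patient.

    ASSUMED weights (verify against the original model):
      unfavorable cytogenetics 1; age >= 60 years 2; hemoglobin < 10 g/dL 1;
      platelets < 50 x 10^9/L 2, or 50-200 x 10^9/L 1; marrow blasts >= 4% 1.
    ASSUMED categories: 0-2 points -> 1, 3-4 -> 2, 5-7 -> 3.
    """
    points = 0
    if unfavorable_cytogenetics:
        points += 1
    if age_years >= 60:
        points += 2
    if hb_g_dl < 10:
        points += 1
    if plt_1e9_per_l < 50:
        points += 2
    elif plt_1e9_per_l <= 200:   # boundary handling at 200 is an assumption
        points += 1
    if marrow_blast_pct >= 4:
        points += 1

    if points <= 2:
        category = 1
    elif points <= 4:
        category = 2
    else:
        category = 3
    return points, category


if __name__ == "__main__":
    # Hypothetical 62-year-old with normal karyotype, Hb 11 g/dL, platelets 150, 2% blasts.
    print(mda_lower_risk_score(False, 62, 11.0, 150, 2))   # (3, 2)
```

Because the score is additive, a single factor such as age or severe thrombocytopenia can move a patient across a category boundary, which is how some IPSS intermediate-1 patients end up in a higher-risk group.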

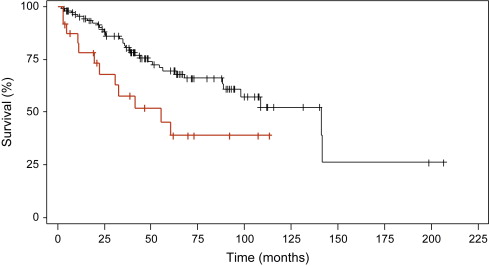

To determine whether the clinical factors in the MD Anderson model added prognostic information when applied to the IPSS cohort, the author and colleagues applied this algorithm to patients in the original IPSS cohort. Using the MD Anderson scoring system, 136 low- and intermediate-1 risk patients aged 18 to 60 years could be further subclassified into prognostically relevant subgroups. Because patient numbers were small, the MD Anderson scores were collapsed, which identified two distinct subgroups within the low- and intermediate-1 risk groups, with median survivals of 141.2 months and 55.2 months (P = .012, Fig. 1). Importantly, these two subgroups did not simply reproduce the original low- and intermediate-1 IPSS risk groups: one third of the intermediate-1 risk patients were assigned to the higher-risk MD Anderson group, whereas all low-risk IPSS patients remained in the lower-risk group. Thus, some intermediate-1 risk patients who are reclassified as higher risk by the MD Anderson score may benefit from earlier, more aggressive intervention, including transplantation, although this requires formal testing in either a mathematical model or a prospective clinical trial.

The MD Anderson scoring system includes two gradations of thrombocytopenia (<50 × 10⁹/L and 50–200 × 10⁹/L) but only one category for anemia (hemoglobin <10 g/dL), which does not reliably capture red cell transfusion requirement. Transfusion frequency and its sequelae, particularly iron overload, have both recently been shown to be important prognostic factors for MDS transplant outcomes. For example, Platzbecker and colleagues compared the outcomes of patients who were and were not transfusion-dependent at the time of transplantation. Even though the transfusion-dependent group had more low-risk features than the transfusion-independent group, its overall survival was lower: 3-year overall survival was 49%, compared with 60% in the transfusion-independent group (P = .1), although this difference did not reach conventional statistical significance.

Because transfusion dependency appears to have prognostic importance, it should be considered when selecting patients for transplantation. Given the evolving role of transfusion support in determining prognosis, the Pavia group examined a cohort of patients stratified by IPSS to determine the relevance of the World Health Organization (WHO) classification within different IPSS groups. The new WHO classification of myelodysplastic disorders clearly stratifies patients into distinct prognostic categories. For patients with low- and intermediate-1 risk IPSS scores, the WHO histologic classification significantly differentiated survival among histologic subtypes within each IPSS group. Patients in higher IPSS categories did not have significantly different survival when stratified by WHO histology. More importantly, in the same analysis, the authors demonstrated a significant effect of transfusion requirement on survival. As would be expected, outcomes in patients with higher-grade myelodysplasia were affected less by transfusion requirement than were outcomes in patients with less advanced WHO histologies. Because transfusion retained its impact even among WHO-stratified patients, transfusion requirement was then incorporated into the newer WHO classification-based Prognostic Scoring System (WPSS). In this system, a regular transfusion requirement (defined as at least one transfusion every 8 weeks over a 4-month period) was given the same regression weight as moving from favorable to intermediate cytogenetics, or from intermediate to unfavorable cytogenetics.
In contrast to the original IPSS, which provides prognostic information only at the time of initial MDS diagnosis, the WPSS is time-dependent: patients can be re-evaluated with it continually, and updated prognostic information can be generated at any point in the treatment course. The development of new cytogenetic changes or of an ongoing transfusion need therefore adds usable information that can help guide treatment decisions, including those about transplantation. A formal decision analysis using the WPSS is underway (M. Cazzola, personal communication, 2009), and its results may supplant those based only on the IPSS. Notably, the WPSS has already been shown to have independent prognostic significance for survival and relapse after allogeneic transplantation.
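The time-dependent use of the WPSS can be sketched as a score that is simply recomputed at each visit. This is a minimal sketch under stated assumptions: only the equal weighting of regular transfusion need and one cytogenetic step comes from the text above; the WHO-category points and risk-group cutoffs are assumptions recalled from the published WPSS and should be verified. The transfusion rule is a hypothetical operationalization that approximates "4 months" as 16 weeks split into two 8-week blocks, each of which must contain a transfusion. Function names are illustrative.

```python
from datetime import date, timedelta

# ASSUMED point weights (only the 1-point transfusion weight, equal to one
# cytogenetic step, is stated in the text).
WHO_POINTS = {"RA": 0, "RARS": 0, "del(5q)": 0,
              "RCMD": 1, "RCMD-RS": 1, "RAEB-1": 2, "RAEB-2": 3}
KARYOTYPE_POINTS = {"good": 0, "intermediate": 1, "poor": 2}


def regular_transfusion_need(transfusion_dates: list[date], on: date) -> bool:
    """Hypothetical rule: each of the two 8-week blocks before `on`
    (16 weeks in total) must contain at least one red cell transfusion."""
    eight_weeks = timedelta(weeks=8)
    blocks = [(on - 2 * eight_weeks, on - eight_weeks), (on - eight_weeks, on)]
    return all(any(start < d <= end for d in transfusion_dates)
               for start, end in blocks)


def wpss(who_category: str, karyotype: str, transfusion_dependent: bool) -> tuple[int, str]:
    """Return (WPSS score, risk group) using the ASSUMED cutoffs
    0 very low, 1 low, 2 intermediate, 3-4 high, 5-6 very high."""
    score = (WHO_POINTS[who_category] + KARYOTYPE_POINTS[karyotype]
             + int(transfusion_dependent))
    if score == 0:
        group = "very low"
    elif score == 1:
        group = "low"
    elif score == 2:
        group = "intermediate"
    elif score <= 4:
        group = "high"
    else:
        group = "very high"
    return score, group


if __name__ == "__main__":
    # Hypothetical patient with RCMD, re-scored at two visits as transfusion
    # need and karyotype change over time.
    history = [date(2009, 2, 10), date(2009, 3, 20), date(2009, 5, 1)]
    for visit, karyotype in [(date(2009, 1, 15), "good"), (date(2009, 5, 15), "intermediate")]:
        dependent = regular_transfusion_need(history, visit)
        print(visit, wpss("RCMD", karyotype, dependent))
```

In this hypothetical example the patient moves from the low-risk group at the first visit to the high-risk group once a new cytogenetic change and a regular transfusion need appear, which is the kind of updated information that could prompt reconsideration of transplantation.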

If transfusion dependence is an independent risk factor for excess mortality after transplantation, there must be a measurable effect of this dependence that is independent of disease activity. Excess transfusion leads to accumulation of stored iron, which often is reflected in the serum ferritin level. At the author's own center, an elevated pretransplantation serum ferritin level was reported to be strongly associated with lower overall and disease-free survival after transplantation; this association was limited to the subgroup of patients with acute leukemia or MDS and was largely attributable to increased treatment-related mortality. Similarly, a prospective single-institution study of 190 adults undergoing myeloablative transplantation demonstrated that an elevated pretransplantation serum ferritin level was associated with increased risk of 100-day mortality, acute graft-versus-host disease, and a composite endpoint of bloodstream infection or death.
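Because the body has no regulated pathway for excreting excess iron, transfused iron accumulates roughly in proportion to the number of units received. The arithmetic sketch below assumes about 200 to 250 mg of iron per unit of packed red cells (a commonly quoted approximation, not a figure from this article) and ignores small physiological iron losses; the function name and default value are hypothetical.

```python
def transfusional_iron_g(units: int, mg_per_unit: float = 225.0) -> float:
    """Approximate cumulative iron delivered by red cell transfusion, in grams.

    mg_per_unit is an ASSUMED midpoint of the commonly quoted 200-250 mg per unit.
    Physiological iron losses are ignored, so this is an upper-bound style estimate.
    """
    if units < 0:
        raise ValueError("units must be non-negative")
    return units * mg_per_unit / 1000.0


if __name__ == "__main__":
    # Hypothetical patient receiving 2 units every 4 weeks for one year (26 units).
    low, high = transfusional_iron_g(26, 200.0), transfusional_iron_g(26, 250.0)
    print(f"about {low:.1f} to {high:.1f} g of iron in one year")
```

Even a modest, regular transfusion requirement therefore delivers several grams of iron per year, which helps explain why transfusion history and ferritin track together.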

Other, more recently described factors also may be important in determining MDS outcome and may help guide treatment decisions toward or away from allogeneic stem cell transplantation. For example, Haase and colleagues recently analyzed individual cytogenetic changes in more than 2000 individuals with MDS. They confirmed several previously described favorable cytogenetic changes, associated with median survivals ranging from 32 to 108 months, with median survival not reached in some subgroups. They also identified novel individual cytogenetic changes associated with an intermediate prognosis that were not addressed in the IPSS analysis. At an even finer level, molecular prognostication may hold more information than cytogenetics. Mills and colleagues recently described a gene expression profiling method to predict the risk of progression from MDS to acute myeloid leukemia. The model accurately distinguished patients with a time to transformation of more than or less than 18 months, and it predicted time to leukemic transformation and overall survival more powerfully than the IPSS. This gene expression profile still needs to be tested in a prospective trial in which it is used to guide therapy, to determine whether it is truly effective.

