An Introduction to Human Factors in Aerospace

Thomas Rathjen

Mihriban Whitmore

Kerry McGuire

Namni Goel

David F. Dinges

Anthony P. Tvaryanas

Gregory Zehner

Jeffrey Hudson

R. Key Dismukes

David M. Musson

The twentieth century is the age of the machine-the most complex and ingenious of which are designed to take us off our planet earth. But at the heart of these almost unbelievably sophisticated creations is the thin-skinned perishable bag of carbon, calcium and phosphorus combined with oxygen and nitrogen, a few ounces of sulphur and chlorine, traces of iron, iodine, cobalt and molybdenum added to fat and forty litres of water—Man. There he sits at the centre of this Aladdin’s cave of scientific genius, the finger on the button, the tiny battery which will operate all this complexity.

INTRODUCTION

What is Human Factors?

What is human factors? Why is it an important consideration in the context of aerospace medicine? The purpose of this chapter is to address these questions and, by way of examples, provide aerospace medicine practitioners with a broad awareness of this field that is just as critical to the success of complex human endeavors in aerospace as is physiological well-being.

Many terms and definitions are used to describe the practice of human factors. It is referred to as human engineering, ergonomics, industrial engineering, human-systems engineering, and other names. A popular definition was established by the International Ergonomics Association in August 2000 (2):

“Ergonomics (or human factors) is the scientific discipline concerned with the understanding of interactions among humans and other elements of a system, and the profession that applies theory, principles, data, and other methods to design in order to optimize human well-being and overall system performance.”

This definition illustrates well the essential elements of human factors. First, its goal is to enable a system to perform successfully by considering the role of the human in that system. Second, all interactions the human may experience are important: hardware, software, environment … even other humans. Third, the practice of human factors is grounded in scientific methodology.

As scientific and engineering disciplines go, human factors is still a relatively young field. Its emergence coincided with the development of increasingly complex systems that humans were called upon to operate, such as the airplane and World War II era weapons systems. Early studies were concerned with such topics as the use of instruments and automatic pilots in early aircraft cockpits, evaluation and training of pilot skills, effects of fatigue on pilot performance, as well as visual perception and display design. Currently there is a tremendous variety of specialized fields within the human factors discipline, ranging from anthropometrics and biomechanics, to sensory perception, to cognitive performance factors, to information processing, and to team dynamics.

As the definition given in the preceding text illustrates, these diverse fields have in common the goal of understanding how the human’s limitations, capabilities, characteristics, behaviors, and responses will affect performance of a given system, and how that understanding can then be applied

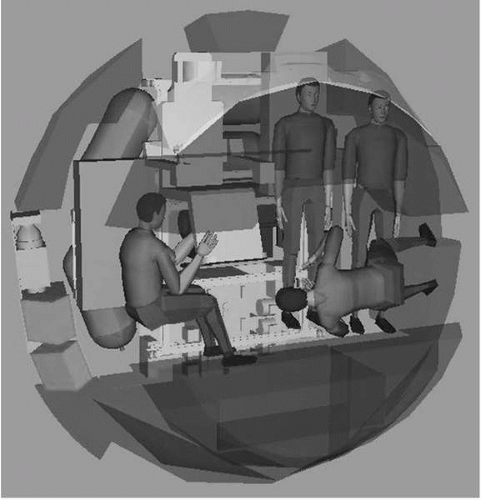

to design of the system to minimize risks and optimize performance. The anthropometry profession will determine how a space suit must be designed to accommodate the entire astronaut population. The habitability specialist will determine vehicle architectures that support operator tasks (Figure 23-1). The investigator in human information processing capabilities will specify how aircraft systems displays must be designed to maximize situational awareness under a variety of conditions. The cognitive performance expert will specify the level of automation and decision support necessary for a team to operate a complex missile defense system. These are just a few examples.

It is impossible to provide a comprehensive introduction to human factors in the context of a single book chapter. Rather, this chapter is intended to provide the aerospace medicine student with a general appreciation of the valuable contribution of human factors to the success of aerospace systems and the well-being of the humans who operate and interact with them. This will be done by exploring in greater depth just a few examples of human factors work in aerospace. These examples are grouped into two topics: the understanding and characterization of human performance capabilities, and the application of human factors in system design and operations.

Why is Human Factors Important to Aerospace Medicine?

The human factors discipline should be an integral part of the design and development process of every system, including medical systems. Applying human factors principles in the medical domain promotes caregiver and patient safety and system performance, and builds operator effectiveness into medical device design.

FIGURE 23-1 An example of computer human modeling analysis to assess the habitable volume adequacy of an early crew exploration vehicle (CEV) conceptual design.

There are numerous cases where system failure resulted when human factors guidelines were not considered. A report in 1999 suggested that at least 44,000 (and up to 98,000) people die in the United States each year from medical errors in hospitals, a figure greater than that recorded for road traffic fatalities, breast cancer, or acquired immune deficiency syndrome (AIDS) (3). The U.S. Food and Drug Administration (FDA) has stated that although many of these fatalities cannot be attributed to human errors involving medical equipment or systems, some certainly can be (4).

Some examples of human factors applications and benefits in medicine (5) are listed in the following text:

Understanding human limitations early in the development of medical devices can reduce errors and avoid performance problems exacerbated by stress and fatigue

Using human factors in a design process can reduce the costs of procuring and maintaining products

Human factors can minimize the incidence of injury or longer-term discomfort/dissatisfaction from poor working environments

A human factors task analysis can help identify key components of surgical skill, ensuring that students have affordable, appropriate, valid, and reliable training

To provide guidance on human factors practices in medicine, the Association for the Advancement of Medical Instrumentation produced a document titled, “Human Factors Engineering Guidelines and Preferred Practices for the Design of Medical Devices (AAMI HE48:1993).” This document covers human factors engineering processes and general recommendations for human factors engineering, workspace, signs/symbols/markings, controls and displays, alarms and signals, and user-computer interface. Medication bar-coding is one example of human factors applied in practice. In 1999, the Veterans Affairs (VA) hospital system started a nationwide bar-coding program. In simplified form, the process starts when a physician orders a medication. This order is transmitted to the pharmacy, where a bar-code is generated. A pharmacist verifies the order and the order is sent back to the floor. A nurse on the floor uses a scanner to compare the bar-code in the patient’s bracelet against the bar-code for the order. In 2006, the FDA stated that bar-code systems can reduce the number of medication errors by 50% and prevent more than 500,000 adverse drug events over the next 20 years (6).
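The final comparison step of the bar-coding process described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the VA system's actual logic: the `MedicationOrder` fields, the `check_scan` function, and the code values are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MedicationOrder:
    """A medication order as it returns from the pharmacy (hypothetical fields)."""
    patient_code: str          # code expected on the patient's bracelet
    pharmacist_verified: bool  # True once a pharmacist has checked the order


def check_scan(order, bracelet_code):
    """Compare a scanned bracelet code with the order; return (ok, reason)."""
    if not order.pharmacist_verified:
        return False, "order has not been verified by a pharmacist"
    if bracelet_code != order.patient_code:
        return False, "bracelet does not match the order"
    return True, "match"


# Made-up codes for illustration only.
order = MedicationOrder(patient_code="P-001", pharmacist_verified=True)
print(check_scan(order, "P-001"))
print(check_scan(order, "P-002"))
```

The design point is that the match is decided by the scanner rather than by the nurse's memory or attention, which is where slips are most likely under time pressure.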

In addition to the relevance of human factors in the medical domain, there is another reason it is advantageous for the aerospace medicine practitioner to have an awareness and appreciation of the field. As new and ever more complex air and space vehicle systems are developed, it is important for the aerospace medicine practitioner to be involved in the design process to ensure that the system operators’ physiological and behavioral factors are considered and human health and well-being protected. Similarly, human factors representatives must be involved to ensure user-centered design solutions that will enable system success. Therefore, teaming between these disciplines, both of which focus on the human element, lets each leverage the other and adds weight to human issues in practical, real-world system development programs.

HUMAN PERFORMANCE

Before human factors can be applied to the design of aerospace systems and operational procedures, it is necessary to understand humans’ capabilities, limitations, and characteristics that will affect system performance. How big (or small) are the users of a system? How far can they reach? What colors do they best perceive in certain situations? How much can they remember under stressful conditions? How does the environment, such as temperature, atmospheric composition, and lighting, affect their endurance on cognitive tasks and physical tasks? In addition, how much variance in all of these things is there among the entire population of system users?

One should recognize the relevance of aerospace medicine concerns to the understanding of human performance, both physiological and behavioral. Fully explaining human performance characteristics will usually require an in-depth knowledge of physiological parameters. For example, the musculoskeletal system, and also psychological and cardiovascular factors, will determine strength and endurance capabilities. Moreover, behavioral and neurologic health will affect cognitive abilities. It is not typically the role of the human factors practitioner to fully explore and explain the underlying physiological drivers for human characteristics, but rather to determine that they exist and statistically bound them (for the given user population) so that designs can accommodate them. However, the student of aerospace medicine should have a particular appreciation of human performance considerations owing to a unique understanding of human physiological and behavioral health in aviation and space environments.

This section provides three examples of human performance characteristics: fatigue, human error, and anthropometry. Again, these are not intended to be comprehensive, but rather to provide the reader with a general awareness of human performance considerations in aerospace applications.

Fatigue

Overview: Sleep Deprivation, Performance, and Fatigue

Fatigue is a ubiquitous risk in all modes of transportation. In operational environments, cognitive performance degrades with sleep loss, often referred to as a fatigue effect, which can be measured through a simple sustained attention task, the psychomotor vigilance task (7). In most cases, the effects of fatigue on performance are due to inadequate sleep and/or functioning at a nonoptimal circadian phase, but such effects can also occur as a function of prolonged work hours (time on task) and inadequate recovery times. The effects of inadequate sleep (rest) and recovery on cognitive performance are primarily manifested as increasing variability in cognitive speed and accuracy, resulting in increasingly unreliable behavioral performance. When partial or total sleep deprivation produces increased performance variability, this is hypothesized to reflect state instability, defined as moment-to-moment shifts in the relationship between neurobiological systems mediating wake maintenance and sleep initiation (7). Sleep-initiating mechanisms repeatedly interfere with wakefulness, making cognitive performance both increasingly variable and dependent on compensatory measures, such as motivation, which are unable to override increased sleep pressure without consequences (7).
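To make the notion of increasing performance variability concrete, the following Python sketch summarizes reaction times from a sustained attention task. It is a minimal illustration under stated assumptions: the reaction times are made up, the function name `summarize_reaction_times` is hypothetical, and the 500 ms lapse threshold is an assumed convention that does not come from the text above.

```python
from statistics import mean, stdev

# Assumed threshold (not given in the text): responses slower than
# 500 ms are counted as attentional lapses.
LAPSE_THRESHOLD_MS = 500.0


def summarize_reaction_times(reaction_times_ms):
    """Return mean, standard deviation, and lapse count for one session."""
    if len(reaction_times_ms) < 2:
        raise ValueError("need at least two reaction times")
    lapses = sum(1 for rt in reaction_times_ms if rt > LAPSE_THRESHOLD_MS)
    return {
        "mean_ms": mean(reaction_times_ms),
        "sd_ms": stdev(reaction_times_ms),
        "lapses": lapses,
    }


# Made-up sessions for illustration only.
rested = [240, 255, 250, 262, 248, 251]
sleep_deprived = [245, 610, 258, 930, 252, 701]

for label, session in (("rested", rested), ("sleep deprived", sleep_deprived)):
    print(label, summarize_reaction_times(session))
```

In the made-up sleep-deprived session, many responses are as fast as the rested ones; the difference shows up in the spread and in the occasional very slow response, which is the pattern the state instability hypothesis describes.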

Sleep deprivation induces a wide range of effects on cognitive performance. Although cognitive performance usually becomes progressively worse with extensions of time-on-task, performance on even very brief cognitive tasks that require speed of cognitive throughput, working memory, and other aspects of attention is sensitive to sleep deprivation. Moreover, divergent thinking and decision-making skills involving the prefrontal cortex are adversely affected by sleep loss (7). These include skills critical in operational environments such as risk assessment, assimilation of changing information, updating strategies based on new information, lateral thinking, innovation, maintaining interest in outcomes, insight, communication, and temporal memory skills (7). In addition, fatigue and deficits in neurocognitive performance due to sleep loss compromise many working memory and executive attention functions important in operational environments. These include, but are not limited to, assessment of the scope of a problem due to changing or distracting information, remembering the temporal order of information, maintaining focus on relevant cues and flexible thinking, avoiding inappropriate risks, gaining insight into performance deficits, avoiding perseveration on ineffective thoughts and actions, and making behavioral modifications based on new information (7).

Time zone transit, prolonged work hours, and work environments with irregular schedules contribute to performance decrements and fatigue, and therefore pose risks to safe operations. Both aviation and space environments impose such demands on their flight crews.

Fatigue: Aviation Settings

Operator fatigue associated with jet lag is a major concern in aviation, particularly with travel across multiple time zones. Flight crews consequently experience disrupted circadian rhythms and sleep loss. Studies have documented episodes of fatigue and the occurrence of uncontrolled sleep periods (microsleeps) in pilots (8). Flight crewmembers remain at their destination only for a short period, and therefore do not have the opportunity to adjust physiologically to the new time zone and/or altered work schedule before embarking on another assignment, thereby compounding their risk for fatigue.

Notably, although remaining at the new destination for several days after crossing time zones is beneficial, it does not ensure rapid phase shifting (or realignment) of the sleep-wake cycle and circadian system to the new time zone and light-dark cycle. Usually, pilots and flight crew arrive at their new destination with an accumulated sleep debt (i.e., elevated homeostatic sleep drive), because of extended wake duration incurred during air travel. As a result, the first night of sleep in the new time zone will occur without incident, even if it is abbreviated due to a wakeup signal from the endogenous circadian clock. However, on subsequent nights, most individuals will find it more difficult to obtain consolidated sleep because of circadian rhythm disruption. As a result, an individual’s sleep is not maximally restorative across consecutive nights, which leads to increased difficulty in maintaining alertness during the daytime. Such cumulative effects are incapacitating, often taking more than a week to fully dissipate through complete circadian reentrainment to the new time zone.

The magnitude of jet lag effects is also partly dependent on the direction of travel (8). Normally, eastward travel is more difficult for physiological adjustment than westward travel because it imposes a phase advance on the circadian clock, whereas westward transit imposes a phase delay. Because the period of the human endogenous clock is longer than 24 hours, lengthening a day is easier to adjust to physiologically and behaviorally than shortening a day by the same amount of time (8). However, adjustment to either eastward or westward phase shifts often requires at least a 24-hour period for each time zone crossed (e.g., transiting six time zones can require 5-7 days), assuming proper daily exposure to the new light-dark cycle. Regardless of the direction of phase shift imposed on flight crews, if there is inadequate time to adjust physiologically to the new time zone, cumulative sleep debt will develop across days and waking performance deficits will manifest, even if crews report no such deficits (9). These facts and guidelines need to be considered when designing and implementing flight schedules for aviation crews. Current Federal Aviation Regulations (FAR) limit aviation crew scheduling to no more than 14 hours in a day (with 10 consecutive hours of rest immediately preceding) (10), 34 hours in any 7 consecutive days (11), and 120 hours in any calendar month (11).

Beyond aviation pilots, Air Traffic Control Specialists (ATCS) who work on the ground and experience the demands of 24-hour operations also report fatigue (12). Many ATCS work counterclockwise, rapidly rotating shift schedules. Such schedules not only result in difficulties in sleeping but also can produce fatigue, exhaustion, sleepiness, and symptoms of gastrointestinal distress typically found in shift workers (12). The high levels of alertness required over extended periods of time make ATCS vulnerable to the neurobehavioral and work performance consequences of circadian disruption and sleep loss, both of which can compromise air safety.
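The jet lag rule of thumb and the scheduling limits quoted earlier in this section lend themselves to simple arithmetic, sketched here in Python. This is an illustration under stated assumptions, not a regulatory compliance tool: the function names are hypothetical, the hours are treated as generic scheduled hours, the required rest period is not modeled, and the limits are only the three figures quoted in the text.

```python
# Scheduling limits as quoted in the text (hours).
MAX_HOURS_PER_DAY = 14
MAX_HOURS_PER_7_DAYS = 34
MAX_HOURS_PER_MONTH = 120


def min_adjustment_days(time_zones_crossed):
    """Rule of thumb from the text: at least one day per time zone crossed."""
    return abs(time_zones_crossed)


def phase_shift(direction):
    """Eastward travel advances the circadian clock; westward delays it."""
    shifts = {"east": "phase advance", "west": "phase delay"}
    return shifts[direction]


def schedule_violations(daily_hours):
    """List limit violations for consecutive days within one calendar month."""
    violations = []
    for day, hours in enumerate(daily_hours, start=1):
        if hours > MAX_HOURS_PER_DAY:
            violations.append(f"day {day}: {hours} h exceeds the daily limit")
    # Slide a 7-day window; a shorter schedule is checked as a single window.
    for start in range(max(len(daily_hours) - 6, 1)):
        total = sum(daily_hours[start:start + 7])
        if total > MAX_HOURS_PER_7_DAYS:
            violations.append(
                f"window starting day {start + 1}: {total} h exceeds the 7-day limit"
            )
    if sum(daily_hours) > MAX_HOURS_PER_MONTH:
        violations.append("monthly total exceeds the monthly limit")
    return violations


print(min_adjustment_days(6), phase_shift("east"))
print(schedule_violations([10, 10, 10, 10]))
```

Note that a schedule can satisfy the daily limit on every day and still break the 7-day limit, as the four 10-hour days in the usage example do.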

Fatigue: Space Operations

As is true for flight crew, astronauts can also experience performance decrements and fatigue in space. Space operations couple high-level sustained neurobehavioral performance demands with time constraints, and thereby necessitate precise scheduling of sleep opportunities to best preserve optimal performance and reduce fatigue (13). Astronaut sleep is restricted in space flight, averaging only 5 to 6.5 hr/d, as a result of endogenous sleep disturbances (e.g., motion sickness, circadian rhythm desynchrony, etc.), and environmental sleep disruptions (e.g., noise, movement around the sleeper, etc.), as well as sleep curtailment due to work demands (13). Such restriction is of concern, as ground-based experiments indicate that cognitive performance deficits and fatigue progressively worsen (i.e., accumulate) over consecutive days when sleep is restricted to amounts comparable to those experienced repeatedly by astronauts (9,13).

Scheduled sleep time during space flight is primarily determined by the mission’s operational needs. However, sleep is regulated by a complex neurobiology involving homeostatic and circadian mechanisms that interact to determine sleep timing, duration, and structure (7). Therefore, total sleep time during space flight is usually less than scheduled time in bed. Reduced sleep efficiency (the percentage of time in bed actually spent asleep) produces fatigue and can therefore pose a serious risk to performance of critical mission activities. Consequently, sleep-wake schedules that optimize the recovery benefits of sleep, including reducing fatigue, while minimizing required sleep time need to be identified (13). To this end, the current practice in military aviation and some space flight operations of using sleeping medication to ensure that sleep is obtained each day needs to be evaluated for its effectiveness in maintaining performance without creating sedating carryover effects. More research to develop other effective countermeasures for fatigue in space is currently underway, including investigating the use of timed light and the use of timed naps in space (13).
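Sleep efficiency and the accumulation of sleep loss across days can be expressed as simple calculations, sketched below in Python. The function names are hypothetical, and the 8-hour scheduled time in bed and 8-hour nightly sleep need are assumptions made for illustration; neither figure comes from the text.

```python
def sleep_efficiency(hours_asleep, hours_in_bed):
    """Percentage of time in bed that was actually spent asleep."""
    if hours_in_bed <= 0:
        raise ValueError("hours_in_bed must be positive")
    if not 0 <= hours_asleep <= hours_in_bed:
        raise ValueError("hours_asleep must be between 0 and hours_in_bed")
    return 100.0 * hours_asleep / hours_in_bed


def cumulative_sleep_debt(nightly_sleep_hours, sleep_need_hours=8.0):
    """Running shortfall in hours; sleep_need_hours is an assumed value."""
    debt = 0.0
    running = []
    for slept in nightly_sleep_hours:
        # Extra sleep pays the debt down, but the debt never goes below zero.
        debt = max(debt + sleep_need_hours - slept, 0.0)
        running.append(debt)
    return running


# Six hours asleep during an assumed eight-hour scheduled sleep period.
print(sleep_efficiency(6, 8))
print(cumulative_sleep_debt([6, 6, 6]))
```

The running total is a deliberately crude model: it treats every lost hour as equal and ignores circadian timing, so it shows only the direction of the cumulative effect, not its size.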

Conclusion

Fatigue, sleepiness, and performance decrements, including increased reaction times, memory difficulties, cognitive slowing, and attentional lapses, result from acute and chronic sleep loss and circadian displacement of sleep-wake schedules. As such, these are common occurrences in aviation and space environments, which utilize 24-hour work situations. Neurobehavioral and neurobiological research has demonstrated that waking neurocognitive functions depend on stable alertness resulting from adequate daily recovery sleep. Therefore, understanding and mitigating the risks imposed by physiologically based variations in fatigue and alertness in the operational workplace are essential for developing appropriate countermeasures and ensuring safety of flight and space crew.

Human Error

Overview: Aerospace Operations

Human error is a very broad topic whose boundaries are not well defined. For example, no clear boundary exists between degradation in the quality of performance of diverse tasks (as occurs under fatigue or stress, for instance) and discrete failures of action, such as forgetting to set wing flaps before attempting to take off.

James Reason’s book Human Error provides an excellent broad overview of the types of error that occur in everyday and workplace settings, the cognitive processes underlying vulnerability to error, and the ways in which organizational factors contribute to or mitigate the propagation of errors into accidents (14). In aviation, and probably in most domains of skilled performance, most accidents are attributed to human error. An article in 1996 stated that estimates in the literature indicate that somewhere between 70% and 80% of all aviation accidents can be attributed, at least in part, to human error (15).

When professionals make errors while performing tasks not considered terribly difficult, both ordinary citizens and investigating organizations tend to assume these errors to represent some sort of deficiency on the part of the professional who made the error—that person must have lacked skill, was not conscientious, or failed to be vigilant (16). In some cases, those assumptions may be correct, but human factors research reveals that these cases are the exception rather than the rule. Both correct and erroneous performances are the product of the interaction of events, task demands, characteristics of the individual, and organizational factors with the inherent characteristics and limitations of human cognitive processes.

Some level of error is inevitable in the skilled performance of real-world tasks, such as flying airplanes, operating in emergency rooms, or driving automobiles. For example, Helmreich et al. have found that airline flight crews make one or more errors in approximately two thirds of flights observed (17). (These figures are probably undercounts because observers cannot detect all errors.) Because the performance of even the most skilled of experts is fallible, some may argue that automation should replace humans whenever possible. However, this perspective ignores the realities of the human tasks and the situations in which they are performed.

Computers can perform some tasks much faster and more reliably than humans, and those tasks should be assigned to computers. Nevertheless, human judgment is required for situations involving novelty, value judgments, or incomplete or ambiguous information. One would not want the decision of whether to divert a scheduled flight because of a sick passenger to be made by a computer! However, the very reasons that these tasks require human judgment make it unlikely for that judgment to be always correct.

Most errors made by professionals do not result in accidents, either because the errors do not have serious consequences or because the errors are caught and mitigated. Airline operations, for example, have extensive systems of defense to prevent or catch errors (e.g., the use of checklists and having the two pilots in the cockpit cross-check each other’s actions). When accidents do occur it is typically because several factors interact to undermine defenses which the organizations have erected to catch errors. This interaction is partly random, which makes it hard to prevent, as the number of factors that might interact is very large.

Illustrative Description

Because space does not permit a full review of the large research literature addressing the issues described earlier, we will briefly summarize a recent study that illustrates the nature of the errors of skilled experts, the causes of those errors, and possible countermeasures (16). The authors of this study reviewed the entire set of 19 major accidents in U.S. airlines occurring over a 10-year period in which the National Transportation Safety Board (NTSB) found flight crew error to be causal. The study examined the actions of each crew as the flight evolved, asking why any highly experienced crew, in the situation of the accident crew and knowing only what the accident crew knew at each moment, might be vulnerable to doing things in much the same way as the accident crew.

The study found that almost all the events leading to these accidents clustered around five themes, defined as much by the situations confronting the pilots as by the errors they made:

Inadvertent slips and omissions when performing familiar tasks either under routine or challenging conditions. Examples of these slips and omissions are forgetting to arm spoilers before landing, forgetting to turn on pitot heat, and forgetting to set flaps to takeoff position. These omissions are examples of prospective memory errors, a field of research that has recently burgeoned (18). Under benign conditions, these errors are usually caught before they become consequential. However, in the presence of other factors such as interruptions, time pressure, emergencies, fatigue, or stress these errors are much less likely to be caught, sometimes with dire consequences.

Inadequate execution of non-normal procedures under challenging conditions. Pilots in several accidents failed to correctly execute procedures for recovering from a spiral dive, from a stall, and from wind shear. Pilots receive training on these procedures, but studies have shown that in actual flight conditions, many pilots have trouble performing the correct response. One shortcoming of existing training for upset attitude recovery is that in simulation training pilots are expecting the upset and they typically know which upset they are about to encounter. In the real world of surprise, confusion, and stress, however, it is far more difficult to identify the nature of the upset and select and execute the correct response. Another shortcoming is that airline training does not allow pilots to practice responding to upsets often enough for responses to become automatic.

Inadequate response to rare situations for which pilots are not trained. These situations included a false stick-shaker activation just after rotation, an oversensitive autopilot that drove the aircraft toward the ground near decision height, anomalous indications from airspeed indicators that did not become apparent until the aircraft was past rotation speed, and an uncommanded auto-throttle disconnect whose annunciation was not at all salient. The first three of these four situations required quite rapid responses: the crews had at most a few seconds to recognize and analyze a situation for which they were not trained and which they had never encountered, and within those few seconds they had to choose and execute the appropriate action. Here too, surprise, confusion, stress, and time pressure undoubtedly played a role.

Judgment and decision making in ambiguous situations. An example of judgment in ambiguous situations is continuing an approach toward an airport in the vicinity of thunderstorms, exemplified by the accident at Charlotte in 1994. In this accident, the crew was very much aware that they might have to break off their approach and actively made plans for how they would handle this. Unfortunately, by the time it became apparent that they needed to go around, they were already in wind shear, and several other factors interfered with their attempt to recover.

No algorithm exists for crews to calculate exactly how far they may continue an approach near thunderstorms before it should be abandoned. Company guidance is typically expressed in rather general terms, and the crew must make this decision by integrating fragmentary and incomplete information from various sources, and improvising. When an aircraft crashes while attempting an approach under these conditions, the crew could be found at fault. Yet there are reasons to suspect that the decision making of the accident crews was similar to that of crews who were more fortunate. A Lincoln Laboratory study of radar data at Dallas/Fort Worth revealed that when thunderstorms are near the approach path it is not uncommon for airliners to penetrate the cells. Moreover, in the investigation of wind shear accidents it is not uncommon to find that another aircraft landed or took off only a minute or two ahead of the accident aircraft without difficulty. Both crews had the same information and both made the same decision, but rapidly fluctuating conditions allowed one to operate without difficulty and caused the other to crash. Removing the ambiguity through improved procedural norms for operating in these situations, and consistent application of these norms, might reduce accidents that occur during highly dynamic environments where both judgment and luck currently play a role.

Deviation from explicit guidance or Standard Operating Procedure (SOP). An example is attempting to land from an unstabilized approach resulting from a “slam-dunk” clearance, as occurred in an accident at Burbank in 2000, in which the airplane overran the runway and ended up ignominiously at a gas station (16). (Slam-dunk refers to situations in which controllers put the aircraft in a position of being so high and fast that it is difficult for pilots to stabilize aircraft speed, descent rate, and configuration in time to land safely.) If the company has explicit stabilized approach criteria, these deviations may seem simply to be willful violations. However, even here the situation may not be as simple as it seems. Does the company publish and train the stabilized approach criteria as an absolute bottom line or merely as guidance? What are the norms for what most pilots actually do in the company and in the industry? Pilots are influenced by company pressures for on-time performance and fuel economy, which conflict with formal guidance for operations. Also, some pilots may not have been trained to understand that correcting an unstabilized approach imposes so much workload that the flying pilot does not have enough mental capacity left over to reliably assess whether it is possible to get the aircraft stabilized by touchdown.

The study also identified a range of cross-cutting factors that contribute to the vulnerability of pilots in making the sorts of errors described earlier. In many accidents, several of these cross-cutting factors were working simultaneously. These factors include the following:

Situations requiring rapid response. Surprisingly, approximately two thirds of these accidents involved situations in which the crew had only a matter of seconds to choose and execute the appropriate response. Examples include upset attitudes, false stick-shaker activation just after rotation, anomalous airspeed indications at rotation, pilot-induced oscillation during flare, and autopilot-induced oscillation at decision height. This finding is surprising because most threatening situations encountered in airline operations allow the crew time to think through what to do, and in these situations it is important to avoid rushing. We concluded that these 19 accidents included a disproportionately high number of situations requiring very rapid response because, although these situations are quite rare, when they do occur it is extremely difficult for crews to overcome their surprise, assess the situation, and quickly execute the appropriate response. Human cognitive processes simply do not allow pilots to assess novel situations quickly and reliably make the right response.

Challenges of managing multiple tasks concurrently. These challenges appeared in the great majority of accidents. In some cases workload and time constraints were high in the final stages of the accident sequence, and the crew became so overloaded that they failed to recognize that they were losing control of the situation and failed to break off the approach. The accident at Little Rock in 1999 is a case in point, in which an MD-80 crew became so overloaded dodging the weather that they failed to consider the advisability of continuing the approach, failed to arm the spoilers, and failed to adjust the thrust reverser deployment for wet runway conditions. Overloaded crews often become reactive rather than proactive, responding to each new demand on their attention; consequently, little mental capacity is left to think strategically. Monitoring and cross-checking fall by the wayside.

However, in many of these accidents, adequate time was in principle available to perform all required tasks. Unfortunately, the inherent difficulty in reliably switching attention back and forth among concurrent tasks impaired performance in these accidents. Even experienced pilots are vulnerable to becoming preoccupied with one task to the neglect of other tasks, and they also forget to complete a task when interrupted or distracted or when forced to defer a task out of its normal sequence.

Plan continuation bias. This is a powerful but unconscious cognitive bias to continue the original or habitual course of action even when conditions change and that plan is no longer a good idea. The cognitive mechanisms that underlie plan continuation bias are not fully understood, but we suggest that several factors come into play. Individuals may develop an inaccurate mental model of the level of risk in a particular situation because they always got by in the past, not realizing how close they came to the edge of the envelope. Norms come into play strongly here: pilots tend to do what their peers do, especially the flight directly in front of them. Information is often incomplete or ambiguous and generally arrives piecemeal, which makes it hard to integrate, especially under heavy workload, stress, or fatigue. Expectation bias makes us less likely to notice cues that the situation is not what we expect from past experience. In addition, there are subtle and not so subtle pressures from competing organizational goals: pilots are certainly aware that on-time performance and fuel costs directly influence the survival of their companies.

Stress. Stress probably undermined the performance of many of these flight crews; however, it is rather like fatigue in that it is hard to find the smoking gun after the accident. Stress, which is a normal physiological response to threat, hampers skilled performance by narrowing attention and reducing working memory capacity required to execute tasks. In particular, the combination of stress and surprise with requirements to respond rapidly and to manage several tasks concurrently, as occurred in several of these accidents, is a lethal setup.

Social and organizational issues. These issues may have a pervasive influence. For example, how operations are actually conducted on the line may deviate from the ideals expressed in flight operations manuals, for many reasons. Unfortunately, little data is available to accident investigators on the extent to which the accident crews’ actions were typical or atypical of other pilots in the situation they faced. Also, pilots may not be consciously aware of being influenced by competing goals that they have internalized, for example, the tradeoffs between on-time performance and conservative response to ambiguous situations. It is possible for pilots to receive mixed messages: on the one hand, they are supposed to follow company guidance on procedures; on the other hand, they may be told, implicitly or explicitly, that the company’s survival depends on constraining fuel costs and upholding on-time performance.

This study has implications for understanding pilot error and for preventing future accidents. Foremost is that errors and accidents are best thought of as vulnerabilities and failures in the overall system rather than as deficiencies on the part of the pilots who met with accidents. Terms such as complacency and lack of situation awareness are labels, not explanations for why errors occur. Errors occur on almost all flights, but in the vast majority of flights, these errors are prevented from causing accidents. In aviation, a large number of safeguards have been erected to detect and mitigate errors, especially crew resource management (CRM), discussed later in this chapter. The latest version of CRM emphasizes threat and error management (TEM). The methods airlines use to achieve an extraordinarily high level of safety can be adapted to other fields, such as medicine and nuclear and chemical plant operations.

Conclusion

From a systems perspective, the best way to reduce vulnerability to errors and accidents is to design the overall operating system for resilience to equipment failures, unexpected events, uncertainty, and human error. Equipment, procedures, and training must be designed to match human operating characteristics, rather than expecting humans to adapt to the quirks of the equipment they must operate. Pilots can be trained to recognize situations in which they are vulnerable to error and can be provided techniques for reducing vulnerability to specific forms of errors. All organizations, not just airlines, should periodically conduct systematic reviews of their operating procedures and revise procedures that are conducive to error.


Finally, organizations should recognize that efficiency and production throughput are pressures that often compete against safety. Organizations must acknowledge this conflict and take responsibility for establishing policies, procedures, and reward structures that truly support giving safety the highest priority.

Anthropometrics

Overview

“Traditional Anthropometric measurements of bone and other tissue, though of scientific and practical value, are not functional; their applicability is limited to those rather standard conditions which exist when they are taken and they may not be transferred to other postures. Postural and kinematic problems may only be solved by a functional system of measurements.”

W.T. Dempster—from the Space Requirements of the Seated Operator, 1955 (19)

Anthropometrics is the measurement of human bodily characteristics (20). Everyone has seen long lists of anthropometric dimensions, usually presented as tables of percentile values. Ranges of joint motion, reach envelopes, strength profiles, and a great deal of other information on human capabilities and variation are presented in this manner as well. These data are commonly used in specifications for equipment design and to describe population variability. In reality, data of these types are of limited use. Current methods in anthropometry and biomechanics are more along the lines of Dempster’s vision. When designing and testing items of equipment, a systematic approach is used which includes the following:

Defining the anthropometry of the user population in multivariate space

Setting functional requirements, which operators of the equipment must be able to perform, or levels of physical stress they must endure

Testing the ability of the user population to meet the functional and safety requirements of the equipment

Developing predictive equations for modifying the equipment or selecting future users of the system

Designing aircraft cockpits to accommodate the wide range of body sizes existing in the U.S. population has always been a difficult problem for crew station engineers. The approach taken in the design of military aircraft has been to truncate the range of body sizes allowed in flight training, and then to develop standards and specifications to ensure that most of the remaining pilot sizes are accommodated. Accommodation in this instance is defined as the ability to perform the following:

Adequately see, reach, and actuate controls

Maintain external visual fields sufficient to land, clear for other aircraft, and perform a wide variety of missions (ground support/attack or air-to-air combat)

Escape safely if problems arise

Each of these areas is directly affected by the body size of the pilot. Assignment of individuals to aircraft in which they are poorly accommodated puts them at increased risk for mishap. Although only a few accident investigations to date have reported body size as the sole cause of the mishap, there have been many mishaps where body size may have been a contributing factor. Methods for correcting this problem by predicting pilot fit and performance in United States Air Force (USAF) aircraft based on anthropometric data were developed by the military in the 1990s. These methods, discussed in the subsequent text, can be applied to a variety of design applications where fitting the human operator into a system is a major concern.

Illustrative Description

Anthropometric Profiles

What are the anthropometric profiles of the male and female user populations, and how do we represent this variability?

Answering this question usually involves locating appropriate existing datasets or the creation of subsets from similar samples. As military anthropometric surveys are becoming outdated (21), many researchers are using civilian samples such as the 1999 Civilian American and European Surface Anthropometry Resource (CAESAR) (22). Owing to fitness differences between civilian and military populations, the CAESAR survey has to be restructured to match USAF demographic and fitness profiles. Generally, this means selecting individuals from the survey based on height, weight, age, race, and gender.

Once the population of interest has been defined by a sample, the traditional method of describing anthropometric variability uses lists of 5th and 95th percentile values for a large number of dimensions. Nearly all current USAF aircraft were designed in this way. Unfortunately, this method leads to many errors and misconceptions as percentiles are not additive and do not describe variability in body proportions. A multivariate technique for describing body size and shape variability is now used to specify new aircraft designs and existing aircraft modifications.
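The statement that percentiles are not additive can be checked with a short calculation. The sketch below assumes two body dimensions that follow a bivariate normal distribution; the means, standard deviations, and correlation are invented for illustration and are not taken from any survey. It compares the sum of the two 5th percentile values with the true 5th percentile of the combined dimension.

```python
import math
from statistics import NormalDist


def percentile_of_sum(mean_a, sd_a, mean_b, sd_b, corr, p):
    """Quantile p of (A + B) when A and B are bivariate normal."""
    sd_sum = math.sqrt(sd_a**2 + sd_b**2 + 2.0 * corr * sd_a * sd_b)
    return NormalDist(mean_a + mean_b, sd_sum).inv_cdf(p)


def sum_of_percentiles(mean_a, sd_a, mean_b, sd_b, p):
    """Naive approach: stack the separate quantiles of A and B."""
    return (NormalDist(mean_a, sd_a).inv_cdf(p)
            + NormalDist(mean_b, sd_b).inv_cdf(p))


# Hypothetical values in centimetres (mean, standard deviation).
SITTING_HEIGHT = (91.0, 3.5)
BUTTOCK_KNEE_LENGTH = (61.0, 3.0)
CORRELATION = 0.4  # assumed, for illustration only

naive_5th = sum_of_percentiles(*SITTING_HEIGHT, *BUTTOCK_KNEE_LENGTH, 0.05)
true_5th = percentile_of_sum(*SITTING_HEIGHT, *BUTTOCK_KNEE_LENGTH,
                             CORRELATION, 0.05)

if __name__ == "__main__":
    print(f"sum of 5th percentiles: {naive_5th:.1f} cm")
    print(f"5th percentile of sum:  {true_5th:.1f} cm")
```

Under these assumptions, whenever the correlation is below 1 the stacked value is smaller than the true 5th percentile of the combined dimension, so a composite "5th percentile person" is rarer than the label suggests.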

The multivariate method uses a principal component analysis technique developed by Meindl et al. (23). Principal component analysis allows reduction of a long list of measurements to a smaller, more manageable number, and then enables designers to select the desired percentage level of a population to be accommodated. This desired percentage of the population is represented by a small set of selected boundary conditions, which take into account not only size variance but proportional variability as well. Table 23-1 lists the finalized, multivariate boundary cases that were developed for the Joint Primary Aircraft Training System (JPATS) aircraft that later became known as the T-6 Texan II. These boundary cases represent individuals who are uniformly large or small, as well as those whose measurements combine, for example, small torsos with long limbs, and vice versa.

TABLE 23-1 (table contents not available in this copy)

If a workspace is designed to enable all these cases to operate efficiently, then all other less extreme body types and sizes in the target population should also be accommodated. However, as Hendy (24) suggests, for some applications many more model points must be considered. The representative cases may need to be distributed throughout the sample—rather than only on the periphery. This is particularly true in clothing applications. A designer would not want to base a design solely on the most extreme anthropometric combinations. Hence, the actual product being designed dictates the measurements of interest, the percentage of accommodation required, and the number of cases developed.
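The idea of boundary cases can be illustrated with a deliberately reduced sketch. It is not the procedure of Meindl et al. and it does not reproduce the JPATS cases: it assumes only two standardized measurements that follow a bivariate normal distribution, for which the principal components have a closed form (a "size" axis with variance 1 + r and a "proportion" axis with variance 1 - r). The function name, the measurements, and all numerical values are hypothetical.

```python
import math


def boundary_cases(means, sds, corr, coverage=0.95, n_points=8):
    """Points on the ellipse enclosing `coverage` of a bivariate-normal
    population, returned in the original measurement units."""
    inv_sqrt2 = 1.0 / math.sqrt(2.0)
    # Principal components of a 2x2 correlation matrix: (variance, axis).
    components = (
        (1.0 + corr, (inv_sqrt2, inv_sqrt2)),    # overall size
        (1.0 - corr, (inv_sqrt2, -inv_sqrt2)),   # torso-versus-limb contrast
    )
    # Chi-square quantile with 2 degrees of freedom gives the squared radius.
    radius = math.sqrt(-2.0 * math.log(1.0 - coverage))
    cases = []
    for k in range(n_points):
        angle = 2.0 * math.pi * k / n_points
        scores = (radius * math.cos(angle), radius * math.sin(angle))
        z = [0.0, 0.0]
        for score, (variance, axis) in zip(scores, components):
            for i in range(2):
                z[i] += score * math.sqrt(variance) * axis[i]
        # Convert standardized values back to centimetres.
        cases.append(tuple(means[i] + sds[i] * z[i] for i in range(2)))
    return cases


# Hypothetical sitting height and buttock-knee length statistics (cm).
MEANS = (91.0, 61.0)
SDS = (3.5, 3.0)
CASES = boundary_cases(MEANS, SDS, corr=0.4)

if __name__ == "__main__":
    for sitting_height, leg_length in CASES:
        print(f"sitting height {sitting_height:5.1f}  leg {leg_length:5.1f}")
```

In this sketch the cases along the first axis are uniformly large or small, while those along the second axis pair a long torso with short legs or the reverse. A real application would use more measurements and more components, with the percentage of accommodation chosen for the product being designed.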

In addition, principal component analysis cannot describe all the variability in body size that must often be taken into account for a particular design. Some variability in the measurements is lost when a reduced number of components is used. It can also be a needlessly complex technique for some dimensions, for example, when only minimum or maximum values need be known. In the case of shoulder breadth, it does not matter whether the widest or narrowest shoulders are found on an individual with a given sitting height. Shoulder breadth is used to ensure that wide shoulders clear the sides of the cockpit during ejection, and that narrow shoulders fit the restraint system properly. While measurements such as shoulder breadth must be considered in a cockpit design, the largest and smallest expected values for the measurement can be considered separately from the combinations of torso and limb size discussed earlier. A simple listing of the extreme values for measurements that are not related to seat position will suffice. However, this does not mean that a return to percentiles for these measurements is warranted. Dropping a significant percentage of a population for each measurement is a serious error. The values used should be at or very near the population minimum and maximum values for a given measurement. It must be reemphasized that selection of the measurements deemed important in a design application may be the most important step in the entire process.

Operational Requirements

What tasks must be performed in an aircraft to safely and effectively operate it?

These requirements establish the pass/fail criteria that pilots must meet to safely operate that particular aircraft. Although it is obvious that all controls must be reachable in an aircraft during normal operation, understanding pilot reach issues during worst-case scenarios, or emergency conditions, is essential. In an emergency, the inertial reel restraint system may lock, or, due to adverse G forces, the pilot may be pushed into a position from which it is difficult to reach a particular control. For these reasons (critical reaches as well as minimum visual fields to see the landing zone, or other aircraft in a formation), a critical tasks list must be defined. Anthropometric measures are associated with the performance of these critical tasks. These requirements become the pass/fail criteria during accommodation evaluations, or mapping, of the cockpit.

Cockpit Mapping

Can the performance of an individual in a particular cockpit be accurately predicted from their anthropometric measurements, and can these data be used to predict accommodation percentages for an entire population?

Once the operational requirements have been defined, cockpit mapping is used to make measurements on a sample of subjects performing the requirements in a crew station. The sample data are analyzed to produce regression equations that quantify the link between performance and anthropometry. When combined with the list of critical tasks discussed earlier, these data can be used to assess the impact of accommodation limits on the entire population in terms of the percentage that can or cannot operate a particular aircraft safely. Collectively, these regression equations comprise an algorithm that takes into account the adjustment ranges of the seat and controls, and is used to predict performance levels for an individual or a population. On the basis of our previous experience, at least 20 test subjects representing the range of variation as well as the extremes of body size within the potential user population are needed to perform the cockpit evaluation. By combining the algorithms developed on this sample with datasets constructed to represent any current or future pilot population, the severity of the nonaccommodation problem that exists can be determined.


In effect, the subjects are used as human “tools” to establish limits of body size accommodation. Each subject is measured both statically in the laboratory for anthropometry, and as they perform the list of operational requirements in the cockpit. Excess and miss distances are measured so that minimum ability levels can be calculated.
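The link between mapping data and population accommodation can be sketched in a few lines. The example below is a simplified illustration, not the USAF algorithm: it fits a single-predictor least-squares line relating one anthropometric measure to the measured reach excess (negative values are miss distances) and then applies that line to a population sample. The function names and all data values are hypothetical, the data are noise-free for clarity, and only four subjects are shown although an actual evaluation would use at least 20 and would account for seat and control adjustment ranges.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def percent_accommodated(population, slope, intercept, min_excess=0.0):
    """Share of the population predicted to reach the control with at
    least `min_excess` centimetres to spare."""
    passed = sum(1 for x in population if slope * x + intercept >= min_excess)
    return 100.0 * passed / len(population)


# Hypothetical mapping data: arm reach (cm) and reach excess to one
# critical control (cm); negative excess means the subject missed it.
SUBJECT_REACH_CM = [70.0, 75.0, 80.0, 85.0]
SUBJECT_EXCESS_CM = [-3.0, -0.5, 2.0, 4.5]

SLOPE, INTERCEPT = fit_line(SUBJECT_REACH_CM, SUBJECT_EXCESS_CM)

# Hypothetical sample representing the pilot population of interest.
POPULATION_REACH_CM = [71.0, 74.0, 77.0, 79.0, 82.0]
PERCENT_OK = percent_accommodated(POPULATION_REACH_CM, SLOPE, INTERCEPT)

if __name__ == "__main__":
    print(f"excess = {SLOPE:.2f} * reach + {INTERCEPT:.2f}")
    print(f"predicted accommodation: {PERCENT_OK:.0f}%")
```

Repeating this step for each critical task, and counting only individuals who pass every task, would give an overall accommodation percentage for the crew station under the same assumptions.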

Generally, seven aspects of anthropometric accommodation are examined, which include the following:

Overhead clearance

Rudder pedal operation

Internal and external visual field

Static ejection clearances of the knee, leg, and torso with cockpit structures (i.e., canopy bow)

Operational leg clearances with the main instrument panel

Operational leg clearance with the control stick motion envelope and pilot’s ability to attain the full range of stick travel
